10 Key Announcements from Microsoft's Build 2024: Copilot, Large Models, and Generative AI

Microsoft Build 2024 Highlights: Copilot, Large Models, and the Era of Generative AI!

GitHub Copilot now has 1.8 million users, and it is already changing how developers work.

On May 22, Microsoft held the "Build 2024" global developer conference in Seattle. This 3-day event focused heavily on large models and generative AI.

From the first day's announcements, it's clear that large models and generative AI are integral to all of Microsoft's development platforms and product lines. For example, Copilot Studio supports developing applications with AI Agent features, and the new Azure AI Studio platform supports over 1,600 large models, including GPT-4o and Phi-3-vision.

There was a lot of information released on the first day, so I've summarized the most important points for you. For products and models that have been officially released, I've included detailed links for you to explore.

Azure AI Studio Officially Launched

Generative AI applications such as ChatGPT have become important in both daily life and work, but building them is hard. To help developers, Microsoft has officially launched Azure AI Studio.

With Azure AI Studio, developers can quickly adopt features such as Retrieval-Augmented Generation (RAG), test and evaluate large models, deploy them at scale, and monitor them continuously after deployment.
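For readers new to Retrieval-Augmented Generation, here is a minimal sketch of the idea: retrieve the most relevant text for a question, then place it in the prompt sent to a model. This is not Azure AI Studio's API. The function names, the bag-of-words similarity, and the sample documents are all assumptions chosen for illustration, and the step that actually calls a model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: list[str], top_k: int = 1) -> str:
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base, using facts from this article.
DOCS = [
    "Phi-3-mini has 3.8 billion parameters.",
    "Azure AI Studio supports over 1,600 models.",
    "Phi Silica ships in Copilot+ PCs.",
]

if __name__ == "__main__":
    # The resulting prompt would then be sent to whichever model you deploy.
    print(build_prompt("How many parameters does Phi-3-mini have?", DOCS))
```

A production setup would typically replace the word-count similarity with embeddings and a vector index, but the retrieve-then-prompt flow stays the same.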

Try it for free: https://azure.microsoft.com/en-us/products/ai-studio

One of the key features of Azure AI Studio is its support for over 1,600 large models, including GPT-4o, Phi-3-vision, Llama 3, and TimeGEN-1. This helps developers quickly find the model that best fits their needs.

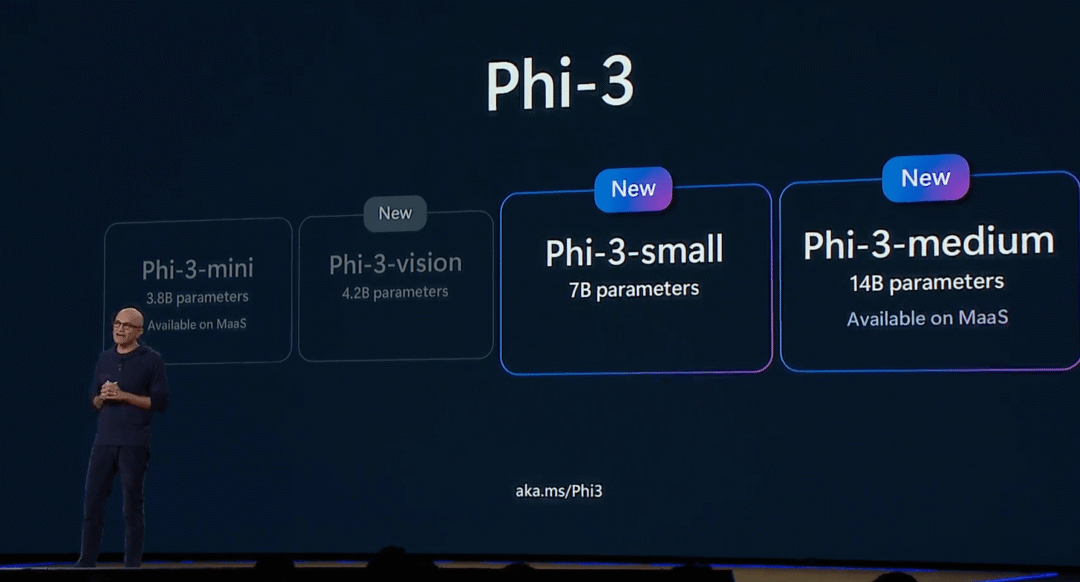

Phi-3 Series Models Launched: Small Parameters, Strong Performance

Microsoft previously released the Phi-1 and Phi-2 models, whose small size, strong performance, and low power consumption made them popular with developers.

In April, Microsoft unveiled the open-source Phi-3 series. These models are now available on Microsoft Azure.

The Phi-3 series includes:

Phi-3-vision: 4.2 billion parameters, a multimodal model that combines language and vision capabilities.

Phi-3-mini: 3.8 billion parameters, available with 128K and 4K context windows.

Phi-3-small: 7 billion parameters, with performance stronger than GPT-3.5.

Phi-3-medium: 14 billion parameters, outperforming Google Gemini 1.0 Pro.

Phi-3-mini is the most popular, with over 1 million downloads on Hugging Face.
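To put the parameter counts in perspective, the sketch below estimates how much memory the weights alone would need at two precisions. It is a back-of-the-envelope calculation, not a published figure: the 16-bit and 4-bit precisions are assumptions, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Parameter counts as listed in this article.
PHI3_PARAMS = {
    "Phi-3-mini": 3.8e9,
    "Phi-3-vision": 4.2e9,
    "Phi-3-small": 7e9,
    "Phi-3-medium": 14e9,
}


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in PHI3_PARAMS.items():
        fp16 = weight_memory_gb(params, 16)  # assumed 16-bit weights
        int4 = weight_memory_gb(params, 4)   # assumed 4-bit quantization
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Under these assumptions, Phi-3-mini needs about 7.6 GB for 16-bit weights and about 1.9 GB at 4 bits, which helps explain why models of this size are practical on consumer hardware.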

Try it for free: https://ai.azure.com/explore/models/Phi-3-vision-128k-instruct/version/1/registry/azureml

Open source: https://huggingface.co/collections/microsoft/phi-3-6626e15e9585a200d2d761e3

The Phi-3 family also includes a special model, Phi Silica, which is built into the new Copilot+ PCs.

Phi Silica has 3.3 billion parameters, can generate 27 tokens per second, and draws only 1.5 watts of power.
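Taking those figures at face value, a quick calculation shows what they imply per token. This is only a sketch: it assumes the 1.5 W draw applies for the whole generation and that throughput stays constant at 27 tokens per second. The 500-token reply length is a hypothetical example.

```python
POWER_WATTS = 1.5          # power draw quoted in this article
TOKENS_PER_SECOND = 27.0   # generation speed quoted in this article


def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy spent per generated token, in joules (1 W = 1 J/s)."""
    return power_watts / tokens_per_second


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate num_tokens at a constant rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    reply_tokens = 500  # hypothetical reply length
    per_token = joules_per_token(POWER_WATTS, TOKENS_PER_SECOND)
    duration = seconds_to_generate(reply_tokens, TOKENS_PER_SECOND)
    print(f"{per_token:.3f} J per token")
    print(f"{reply_tokens} tokens: {duration:.1f} s, {per_token * reply_tokens:.1f} J")
```

That works out to roughly 0.056 joules per token, or about 18.5 seconds and 27.8 joules for a 500-token reply.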

This makes Phi Silica well suited to laptops, tablets, and mobile devices, and one of the most capable small models for on-device use today.

Phi Silica: https://learn.microsoft.com/en-us/windows/ai/apis/phi-silica?ref=maginative.com

Microsoft has also released an SDK for Phi Silica for developers who want to try it.