5 Steps to Create Your Own Open-Source AI Copilot

Building a Generative Search Engine for Local Files with Llama 3

Microsoft Copilot offers similar features, but it is a proprietary product. I wanted to build an open-source version myself, and in this article I share what I learned while putting the system together quickly.

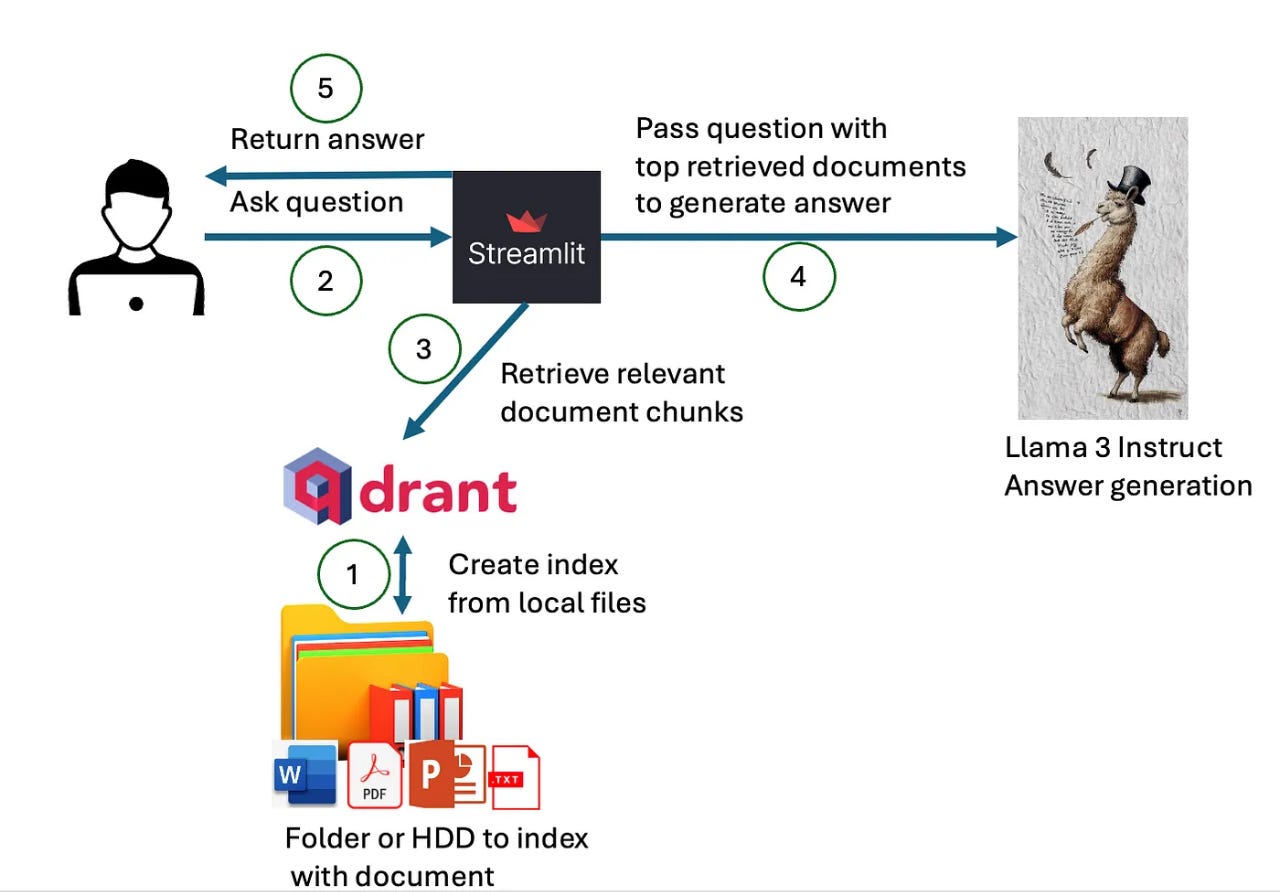

System Design

To build a local search engine or assistant that generates answers, we need three components:

An index of the content of local files, plus a search engine that retrieves the documents most relevant to a question.

A language model that selects content from those local documents and generates a summarized answer.

A user interface.

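Qdrant stores the vector data, and Streamlit provides the user interface. Llama 3 generates the answers: the 70B version is accessed through the Nvidia NIM API, and the 8B version can be downloaded from Hugging Face and run locally. LangChain splits documents into chunks before they are indexed.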
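LangChain ships several text splitters (for example `RecursiveCharacterTextSplitter`, configured with `chunk_size` and `chunk_overlap`); which one this project uses is not specified here. To show what chunking with overlap does, here is a minimal pure-Python sketch. The function name and the default sizes are assumptions, and unlike LangChain's recursive splitter it cuts on character counts only, ignoring paragraph and sentence boundaries.

```python
def split_into_chunks(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list[str]:
    # Hypothetical, simplified stand-in for a LangChain splitter: fixed-size
    # character windows, where consecutive windows share `chunk_overlap` characters.
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

The overlap means text near a chunk boundary also appears in the neighbouring chunk, so a passage cut in half by one window is more likely to be retrieved whole from the other.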

First, we index local files so we can search their content.
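Before anything can be embedded and stored in Qdrant, the files have to be collected and split. The sketch below covers only that first half of indexing, under stated assumptions: it reads plain-text `.txt` and `.md` files only, treats blank-line-separated paragraphs as chunks, and keeps each chunk's file path so the answer can cite it later. The `Chunk` record and `collect_chunks` helper are hypothetical names, not part of any library.

```python
from dataclasses import dataclass
from pathlib import Path

# Assumption: only plain-text formats are indexed; PDFs, DOCX, etc. would need
# their own text extractors.
TEXT_EXTENSIONS = {".txt", ".md"}


@dataclass
class Chunk:
    path: str      # source file, kept so answers can cite it
    position: int  # index of the chunk within its file
    text: str


def collect_chunks(root: str) -> list[Chunk]:
    """Walk `root` recursively and split each text file into paragraph chunks."""
    chunks: list[Chunk] = []
    for file in sorted(Path(root).rglob("*")):
        if not file.is_file() or file.suffix.lower() not in TEXT_EXTENSIONS:
            continue  # skip directories and unsupported formats
        content = file.read_text(encoding="utf-8", errors="ignore")
        paragraphs = [p.strip() for p in content.split("\n\n") if p.strip()]
        for i, paragraph in enumerate(paragraphs):
            chunks.append(Chunk(path=str(file), position=i, text=paragraph))
    return chunks
```

In the full system, each `Chunk` would then be embedded and stored in Qdrant with its path as payload metadata.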

When a user asks a question, we query the index for the documents most likely to contain the answer.
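Retrieval boils down to ranking stored vectors by their similarity to the question's vector. The sketch below shows top-k cosine-similarity search in pure Python. It is an assumption-laden stand-in: `embed` is a toy hashed bag-of-words function (a real system would use a sentence-embedding model), and the linear scan in `top_k` replaces the similarity search Qdrant performs. `DIM`, `embed`, and `top_k` are hypothetical names.

```python
import math
import re
import zlib
from collections import Counter

DIM = 256  # size of the toy vectors (hypothetical)


def embed(text: str) -> list[float]:
    """Toy embedding: hashed bag of words, scaled to unit length.

    Stand-in for a real embedding model; it only captures shared words, not meaning.
    """
    vector = [0.0] * DIM
    for word, count in Counter(re.findall(r"\w+", text.lower())).items():
        vector[zlib.crc32(word.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


def top_k(query: str, documents: list[str], k: int = 3) -> list[tuple[int, float]]:
    """Return (document index, cosine similarity) pairs for the k closest documents."""
    q = embed(query)
    scores = []
    for i, doc in enumerate(documents):
        d = embed(doc)
        # Non-empty texts map to unit vectors, so the dot product is the cosine similarity.
        scores.append((i, sum(a * b for a, b in zip(q, d))))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]
```

Because every vector is normalized once, the dot product alone ranks documents, which is the same reason vector databases typically offer cosine distance on normalized embeddings.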

We then pass these documents, together with the question, to the language model, which generates an answer grounded in the document content.
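The retrieved documents and the question have to be combined into a single prompt. Here is a minimal sketch of that step, assuming each document arrives as a `(path, text)` pair. The `build_prompt` name and the instruction wording are hypothetical, not the article's actual prompt. Numbering the documents lets the model refer to them by number when it lists its sources.

```python
def build_prompt(question: str, documents: list[tuple[str, str]]) -> str:
    """Assemble a retrieval-augmented prompt from (source path, text) pairs."""
    # Number documents from 1 so the model can cite them as [1], [2], ...
    context = "\n\n".join(
        f"[{i}] (source: {path})\n{text}"
        for i, (path, text) in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the documents below. "
        "If they do not contain the answer, say that you don't know. "
        "After the answer, list the numbers of the documents you used, "
        "e.g. 'Sources: [1], [3]'.\n\n"
        f"Documents:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The resulting string would then be sent to Llama 3, whether through the hosted 70B model or a locally run 8B model.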

We also ask the model to list the documents it used, so that the answer can cite its sources. Finally, the interface displays the answer and the cited documents to the user.
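To show sources in the interface, the application has to map the model's citations back to files. The sketch below assumes the model was asked to end its reply with a line such as `Sources: [1], [3]`, where the numbers follow the document order in the prompt. `extract_sources` is a hypothetical helper; it drops duplicate and out-of-range citations, since a model can cite a document number that was never provided.

```python
import re


def extract_sources(answer: str, paths: list[str]) -> tuple[str, list[str]]:
    """Split a model reply into its answer body and the file paths it cites."""
    body, sep, sources_line = answer.rpartition("Sources:")
    if not sep:  # the model did not include a sources line
        return answer.strip(), []
    cited: list[str] = []
    for number in re.findall(r"\[(\d+)\]", sources_line):
        index = int(number) - 1  # citations are 1-based
        if 0 <= index < len(paths) and paths[index] not in cited:
            cited.append(paths[index])
    return body.strip(), cited


print(extract_sources("Paris is the capital.\nSources: [2], [1], [9]", ["a.txt", "b.txt"]))
# ('Paris is the capital.', ['b.txt', 'a.txt'])
```

In a Streamlit front end, the body could be rendered with `st.markdown` and the cited paths listed beneath it.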