Autoregressive Models Show Scaling Potential in the Field of Image Generation

This article looks at the potential of autoregressive models in image generation, the scaling laws they exhibit, and a framework that unifies generative and discriminative tasks.

Autoregressive Methods: Observing Scaling Laws in Image Generation

"Has Scaling Law hit a wall?" This has recently become one of the most debated topics in the AI community.

The discussion began with an article from The Information, which claimed that OpenAI's next-generation flagship model improves on its predecessor by a smaller margin than earlier generations did. The article attributed this to the dwindling supply of high-quality text and other data, which could threaten the traditional Scaling Law recipe of training larger models on more data.

Following the article, many rebutted the claim, arguing that Scaling Law hasn’t yet reached its limits. Numerous teams training large models continue to see consistent performance improvements.

Moreover, what we now call the Scaling Law mainly describes the training phase. Scaling at inference time remains underexplored, and techniques such as test-time compute could raise model capabilities further.

Interestingly, some have pointed out that Scaling Law is increasingly evident in domains beyond text, such as time series forecasting, as well as image and video generation in the visual domain.

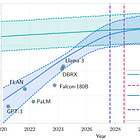

The image shown here is from a paper submitted to ICLR 2025. The study shows that the Scaling Law can also be observed when GPT-like autoregressive models are applied to image generation.

Specifically, as model size increases, training loss decreases, generation performance improves, and the ability to capture global information strengthens.
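To make the idea of "loss decreasing with model size" concrete, here is a minimal sketch of how such a trend is often summarized: by fitting a power law, loss ≈ a · N^(-b), as a straight line in log-log space. This is an illustration under stated assumptions, not the paper's method. The functional form, the helper name `fit_power_law`, and every number below are hypothetical. The data is synthetic and generated from the formula itself.

```python
import numpy as np

def fit_power_law(model_sizes, losses):
    """Fit loss ~= a * size**(-b) via linear regression in log-log space.

    Hypothetical helper for illustration; returns the pair (a, b).
    """
    log_n = np.log(np.asarray(model_sizes, dtype=float))
    log_l = np.log(np.asarray(losses, dtype=float))
    # In log space the power law is a line: log L = log a - b * log N
    slope, intercept = np.polyfit(log_n, log_l, deg=1)
    return float(np.exp(intercept)), float(-slope)

# Synthetic data only: made-up parameter counts and made-up constants,
# NOT measurements from the paper.
sizes = np.array([1e8, 3e8, 1e9, 3e9])
true_a, true_b = 20.0, 0.1
losses = true_a * sizes ** (-true_b)

a, b = fit_power_law(sizes, losses)
print(f"fitted a={a:.3f}, b={b:.3f}")
```

A pure power law with no irreducible-loss term is a simplification. With real training curves the fit is noisy, and whether it holds at larger scales is exactly the open question the authors raise.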

Paper Title: Elucidating the Design Space of Language Models for Image Generation

Paper Link: https://arxiv.org/pdf/2410.16257

Code and Model: https://github.com/Pepper-lll/LMforImageGeneration

Dr. Qixian Biao of Yun Tian Li Fei, one of the authors, remarked in an interview with Synced:

"We don’t yet know the full extent of Scaling Law in image generation. For instance, if we scale an image generation model to the size of Llama 7B, would GPT-like autoregressive methods exhibit immense potential?"

This curiosity led them to conduct preliminary experiments. They found that even halfway through training, autoregressive models displayed strong Scaling Law behavior in image generation tasks.

This discovery bolstered their confidence in applying autoregressive methods to visual tasks. At least in image and video generation, the Scaling Law remains robust and is far from hitting a wall.

In another paper, Dr. Qixian Biao and colleagues identified room for improvement in traditional autoregressive methods when applied to image tasks.

They introduced an improved method called BiGR, built upon MAR (masked autoregressive) techniques by Kaiming He and colleagues. BiGR achieved advancements in several areas, becoming the first conditional generation model to unify generative and discriminative tasks within a single framework.

This means BiGR serves not only as an effective image generator but also as a powerful feature extractor, with the two functionalities mutually reinforcing each other.

Paper Title: BiGR: Harnessing Binary Latent Codes for Image Generation and Improved Visual Representation Capabilities

Paper Link: https://arxiv.org/pdf/2410.14672

Code and Model: https://github.com/haoosz/BiGR

These findings provide inspiration for the research community to further explore Scaling Laws in autoregressive models for visual tasks.

In this article, we take a closer look at both studies. The code and models for both projects are already publicly available.