Bitnet.cpp: Microsoft Open-Sources 1-Bit Inference Framework, Running 100B Models on CPUs

Microsoft's open-source 1-bit inference framework, Bitnet.cpp, can run 100B models on CPUs up to 6x faster while cutting energy use by up to 82%.

A few days ago, Microsoft open-sourced Bitnet.cpp, which is big news.

In short, you can now run massive models, with 72B parameters or even more, on a CPU.

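The reason is that these models use extremely low-precision weights. BitNet b1.58 restricts each weight to one of three values (-1, 0, +1), which works out to about 1.58 bits per weight, so such models are commonly called 1-bit LLMs. Bitnet.cpp is a highly efficient inference framework for these models, letting you run 100B models on local devices up to about six times faster than llama.cpp while reducing energy consumption by up to 82.2%.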
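To make the ternary idea concrete, here is a minimal pure-Python sketch, assuming the absmean scheme described in the BitNet b1.58 paper: scale a weight matrix by its mean absolute value, then round and clip each entry to -1, 0, or +1. The function names `absmean_ternarize` and `ternary_matvec` are hypothetical, and this is not how Bitnet.cpp's optimized kernels are written. It only illustrates why ternary weights turn matrix multiplication into additions and subtractions. Activation quantization is omitted for brevity.

```python
def absmean_ternarize(weights, eps=1e-5):
    """Map a weight matrix (list of rows) to {-1, 0, +1} plus one scale.

    Sketch of absmean quantization: divide by the mean absolute weight
    (gamma), round to the nearest integer, and clip to [-1, 1].
    """
    flat = [w for row in weights for w in row]
    gamma = sum(abs(w) for w in flat) / len(flat)
    ternary = [
        [max(-1, min(1, round(w / (gamma + eps)))) for w in row]
        for row in weights
    ]
    return ternary, gamma


def ternary_matvec(ternary, scale, x):
    """Compute (scale * ternary) @ x using only additions and subtractions."""
    out = []
    for row in ternary:
        acc = 0.0
        for t, xi in zip(row, x):
            if t == 1:
                acc += xi  # +1 weight: add the activation
            elif t == -1:
                acc -= xi  # -1 weight: subtract the activation
            # 0 weight: contributes nothing, so it is skipped
        out.append(acc * scale)  # one multiply per output row
    return out


W = [[0.9, -0.05, -1.2],
     [0.3, 0.6, -0.4]]
x = [1.0, 2.0, 3.0]

T, gamma = absmean_ternarize(W)
print(T)  # [[1, 0, -1], [1, 1, -1]]
print(ternary_matvec(T, gamma, x))
```

Because every weight is -1, 0, or +1, the inner loop needs no multiplications; a single multiply per output row applies the scale. Keep in mind that BitNet b1.58 models are trained with this quantization in the loop rather than converted after training, so a toy matrix like this one says nothing about real model accuracy.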

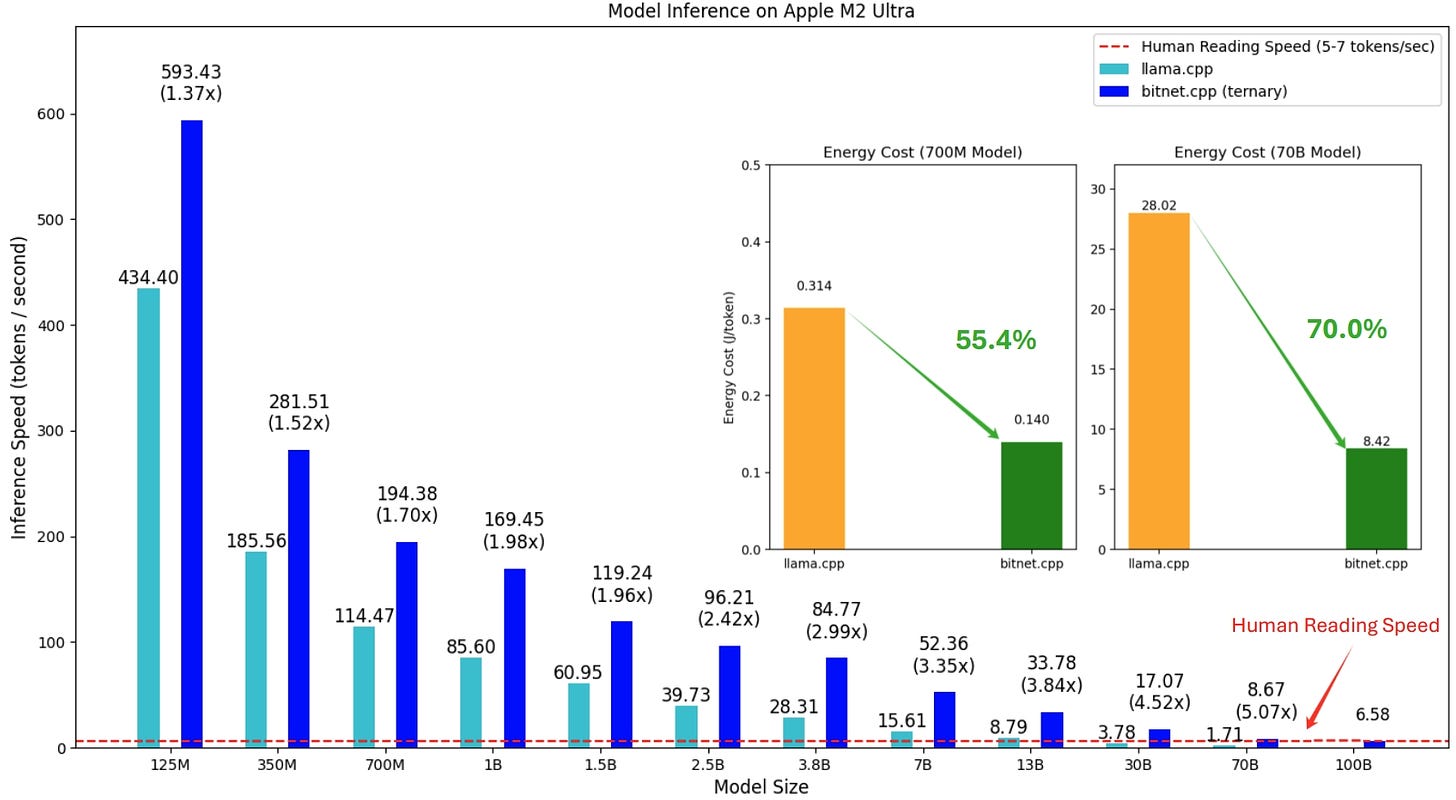

The first release targets CPUs, and NPU and GPU support is planned. On ARM CPUs, Bitnet.cpp speeds up inference by 1.37x to 5.07x while reducing energy consumption by 55.4% to 70.0%.

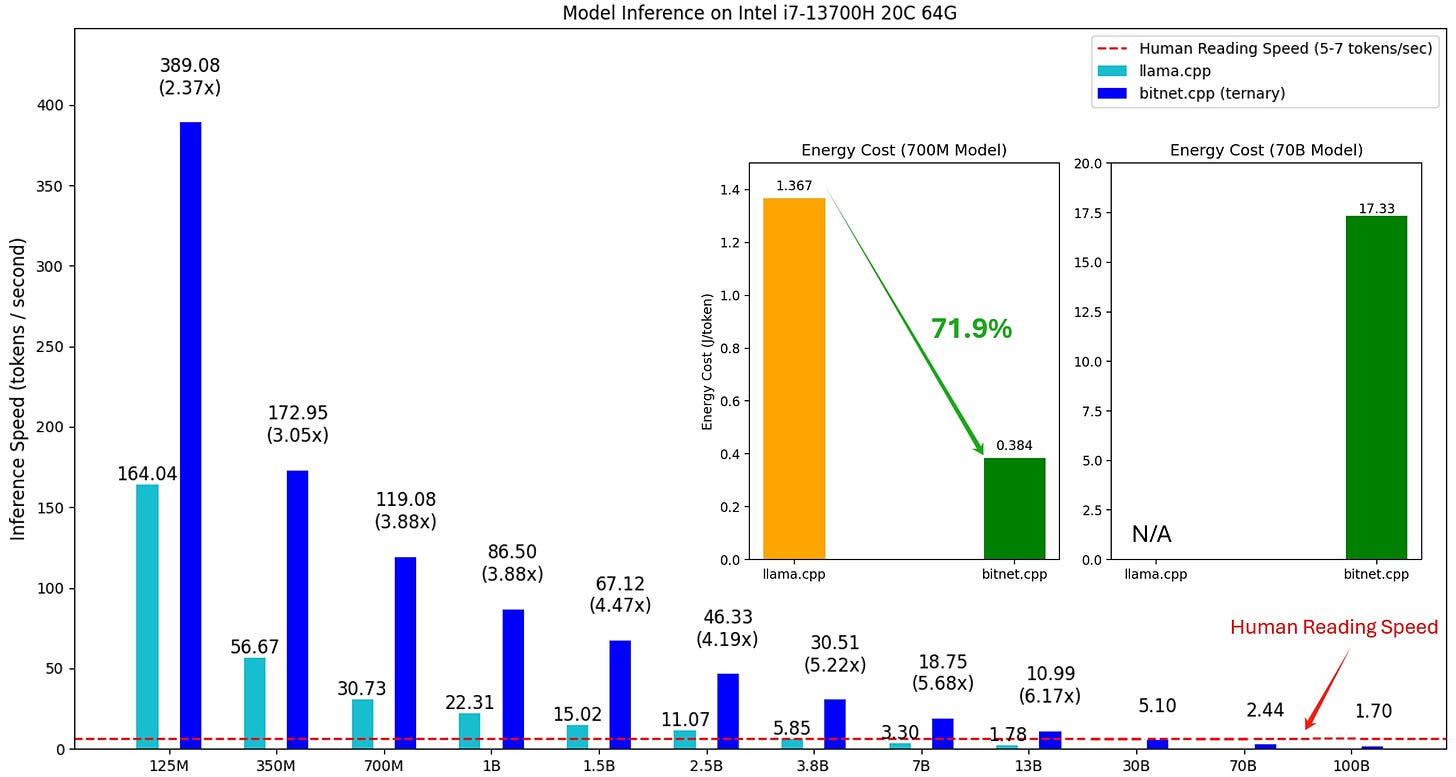

On x86 CPUs, the speedup ranges from 2.37x to 6.17x, and energy consumption drops by 71.9% to 82.2%.

This framework can run a 100B bitnet_b1_58 model (a model in the BitNet b1.58 family, with ternary weights) on a single CPU at 5-7 tokens per second.
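A back-of-the-envelope calculation helps explain why a 100B model can fit in the memory of one machine. The sketch below assumes weight storage is simply parameters × bits per weight and ignores activations, the KV cache, any layers kept at higher precision, and file-format overhead. The 2-bit row assumes each ternary weight is packed into two bits, a simple layout chosen for illustration; `weight_memory_gb` is a hypothetical helper.

```python
import math


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 100e9  # 100B parameters

fp16 = weight_memory_gb(PARAMS, 16)                     # 200.0 GB
ternary_ideal = weight_memory_gb(PARAMS, math.log2(3))  # ~19.8 GB
ternary_2bit = weight_memory_gb(PARAMS, 2)              # 25.0 GB

for label, gb in [
    ("FP16", fp16),
    ("ternary, log2(3) bits", ternary_ideal),
    ("ternary, 2-bit packed", ternary_2bit),
]:
    print(f"{label:>24}: {gb:6.1f} GB")
```

Going from 16 bits to roughly 2 bits per weight shrinks the weight footprint about eightfold, from around 200 GB to roughly 20 to 25 GB. Since generating each token means reading through the weights, a smaller footprint also helps throughput on CPUs, where memory bandwidth is often the bottleneck.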

That is comparable to human reading speed, which makes running large language models on local devices far more practical.

You can install it on Ubuntu Linux, Windows, or macOS, and it supports both x86 and ARM architectures.

So, let’s see how it works.