Cosmos: NVIDIA's Open-Source SOTA Tokenizer for Video Generation and Robotics

Discover the role of tokenizers in image and video generation. Explore NVIDIA's Cosmos Tokenizer, offering SOTA performance, fast encoding, and high-quality results.

The Importance of Tokenizers in Image and Video Generation

Discussions of image and video generation models tend to focus on model architectures, such as the well-known Diffusion Transformer (DiT). Tokenizers, however, are just as critical.

Researchers at Google highlighted this in a paper titled "Language Model Beats Diffusion - Tokenizer is Key to Visual Generation". They showed that a language model equipped with a strong tokenizer could outperform the state-of-the-art diffusion models of the time.

One of the paper's authors, Lu Jiang, remarked in an interview: “Our research might make the community realize that tokenizers are an overlooked field, deserving more attention and development.”

In image and video generation models, the core role of a tokenizer is to convert continuous, high-dimensional visual data (e.g., images and video frames) into a form the model can process: compact semantic tokens. How well these tokens represent visual content is vital to both training and generation.
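The conversion from pixels to compact tokens can be illustrated with a toy discrete tokenizer in the vector-quantization style. The image is cut into patches, and each patch is replaced by the index of its nearest vector in a codebook. This is a minimal sketch under stated assumptions, not how Cosmos or the Google paper's tokenizer works internally. Real tokenizers learn the encoder, decoder, and quantization scheme from data. Here the codebook is random and the "encoder" just flattens patches. The names `patchify`, `tokenize`, and `detokenize` are hypothetical.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (list of lists) into flattened patch x patch blocks."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            block = [image[top + i][left + j] for i in range(patch) for j in range(patch)]
            patches.append(block)
    return patches

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def tokenize(image, codebook, patch):
    """Map each patch to the index of its nearest codebook vector (a discrete token ID)."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(p, codebook[k]))
        for p in patchify(image, patch)
    ]

def detokenize(tokens, codebook, patch, width):
    """Rebuild an approximate image by pasting each token's codebook vector back in place."""
    per_row = width // patch
    rows = (len(tokens) // per_row) * patch
    image = [[0.0] * width for _ in range(rows)]
    for n, k in enumerate(tokens):
        top, left = (n // per_row) * patch, (n % per_row) * patch
        for i in range(patch):
            for j in range(patch):
                image[top + i][left + j] = codebook[k][i * patch + j]
    return image

# Hypothetical usage: an 8x8 image (64 values) becomes 4 tokens drawn from a 32-entry codebook.
random.seed(0)
image = [[random.random() for _ in range(8)] for _ in range(8)]
codebook = [[random.random() for _ in range(16)] for _ in range(32)]  # one entry per 4x4 patch
tokens = tokenize(image, codebook, patch=4)
print(tokens)  # four integer IDs in [0, 32)
approx = detokenize(tokens, codebook, patch=4, width=8)
```

The gap between `image` and `approx` is the information the tokens failed to capture. A learned codebook and decoder exist to make that gap small.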

As the authors noted, “The tokenizer’s purpose is to establish connections between tokens, enabling the model to clearly understand its task. The better these connections are, the more potential the LLM model can unleash.”

Tokenizers: A Key Component of Generative AI

Tokenizers learn a latent space through unsupervised learning and map raw data into efficient, compressed representations. Visual tokenizers specifically handle high-dimensional visual data such as images and videos. They convert this data into compact semantic tokens, which makes training large-scale models more efficient and reduces inference costs.
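The savings from compression can be made concrete with simple shape arithmetic. This sketch assumes a hypothetical video tokenizer that downsamples time by 4x and height and width by 8x each. It also assumes the frame count and resolution divide evenly. Real tokenizers may handle frame counts differently, for example by treating the first frame separately. The `Compression` class and both helper functions are illustrative only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Compression:
    """Hypothetical downsampling factors of a video tokenizer's encoder."""
    temporal: int
    spatial: int  # applied to both height and width

def latent_grid(frames, height, width, c):
    """Return the (T', H', W') token grid for a video, requiring exact divisibility."""
    for size, factor in ((frames, c.temporal), (height, c.spatial), (width, c.spatial)):
        if size % factor:
            raise ValueError(f"{size} is not divisible by {factor}")
    return frames // c.temporal, height // c.spatial, width // c.spatial

def token_count(frames, height, width, c):
    """Number of latent positions (tokens) the model has to process."""
    t, h, w = latent_grid(frames, height, width, c)
    return t * h * w

c = Compression(temporal=4, spatial=8)
pixels = 32 * 256 * 256                    # pixel positions in a 32-frame 256x256 clip
tokens = token_count(32, 256, 256, c)
print(latent_grid(32, 256, 256, c))        # (8, 32, 32)
print(tokens, pixels // tokens)            # 8192 256
```

Each of the 8,192 positions holds one integer ID for a discrete tokenizer, or a short feature vector for a continuous one. Full self-attention cost grows with the square of sequence length, so a 256x shorter sequence means roughly 65,000x fewer token pairs to attend over.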

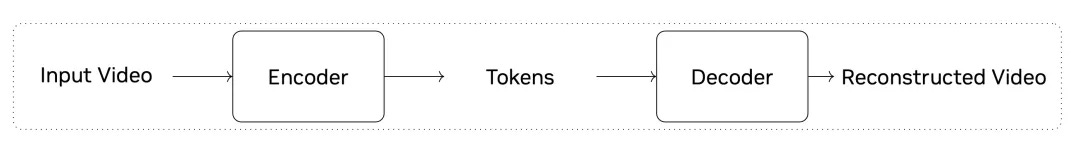

The diagram below illustrates the tokenization process for video data.

Although many open-source video and image tokenizers are available, they often produce low-quality data representations. This leads to problems such as distorted images and unstable videos in the models that use them.
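Reconstruction quality is commonly measured by encoding and then decoding the input and comparing the result with the original. Peak signal-to-noise ratio (PSNR) is one standard metric for this comparison. The sketch below assumes pixel values in [0, 1] stored as flat lists per frame. The function names are hypothetical.

```python
import math

def psnr(original, reconstructed, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf  # perfect reconstruction
    return 10 * math.log10(max_value ** 2 / mse)

def per_frame_psnr(video, reconstruction, max_value=1.0):
    """PSNR for each frame of a video (a list of flat frames)."""
    return [psnr(f, g, max_value) for f, g in zip(video, reconstruction)]

original = [0.0, 0.5, 1.0, 0.5]
reconstructed = [0.1, 0.5, 0.9, 0.5]
print(round(psnr(original, reconstructed), 2))  # 23.01
```

Higher PSNR means a more faithful reconstruction. Reporting PSNR per frame makes it easier to spot frames that reconstruct much worse than their neighbors.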

Inefficient tokenization also slows encoding and decoding. This lengthens training and inference times and hurts both developer productivity and user experience.