Deep Learning Pioneer Hinton on Large Models and "Next Word Prediction"

Hinton's Interview: Insights on AI's Future, Large Models, and His Pioneering Journey

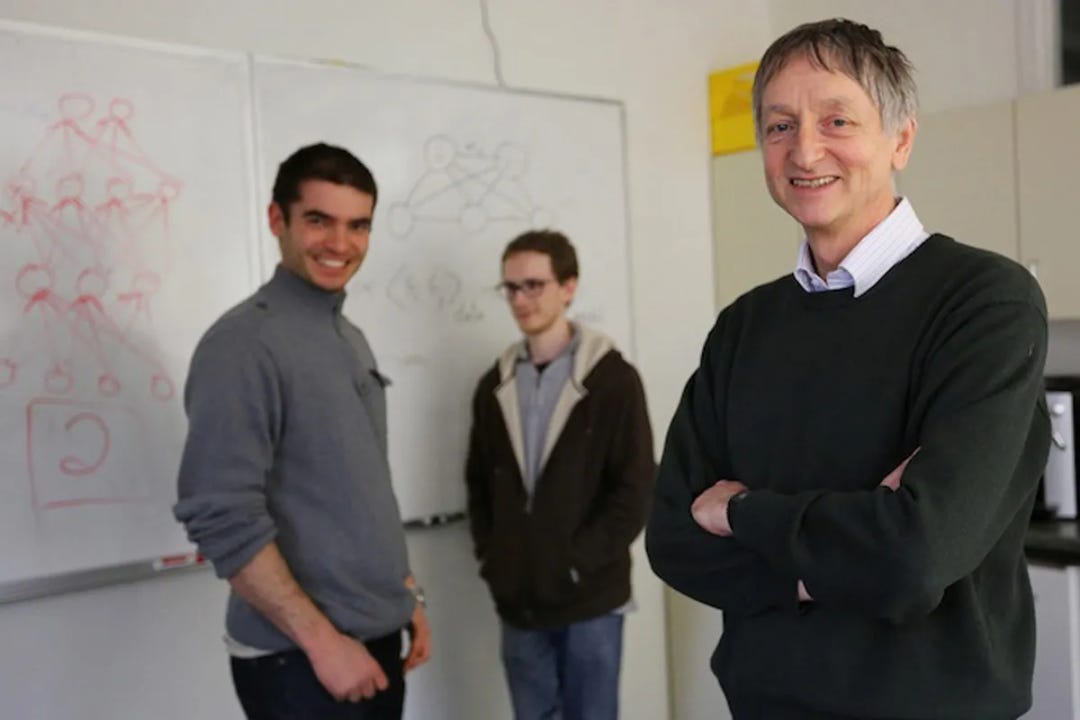

"Every second of this interview is gold." Recently, a video interview with Turing Award winner Geoffrey Hinton has received high praise online.

Hinton discussed large AI models, multimodal learning, digital computation, knowledge sharing, and whether intelligent systems can have feelings. He also talked about his collaborators and students.

Hinton believes large language models learn by finding common patterns across different domains. This lets them compress information and build a deep understanding. In the process, they can discover connections that humans have not yet noticed, which he sees as a source of creativity. He also argued that predicting the next symbol well requires reasoning. This runs against the common belief that large models cannot reason. In his view, reasoning ability improves as models get larger, an idea worth exploring further.

Recalling his work with his student Ilya Sutskever, Hinton praised Ilya's intuition. Ilya recognized the value of scaling models up early on, at a time when Hinton himself was unsure, and that intuition proved correct.

The interview covers a long stretch of Hinton's career. For background, here is a brief overview of it:

Born on December 6, 1947, in Wimbledon, UK.

Graduated from the University of Cambridge in 1970 with a bachelor's degree in experimental psychology.

From 1976 to 1978, served as a research fellow in the Cognitive Science Research Program at the University of Sussex.

Earned a Ph.D. in artificial intelligence from the University of Edinburgh in 1978.

From 1978 to 1980, served as a visiting scholar in the Cognitive Science Department at the University of California, San Diego.

From 1980 to 1982, worked as a scientific officer at the MRC Applied Psychology Unit in Cambridge, UK.

From 1982 to 1987, served as an assistant professor and then associate professor in the Computer Science Department at Carnegie Mellon University.

From 1987 to 1998, served as a professor in the Computer Science Department at the University of Toronto.

Elected a Fellow of the Royal Society of Canada in 1996.

Elected a Fellow of the Royal Society (UK) in 1998.

From 1998 to 2001, served as the founding director of the Gatsby Computational Neuroscience Unit at University College London.

From 2001 to 2014, served as a professor in the Computer Science Department at the University of Toronto.

Elected a Fellow of the Cognitive Science Society in 2003.

From 2013 to 2016, served as a Distinguished Researcher at Google.

From 2016 to 2023, served as a Vice President and Engineering Fellow at Google.

Resigned from Google in 2023.

Starting Point: Understanding How the Brain Works

Hinton: I remember when I first arrived at Carnegie Mellon University from the UK. At British research institutions, everyone would head to the pub at 6 PM. But at Carnegie Mellon, a few weeks after I arrived, it was a Saturday night, I had no friends yet and nothing to do, so I decided to go to the lab and do some programming, because I had a Lisp machine that couldn't be programmed from home. When I got there around 9 PM, the lab was full of students working. They believed their work would change the future of computer science. It was a refreshing change from the UK.

Hellermark: Let's go back to your time at Cambridge. What was it like trying to understand how the brain works back then?

Hinton: It was very disappointing. I was studying physiology, and in the summer term they taught us how neurons conduct action potentials. That was interesting, but it didn't explain how the brain works, so it was a real letdown. Then I switched to philosophy, hoping it would explain how the mind works, but that was disappointing too. Eventually I went to the University of Edinburgh to study AI, which was more interesting. At least there you could simulate things and test your theories.