Detailed Analysis of the Qwen2.5-Coder Technical Report

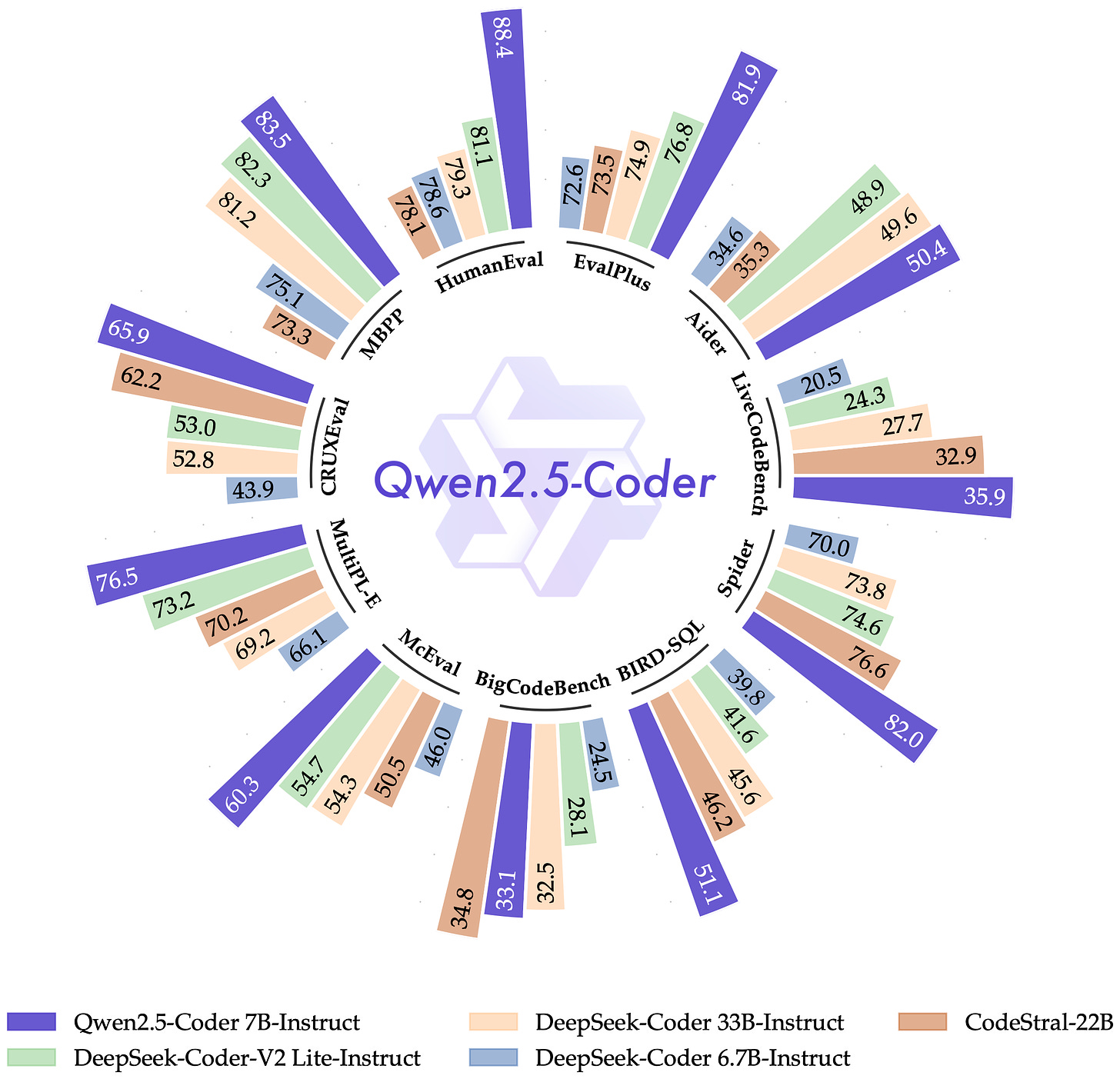

The Qwen2.5-Coder technical report covers the model's evolution, architecture, use of FIM (fill-in-the-middle) data, and results on multi-programming-language benchmarks, highlighting what sets it apart from earlier versions.

The Coder technical report differs noticeably in style from the Math report. The Math report follows the template of the Qwen and Qwen2 technical reports, listing authors alphabetically, whereas the Coder report more closely resembles DeepSeek's reports: it highlights the first author, and only Junyang Lin is marked as corresponding author, which makes it feel less "formal."

As with Math, the Code model has been renamed. Initially (in earlier reports and in version 1.5) it was called Code-Qwen; in version 2.5 it is named Qwen2.5-Coder, emphasizing that both the Math and Code models belong to the Qwen series. However, whereas Math-Qwen was simply reordered to Qwen-Math, Code-Qwen became "Coder," a name that better fits the "pair programming" scenarios mentioned in the 1.5 release.

The current release includes 1.5B and 7B models, with a 32B model coming soon. Unlike the Qwen and Math lines, there is no 72B model. I expect code models either to shrink over time, for specialized deployment and high-frequency use, or to have their capabilities merged into flagship general models such as GPT, Claude, or DeepSeek. Hence there is probably little need for a larger, dedicated code model.