LFM: The Liquid Neural Network That’s Finally Here – No Transformer Needed!

Liquid AI introduces LFM, a new multimodal AI model inspired by nematodes, achieving SOTA performance with lower memory usage in resource-constrained environments.

A new architecture inspired by nematodes delivers SOTA performance across three model sizes and can be deployed in resource-constrained environments.

Mobile robots may benefit more from a brain modeled after a worm.

Google’s groundbreaking 2017 paper, *Attention Is All You Need*, introduced the Transformer, which has since become the dominant architecture of the large-model era.

However, Liquid AI, a startup founded by former MIT CSAIL researchers, is taking a different approach.

Liquid AI aims to "explore methods beyond generative pre-trained Transformers (GPT) models."

To that end, Liquid AI has introduced its first series of multimodal AI models: Liquid Foundation Models (LFM).

These are next-generation generative AI models built from first principles, achieving SOTA performance at the 1B, 3B, and 40B parameter scales while using less memory and running inference more efficiently.

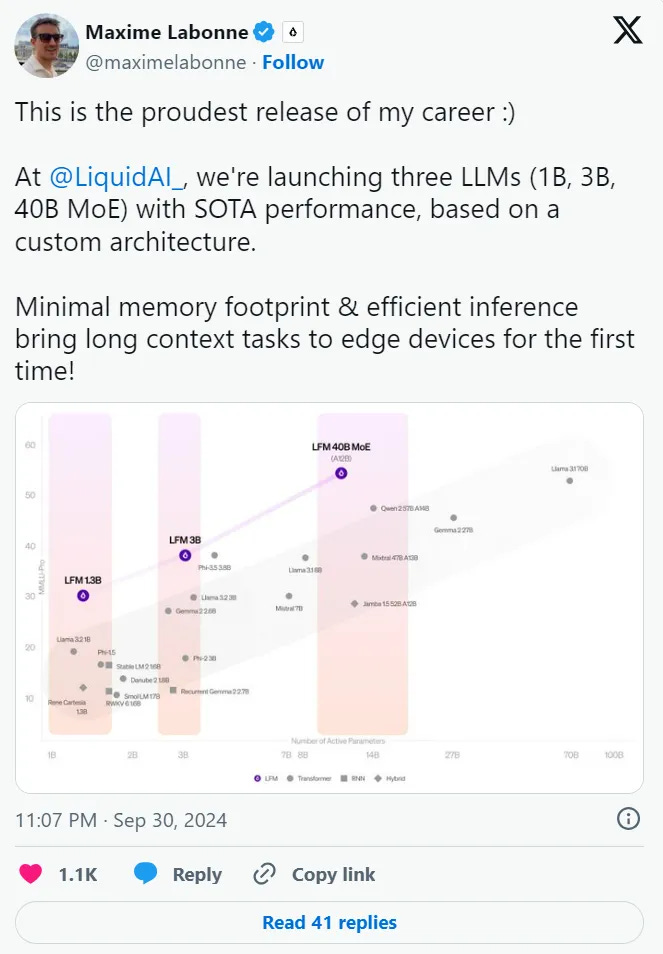

Maxime Labonne, Liquid AI’s Head of Post-Training, called LFM the work he is proudest of. Its core advantage is that it outperforms Transformer-based models while using less memory.

Some call LFM the "Transformer killer."

Others praise it as a game-changer.

Many believe it is time to "move beyond Transformers" and say the new architecture holds great promise.