Llama 3.2 Is Here! Now with Image Reasoning and Mobile Versions!

Discover the latest updates from Meta's Llama models! Llama 3.2 introduces powerful image reasoning capabilities and lightweight versions for mobile use. Explore new APIs and enhanced security measures.

This morning brought big news.

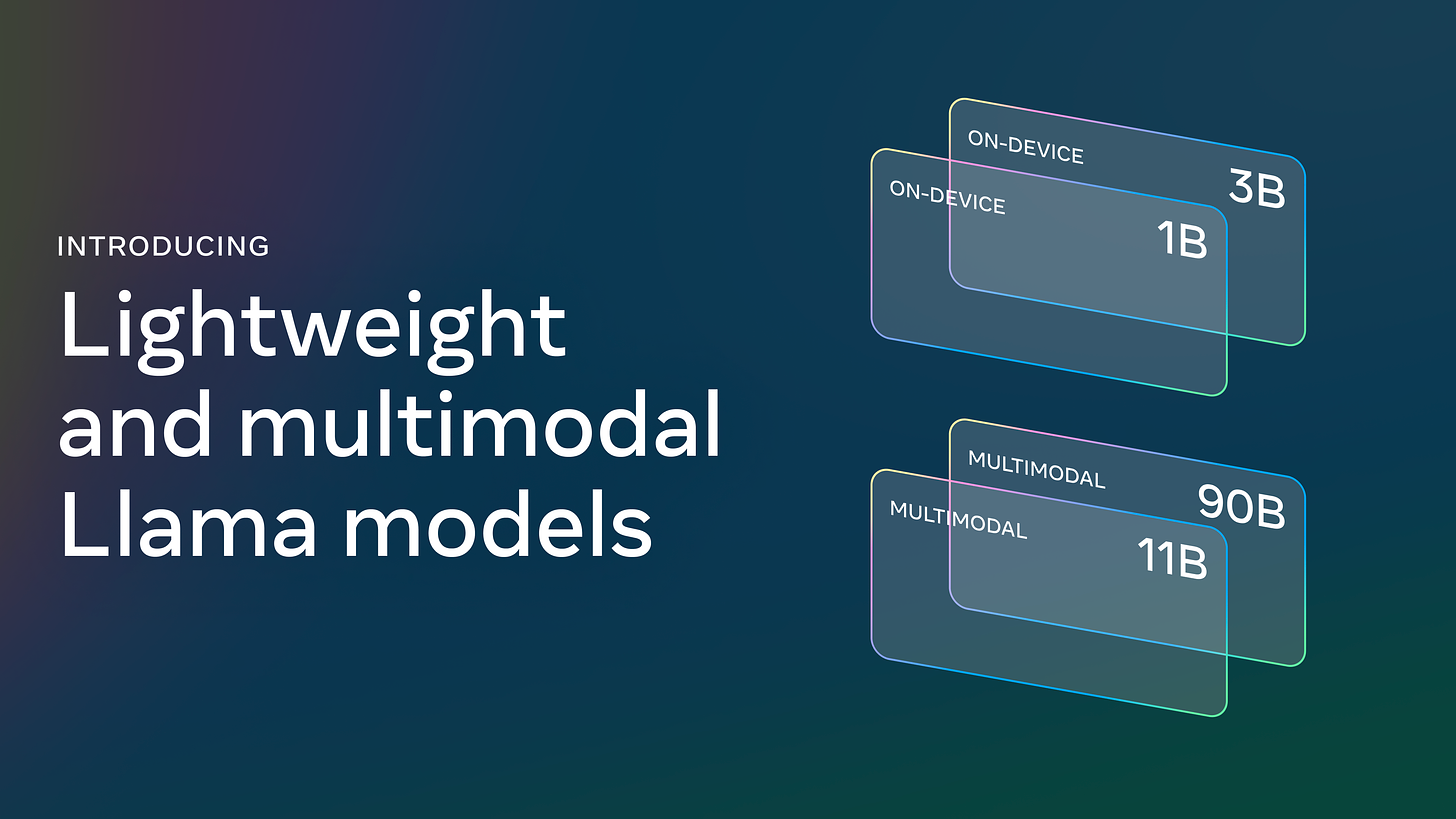

On one hand, OpenAI is facing more upheaval at the top. On the other, Meta, hailed as "True Open AI," has released significant updates to its Llama models: new Llama 3.2 11B and 90B models that support image reasoning, along with lightweight Llama 3.2 1B and 3B models for edge and mobile devices.

Additionally, Meta launched Llama Stack Distribution, which packages multiple API providers for easier tool and external model access.

They also announced new security measures.

True Open AI proves its worth! Users and businesses alike are excited and expressing their support.

It's only been two months since the release of Llama 3.1 on July 23.

Meta's Chief AI Scientist, Yann LeCun, joyfully exclaimed, "Good little llama!"

Meta reiterated its stance: "Open-source AI ensures these innovations benefit the global community. We will continue to push for open-source standards with Llama 3.2."

The Llama family was upgraded at today's Meta Connect 2024 event.

Now, we finally have lightweight LLMs (Llama 3.2 1B and 3B) that run locally on edge and mobile devices!

The small and medium models have also been significantly updated. They gained parameters because they can now process visual data, and their model cards now carry a Vision label.

Llama 3.1 8B upgrades to Llama 3.2 11B Vision

Llama 3.1 70B upgrades to Llama 3.2 90B Vision

In just a year and a half, the Llama models have achieved remarkable success.

Meta reports tenfold growth for Llama this year and describes it as a standard for "responsible innovation."