Mistral AI Releases Two Models: 7B Math Reasoning Model and Mamba2 Architecture Code Generation Model

Mistral AI launches Mathstral 7B, a powerful math reasoning model, and Codestral Mamba, a cutting-edge code generation model with Mamba2 architecture.

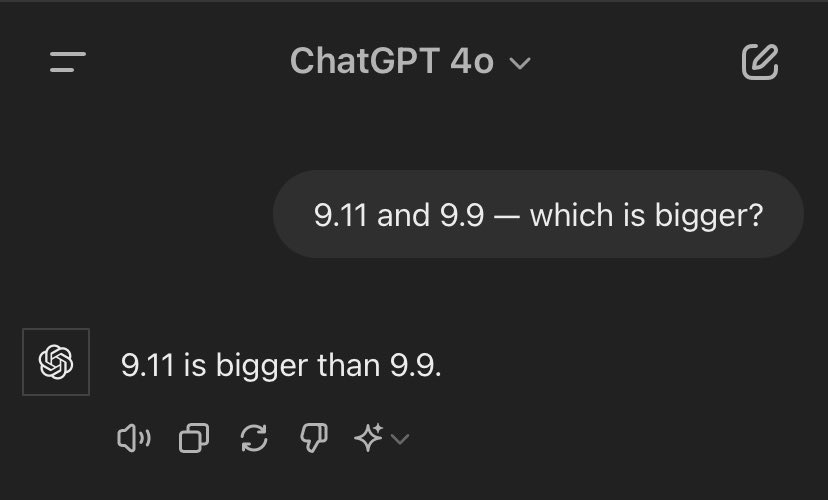

Curious Netizens Ask if Mathstral Can Solve "Which is Bigger, 9.11 or 9.9?"

Yesterday, a seemingly simple question made the rounds in the AI community: "Which is bigger, 9.11 or 9.9?" Even major language models such as OpenAI's GPT-4 and Google's Gemini got it wrong.

The incident shows that general-purpose large language models still struggle with some numerical problems that humans answer easily. For complex math problems, specialized models are a better fit.
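For reference, the arithmetic behind the question can be checked in a few lines. The sketch below compares the two values as decimal numbers, where 9.9 equals 9.90 and is therefore larger than 9.11. For contrast, it also compares them as dot-separated version numbers, a reading under which 9.11 comes after 9.9. The version-style reading is only an illustration of how the same strings can be ordered differently; it is an assumption, not an explanation given in this article, and the function names are hypothetical.

```python
from decimal import Decimal


def larger_as_decimal(a: str, b: str) -> str:
    """Return whichever string is larger when read as a decimal number."""
    return a if Decimal(a) > Decimal(b) else b


def larger_as_version(a: str, b: str) -> str:
    """Return whichever string is larger when read as a dotted version number.

    Illustrative assumption only: each dot-separated part is compared as an
    integer, so "9.11" is read as (9, 11) and "9.9" as (9, 9).
    """
    def key(s: str) -> tuple:
        return tuple(int(part) for part in s.split("."))

    return a if key(a) > key(b) else b


print(larger_as_decimal("9.11", "9.9"))  # 9.9
print(larger_as_version("9.11", "9.9"))  # 9.11
```

The numeric comparison is the one the question asks for, so the correct answer is 9.9.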

Today, the French AI unicorn Mistral AI released "Mathstral," a 7B model focused on mathematical reasoning and scientific discovery, designed for complex, multi-step logic problems.

Built on Mistral 7B, Mathstral supports a 32k context window and is released under the Apache 2.0 license. It aims to balance performance and speed, and it can be fine-tuned further.

Mathstral is an instruction-tuned model that can be used as is or fine-tuned. Its weights are available on Hugging Face:

https://huggingface.co/mistralai/mathstral-7B-v0.1
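As a rough illustration of using the published weights, the sketch below loads the model with the Hugging Face `transformers` library and asks it one question. It assumes `transformers` and `torch` are installed, that the repository ships a chat template for its tokenizer, and that enough memory is available for a 7B model. The helper names `build_messages` and `generate_answer` are hypothetical, and the generation settings are arbitrary choices rather than recommended values.

```python
# Repository id taken from the URL above.
MODEL_ID = "mistralai/mathstral-7B-v0.1"


def build_messages(question: str) -> list:
    """Wrap a question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 256) -> str:
    """Download the weights (on first call) and generate one answer.

    Imports are kept inside the function so that the module can be imported
    without transformers installed; this is a convenience for the sketch.
    """
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Assumption: the tokenizer provides a chat template for this model.
    input_ids = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_tensors="pt",
    )
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_answer("Which is bigger, 9.11 or 9.9?")` triggers a multi-gigabyte download the first time it runs, so it is left out of the block itself.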

The image below shows MMLU performance differences between Mathstral 7B and Mistral 7B by subject.