Mistral Pixtral 12B: Comprehensive Guide to Local Deployment, Image Analysis, and OCR

Learn how to locally install the Pixtral 12B model, explore its image analysis and OCR capabilities, and test its performance with various images.

In this article, I’ll show you how to install the Pixtral model locally and test it with various images.

I’ll also introduce some amazing features of this model, which was developed by the French company Mistral.

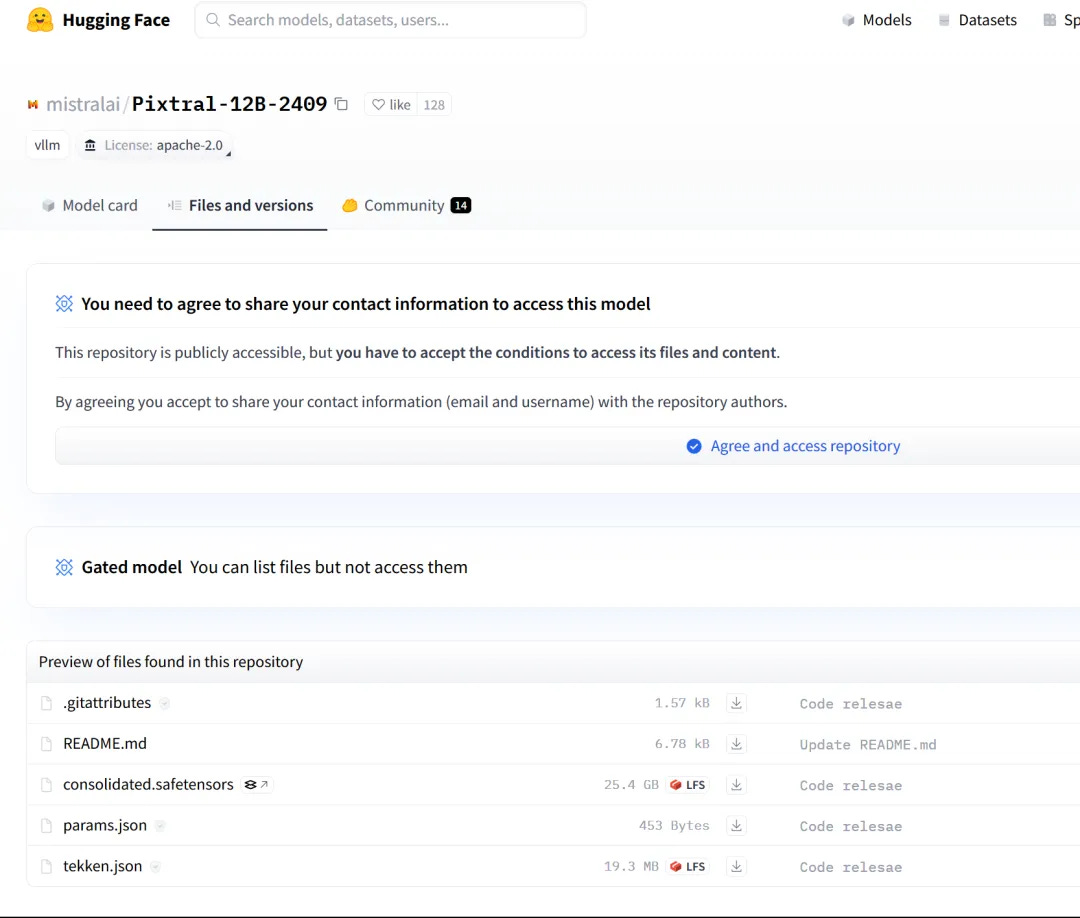

Before that, let me show you the Pixtral model page on Hugging Face.

Why is it so special?

Mistral is already well known for the quality of its open-source models, and Pixtral 12B (12 billion parameters) is its first multimodal model.

This model understands both images and text, accepts images at various resolutions, and can process long documents that mix images and text.

It also has a 128k-token context window and is open-source, with weights released under the Apache 2.0 license. I’ll include the link to the model card in the description.

According to Mistral’s benchmark results, this model outperforms open-source models such as Phi-3 Vision and LLaVA (7B parameters), as well as the closed-source Claude 3 Haiku.

However, most of these comparisons are against models with around 7 billion parameters, while Pixtral has 12 billion. So I take these benchmarks with caution, and we will run our own tests.