NVIDIA Open-Sources Large Model Alignment Framework - NeMo-Aligner

Unlocking the Future: Introducing NeMo-Aligner for Enhanced Model Alignment

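Large language models are now widely used, but their outputs are not always safe or reliable. Aligning them is also hard in practice: the most capable models often have tens or hundreds of billions of parameters, and building alignment tooling that runs efficiently at that scale is challenging.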

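To address this, NVIDIA built NeMo-Aligner, a toolkit for efficient model alignment that can scale training to hundreds of GPUs. It provides implementations of major alignment techniques, including Reinforcement Learning from Human Feedback (RLHF), Direct Preference Optimization (DPO), SteerLM, and Self-Play Fine-Tuning (SPIN). These techniques help developers make their models safer, more helpful, and more stable.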

Open-source repository: https://github.com/nvidia/nemo-aligner

Research paper: https://arxiv.org/abs/2405.01481v1

Here are two commonly used and effective model alignment methods in NeMo-Aligner:

RLHF

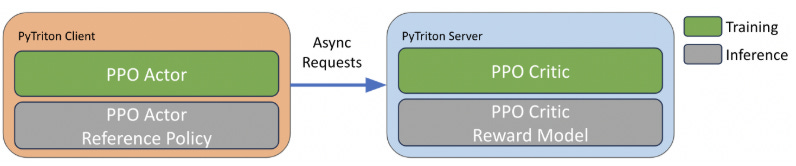

RLHF is a core module in NeMo-Aligner. It uses human feedback to guide large models so that the text they generate better matches human values and preferences. NeMo-Aligner's RLHF pipeline uses the Proximal Policy Optimization (PPO) algorithm to optimize the language model's behavior.
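To make the PPO step more concrete, here is a minimal pure-Python sketch of two computations commonly used in RLHF with PPO: the reward fed to PPO (the reward-model score minus a KL penalty that keeps the policy close to the SFT model) and PPO's clipped surrogate loss. The function names and the default values `beta=0.1` and `eps=0.2` are illustrative assumptions, not NeMo-Aligner's API or defaults; a real implementation works on batched tensors.

```python
import math


def kl_shaped_reward(rm_score, logp_policy, logp_ref, beta=0.1):
    """Reward-model score minus a KL penalty toward the reference (SFT) model.

    logp_policy / logp_ref are per-token log-probabilities of the sampled
    response; summing their difference gives a sample estimate of
    KL(policy || reference) for that response. (Hypothetical helper.)
    """
    kl_estimate = sum(p - r for p, r in zip(logp_policy, logp_ref))
    return rm_score - beta * kl_estimate


def ppo_clip_loss(logp_new, logp_old, advantages, eps=0.2):
    """Negative PPO clipped surrogate objective, averaged over tokens."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)                    # pi_new / pi_old
        clipped = max(min(ratio, 1 + eps), 1 - eps)  # clip ratio to [1-eps, 1+eps]
        total += min(ratio * adv, clipped * adv)     # pessimistic (lower) bound
    return -total / len(advantages)                  # minimize the negative objective


print(kl_shaped_reward(2.0, [-1.0, -0.5], [-1.2, -0.7]))
print(ppo_clip_loss([1.0], [0.0], [1.0]))
```

Taking the `min` of the clipped and unclipped terms makes the objective pessimistic: the policy gains nothing from moving its probability ratio beyond `1 ± eps` when the advantage is positive, which keeps each update close to the previous policy.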

Training has three main steps:

1. Supervised fine-tuning (SFT) of a pretrained base model. This ensures the model can follow instructions and generate responses to them.

2. Training a reward model on human preference data. The reward model assigns a score to each response, predicting how well it matches human preferences.

3. Policy optimization with the PPO algorithm, using the trained reward model to score the policy's responses.
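Step 2 is often implemented with a pairwise (Bradley-Terry) loss: for each prompt, the preference data gives a chosen and a rejected response, and the reward model is trained so the chosen one scores higher. The sketch below shows that loss in plain Python under this assumption; the helper names are hypothetical, and NeMo-Aligner's actual reward-model training code may differ in details.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def pairwise_reward_loss(chosen_scores, rejected_scores):
    """Bradley-Terry loss: mean of -log sigmoid(r_chosen - r_rejected)."""
    losses = [-log_sigmoid(c - r) for c, r in zip(chosen_scores, rejected_scores)]
    return sum(losses) / len(losses)


# A wider margin between chosen and rejected scores gives a lower loss.
print(pairwise_reward_loss([1.0], [0.0]))
print(pairwise_reward_loss([3.0], [0.0]))
```

Only the score difference matters to this loss, so the reward model learns a relative ranking; this is also why the RLHF stage typically adds a KL penalty rather than relying on the raw score's absolute scale.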

SteerLM

SteerLM achieves alignment mainly by steering the model's generation process. It uses a "guiding signal" strategy: developers inject the desired output characteristics into the model's training data, which guides the model to generate responses that better match expectations.

First, prepare a dataset of input prompts and expected outputs. The input prompts can be instructions or questions from users; the expected outputs are the responses the model should learn to generate.

Next, generate guiding signals from the input prompts and expected outputs. Guiding signals can be produced in different ways, for example with rule-based policies or other heuristic methods. They can control the style, topic, sentiment, and other attributes of the generated text.
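As an illustration of the rule-based option, the sketch below derives a guiding signal from each expected output with simple heuristics (a word-count verbosity level and a keyword-based sentiment label) and prepends it to the prompt to form a conditioned training example. Everything here is hypothetical: the word lists, the 0-4 verbosity scale, and the `<signal>` tag format are assumptions for illustration only. NVIDIA's SteerLM work labels attributes with a trained attribute-prediction model rather than keyword rules.

```python
# Hypothetical keyword lists for a crude sentiment heuristic.
POSITIVE_WORDS = {"great", "good", "helpful", "glad", "happy"}
NEGATIVE_WORDS = {"bad", "wrong", "sorry", "unfortunately", "fail"}


def guiding_signal(response: str) -> dict:
    """Rule-based attribute labels for one expected output (illustrative)."""
    words = [w.strip(".,!?").lower() for w in response.split()]
    verbosity = min(len(words) // 25, 4)  # one level per 25 words, capped at 4
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    if pos > neg:
        sentiment = "positive"
    elif neg > pos:
        sentiment = "negative"
    else:
        sentiment = "neutral"
    return {"verbosity": verbosity, "sentiment": sentiment}


def format_signal(signal: dict) -> str:
    """Serialize the signal deterministically, e.g. 'sentiment:positive,verbosity:0'."""
    return ",".join(f"{k}:{v}" for k, v in sorted(signal.items()))


def build_training_example(prompt: str, response: str) -> dict:
    """Prepend the guiding signal to the prompt; the target stays unchanged."""
    signal = guiding_signal(response)
    conditioned = f"<signal>{format_signal(signal)}</signal>\n{prompt}"
    return {"input": conditioned, "output": response}


example = build_training_example("Summarize X.", "This is a great, helpful summary.")
print(example["input"])
```

Because the signal describes the target response, the model learns to associate each attribute value with the corresponding output style, which is what later allows those attributes to be requested explicitly.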

For example, in conversational AI, you can guide the model toward the answers users want.

For text summarization, you can guide the model to produce summaries that are accurate and more informative.

For translation, you can guide the model toward more accurate and fluent translations.
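If a model has been trained on prompts prefixed with a guiding signal, one model can serve all three use cases above at inference time by changing only the requested signal. The sketch below maps each task to target attribute values and builds the steered prompt. The task names, attribute names, the 0-4 scale, and the `<signal>` tag are hypothetical assumptions; in practice they must match exactly the format the model was trained on.

```python
# Hypothetical per-task target attributes on an assumed 0-4 scale.
TASK_SIGNALS = {
    "dialogue": {"helpfulness": 4},
    "summarization": {"correctness": 4, "informativeness": 4},
    "translation": {"correctness": 4, "fluency": 4},
}


def steered_prompt(task: str, user_input: str) -> str:
    """Prefix the user input with the target signal for the given task."""
    signal = TASK_SIGNALS[task]
    tags = ",".join(f"{k}:{v}" for k, v in sorted(signal.items()))
    return f"<signal>{tags}</signal>\n{user_input}"


print(steered_prompt("translation", "Bonjour"))
```

Keeping the attribute targets in a table rather than baking them into the model makes the behavior adjustable per request, for example lowering a verbosity-style attribute for short answers without retraining.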