OmAgent: The Open-Source Alternative to NVIDIA AI Blueprint, Now "Watching" Videos Like Humans

Discover how NVIDIA AI Blueprint and OmAgent empower AI to understand videos, enabling precise Q&A and real-world applications for smarter device interaction.

When you're watching a thrilling action series, you might suddenly wonder:

“Which episode did that character say that line in?”

“What’s the background music here?”

Or during a football match, you miss a decisive goal and want to replay it. Tracking down moments like these by hand means scrubbing through footage, which can be incredibly time-consuming.

AI, however, can act as the eyes and brain of a machine, letting it understand videos and follow storylines. For everyday users, this not only makes search faster but also expands how we interact with the digital world.

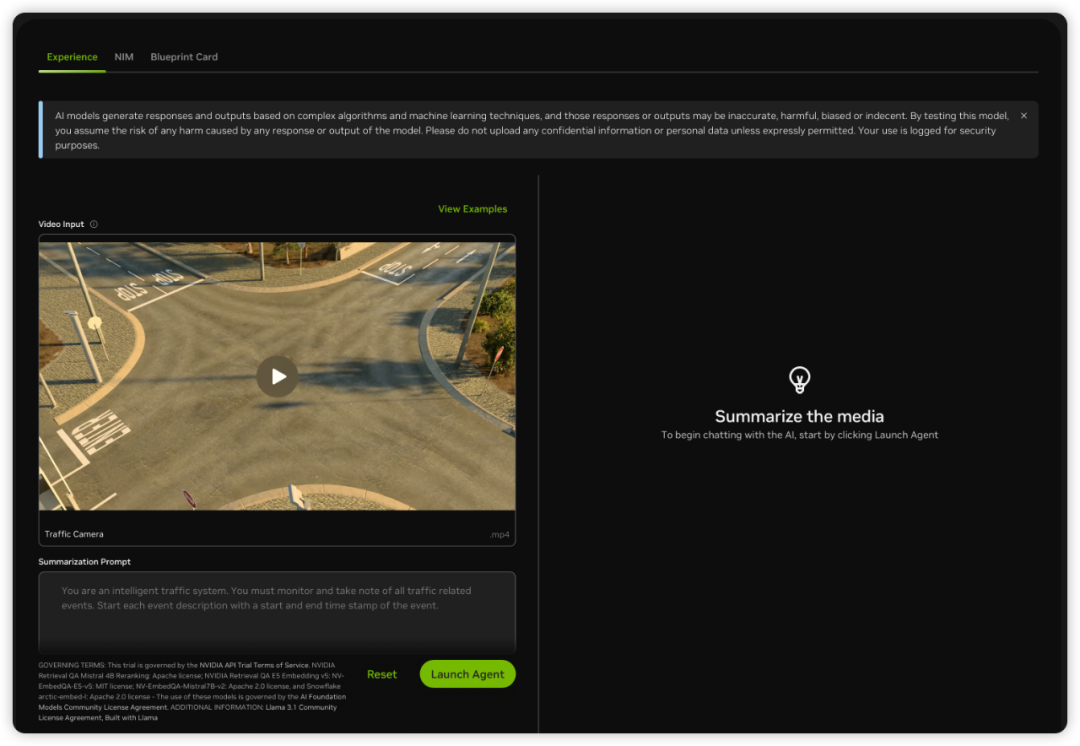

The newly launched NVIDIA AI Blueprint aims to solve this problem. It is a pre-trained, customizable AI workflow that gives developers a complete solution for building and deploying generative AI applications for common use cases.

For example, in NVIDIA’s demo interface, you can select one of three video clips and ask content-related questions.

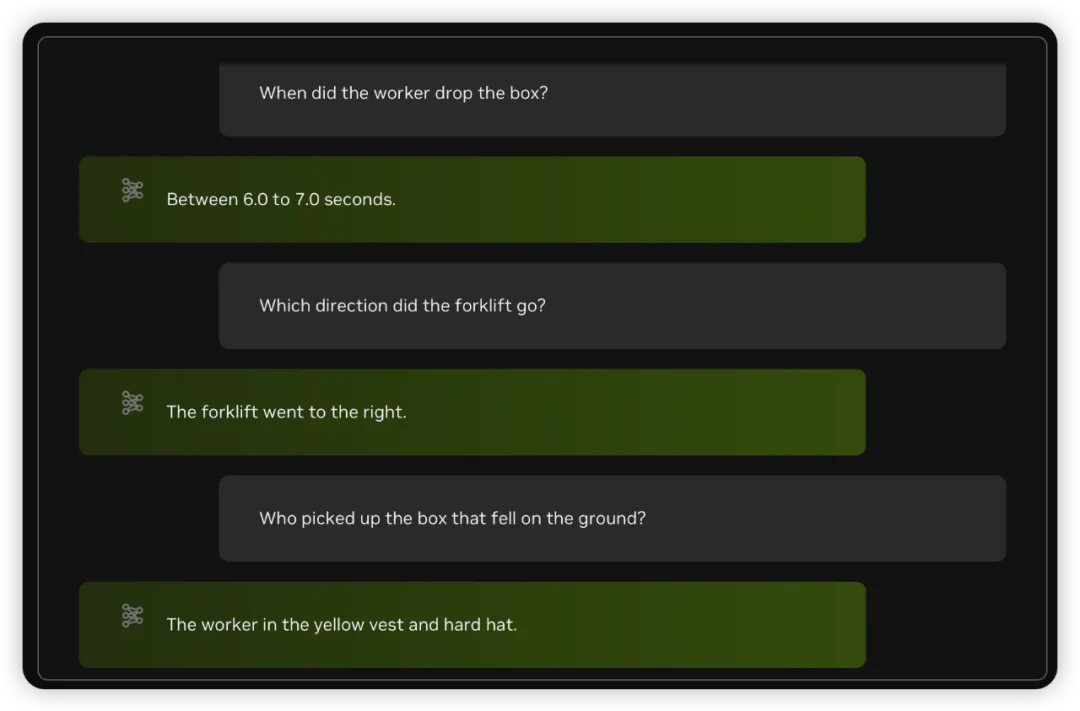

After several tests, we found that Blueprint performs reasonably well on video-based Q&A, particularly on questions about when an event happened or what state an object is in.

For example, when we asked “When did the worker drop the box?”, Blueprint correctly identified the time range.

For questions about continuous processes, such as “Which direction did the forklift go?”, Blueprint also answered accurately.

However, on fine-grained questions such as “Who picked up the box that fell on the ground?”, Blueprint answered incorrectly.

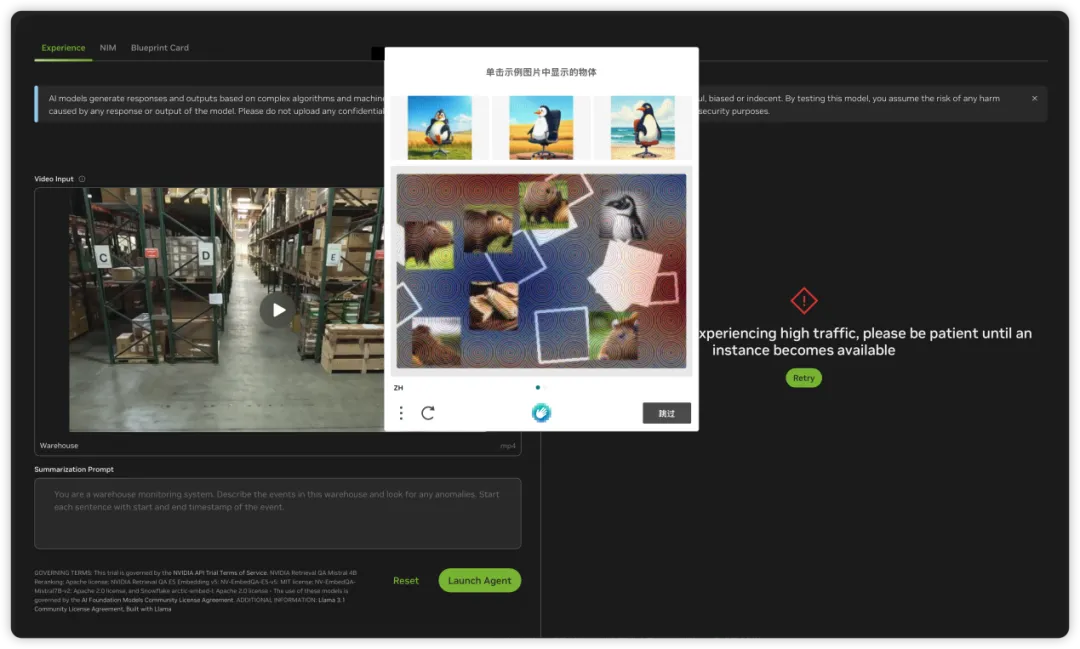

One major downside was that we repeatedly ran into traffic limits and verification checks during the trial, which made the experience far from smooth.

Blueprint is also still in early access: you have to apply before you can use it, so there is no quick way to get started.