OmniGen Unifies Image Generation with a Highly Simplified and User-Friendly Architecture

OmniGen unifies image generation tasks into a simplified, user-friendly model, supporting text-to-image, editing, and more without additional plugins.

The emergence of large language models (LLMs) has unified language generation tasks and revolutionized human-computer interaction.

However, in the field of image generation, a unified model capable of handling various tasks within a single framework has largely remained unexplored.

Recently, Zhiyuan released a new diffusion model architecture called OmniGen, a novel multimodal model for unified image generation.

OmniGen has the following features:

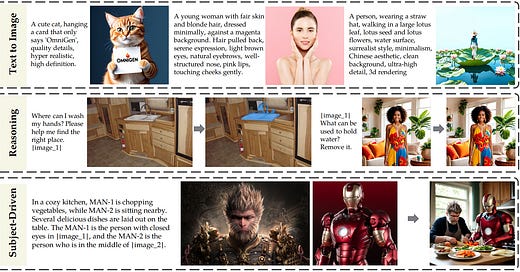

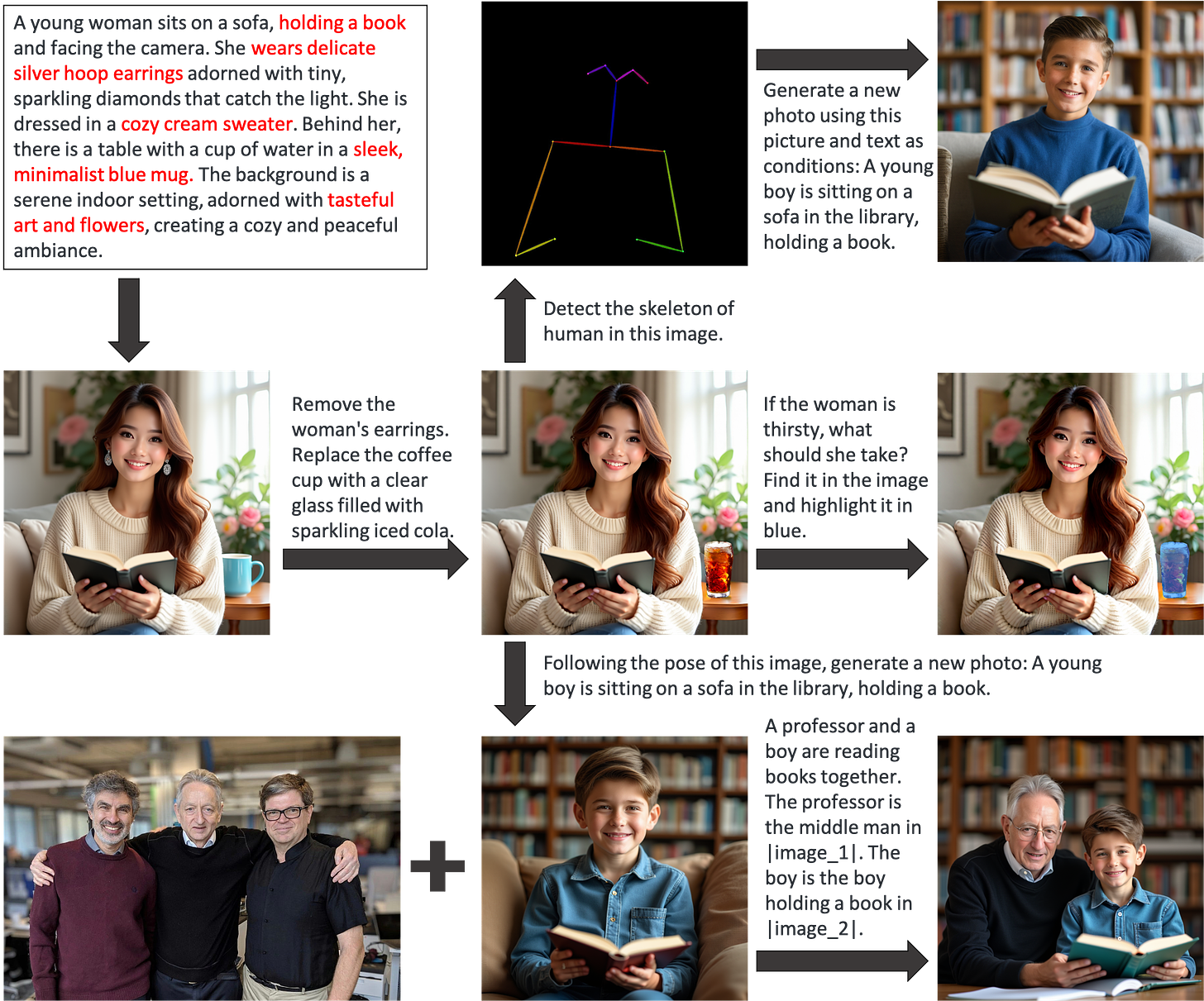

Unification: OmniGen natively supports various image generation tasks, such as text-to-image, image editing, theme-driven generation, and visually conditioned generation. Additionally, OmniGen can handle classical computer vision tasks, transforming them into image generation tasks.

Simplicity: OmniGen's architecture is highly simplified. Compared to existing models, it is more user-friendly, allowing complex tasks to be performed via instructions without lengthy processing steps and additional modules (e.g., ControlNet or IP-Adapter), significantly simplifying workflows.

Knowledge Transfer: Benefiting from learning in a unified format, OmniGen effectively transfers knowledge across different tasks, handles unseen tasks and domains, and exhibits novel functionalities. We also explore the model's potential applications of reasoning ability and chain-of-thought mechanisms in the field of image generation.

Introduction

In recent years, many text-to-image models have stood out in the wave of generative AI.

However, these impressive proprietary models can only generate images based on text.

When users require more flexible, complex, and detailed image generation, additional plugins and operations are often needed.