Open Source Today (2024-07-11): NuminaMath 7B TIR, AI Math Olympiad Winner

Explore award-winning AI models and tools like NuminaMath 7B TIR, MobileLLM, Paints-UNDO, and more for advanced problem-solving and creative tasks.

Today I am sharing several interesting open-source AI models and frameworks.

Project: NuminaMath 7B TIR

NuminaMath 7B TIR is a language model trained to solve math problems. It won the first progress prize of the AI Math Olympiad (AIMO), scoring 29/50 on both the public and private test sets.

The model is based on deepseek-math-7b-base and was fine-tuned in two stages. First, it was fine-tuned on a dataset of math problems and their solutions. Then, it was fine-tuned on a synthetic dataset of multi-step tool-integrated reasoning (TIR) examples. This allows the model to combine natural language reasoning with Python REPL calculations when solving math problems.
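As a rough illustration of how reasoning and Python execution can alternate, here is a minimal sketch of such a loop. It is not the NuminaMath inference code: the `[python]` and `[output]` markers, the `solve_with_tools` helper, and the `fake_model` stand-in are all hypothetical, and the code runs without any sandboxing.

```python
import contextlib
import io
import re

# Hypothetical markers for code the model wants executed (an assumption,
# not the model's real output format).
CODE_BLOCK = re.compile(r"\[python\]\n(.*?)\[/python\]", re.DOTALL)


def run_python(code: str) -> str:
    """Execute code and return what it printed (no sandboxing; sketch only)."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})
    return buffer.getvalue().strip()


def solve_with_tools(generate, problem: str, max_rounds: int = 4) -> str:
    """Alternate between model text and executed code until no code is emitted."""
    transcript = problem
    for _ in range(max_rounds):
        reply = generate(transcript)
        transcript += "\n" + reply
        blocks = CODE_BLOCK.findall(reply)
        if not blocks:
            break  # plain-language reply: treat it as the final answer
        output = run_python(blocks[-1])
        # Feed the execution result back so the next reply can use it.
        transcript += "\n[output]\n" + output + "\n[/output]"
    return transcript


def fake_model(transcript: str) -> str:
    """Hypothetical stand-in for the LLM: writes code first, then reads the result."""
    if "[output]" not in transcript:
        return "Let me compute the sum.\n[python]\nprint(sum(range(1, 101)))\n[/python]"
    return "The answer is 5050."


print(solve_with_tools(fake_model, "What is 1 + 2 + ... + 100?"))
```

In a real setup, `generate` would call the model, and the executed code would need to be isolated from the host system.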

https://huggingface.co/AI-MO/NuminaMath-7B-TIR

Project: MobileLLM

MobileLLM is a sub-billion parameter language model optimized for on-device use, developed by Meta.

The model uses SwiGLU activation functions, a deep and thin architecture, embedding sharing, and grouped-query attention. Together, these design choices yield a high-quality language model with fewer than a billion parameters.
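To make three of these ideas concrete, here is a small pure-Python sketch. It is not taken from the MobileLLM code base: the function names are hypothetical, the sizes are illustrative rather than the paper's configuration, and only the gating part of a SwiGLU feed-forward layer is shown (the final down projection is omitted).

```python
import math


def swiglu(x, w_gate, w_up):
    """SwiGLU gate for one token: Swish(x @ w_gate) * (x @ w_up), elementwise."""
    def matvec(vec, mat):
        # mat is a list of rows with shape [len(vec)][hidden]
        return [sum(v * row[j] for v, row in zip(vec, mat)) for j in range(len(mat[0]))]

    def swish(z):
        # Swish(z) = z * sigmoid(z)
        return z / (1.0 + math.exp(-z))

    gate = matvec(x, w_gate)
    up = matvec(x, w_up)
    return [swish(g) * u for g, u in zip(gate, up)]


def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Grouped-query attention: consecutive query heads share one key/value head."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def embedding_params(vocab_size, dim, shared):
    """Input and output embeddings cost vocab_size * dim each; sharing keeps one copy."""
    return vocab_size * dim * (1 if shared else 2)


# With 8 query heads and 2 key/value heads, each KV head serves 4 query heads.
print([kv_head_for(h, 8, 2) for h in range(8)])
# Illustrative sizes: sharing halves the embedding parameter count.
print(embedding_params(32000, 512, shared=False), embedding_params(32000, 512, shared=True))
```

For a model this small, the embedding tables are a large share of the total parameters, which is why storing a single copy matters.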

MobileLLM-125M and MobileLLM-350M improve accuracy on zero-shot commonsense reasoning tasks by 2.7% and 4.3%, respectively, over the previous best models of the same size.

https://arxiv.org/abs/2402.14905

https://github.com/facebookresearch/MobileLLM

Project: Paints-UNDO

Paints-UNDO aims to provide base models of human drawing behaviors in digital paintings. The goal is for future AI models to better align with the real needs of human artists.

The project takes an image as input and outputs the drawing sequence of that image. It simulates various human drawing behaviors like sketching, coloring, shading, and transforming.

https://github.com/lllyasviel/Paints-UNDO

Project: ebook-to-chatml-conversion

ebook-to-chatml-conversion is a tool for creating multi-turn conversation datasets. It converts eBooks (.txt or .epub) into ChatML formatted group chats.
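For readers unfamiliar with ChatML, the sketch below shows what a multi-speaker conversation might look like in that format. It is only an illustration: the `to_chatml` helper is hypothetical, and using character names in the role position is an assumption about how a group chat could be encoded, not a description of the tool's actual output.

```python
def to_chatml(messages):
    """Render (speaker, text) pairs as ChatML turns."""
    turns = [f"<|im_start|>{speaker}\n{text}<|im_end|>" for speaker, text in messages]
    return "\n".join(turns)


# Hypothetical group chat extracted from a book scene.
chat = [
    ("system", "Group chat between characters from a novel."),
    ("Alice", "Did you hear the news?"),
    ("Bob", "No, what happened?"),
]
print(to_chatml(chat))
```

Each turn is wrapped in `<|im_start|>` and `<|im_end|>` tokens, with the speaker on the first line and the message on the following lines.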

The script relies on koboldcpp for GBNF grammar processing and uses the Alpaca format for all prompts, which are defined in prompts.py.

The script works well with 7B models but requires some editing.

You can set the context size in config.yaml (for example 4096, 8192, or even 32K), but a context of 8192 or more is recommended.

https://github.com/statchamber/ebook-to-chatml-conversion

Project: LLM Structured Output Benchmarks

LLM Structured Output Benchmarks is an open-source project that benchmarks different LLM structured output frameworks.

The project covers tasks like multi-label classification, named entity recognition, and synthetic data generation. It evaluates the reliability and latency of each framework.
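A minimal sketch of how reliability and latency could be measured for one framework is shown below. It does not reproduce the project's harness: the `benchmark` function, the `fake_framework` stand-in, and the definition of reliability as the fraction of calls that return valid JSON with the expected keys are all assumptions made for illustration.

```python
import json
import statistics
import time


def benchmark(framework_call, prompts, required_keys):
    """Return (reliability, median latency in seconds) for one framework."""
    successes = 0
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        try:
            parsed = json.loads(framework_call(prompt))
            if isinstance(parsed, dict) and set(required_keys).issubset(parsed):
                successes += 1
        except Exception:
            pass  # exceptions and invalid JSON both count against reliability
        latencies.append(time.perf_counter() - start)
    return successes / len(prompts), statistics.median(latencies)


def fake_framework(prompt):
    """Hypothetical stand-in: returns valid JSON except for one prompt."""
    if "broken" in prompt:
        return "not json"
    return json.dumps({"labels": ["billing"]})


reliability, latency = benchmark(
    fake_framework, ["refund request", "broken", "login issue", "invoice"], {"labels"}
)
print(f"reliability={reliability:.2f}, median latency={latency:.6f}s")
```

Swapping `fake_framework` for a function that calls a real structured output library would let the same loop compare several frameworks on the same prompts.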

https://github.com/stephenleo/llm-structured-output-benchmarks

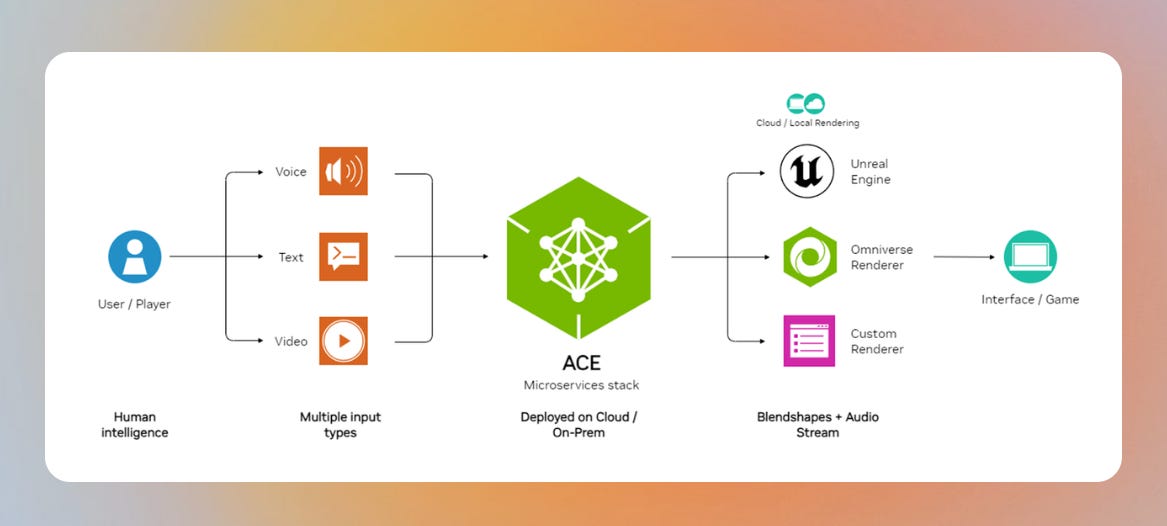

Project: ACE

NVIDIA ACE is a set of technologies to help developers use generative AI to create digital humans.

ACE NIMs are microservices designed to run in the cloud or on a PC.

In this GitHub repository, you can find samples and reference applications using ACE NIMs and microservices.

These microservices are available under an evaluation license through NVIDIA AI Enterprise (NVAIE) on NGC.

Great tools!