Open Source Today (2024-07-19): Mistral Collaborates with NVIDIA to Open-Source a 12B Model

Explore cutting-edge AI models like Mistral-Nemo and DeepSeek-V2, designed for advanced reasoning and chatbot performance. Discover more on Hugging Face!

Today I'll share some interesting open-source AI models and frameworks.

Project: Mistral Nemo

Mistral-Nemo, trained jointly by Mistral AI and NVIDIA, is a 12B-parameter large language model with a 128K-token context window. It is available in Base and Instruct versions.

According to Mistral AI, Mistral-Nemo leads models of a similar size in reasoning, world knowledge, and coding accuracy.

https://huggingface.co/mistralai/Mistral-Nemo-Base-2407

https://huggingface.co/mistralai/Mistral-Nemo-Instruct-2407

Project: DeepSeek-V2/DeepSeek-V2-Chat-0628

DeepSeek-V2-Chat-0628 is an improved version of DeepSeek-V2-Chat. At the time of writing, it ranks above all other open-source models on the LMSYS Chatbot Arena leaderboard.

DeepSeek-V2 is an open-source Mixture-of-Experts (MoE) model from the DeepSeek team. It has 236B total parameters, of which 21B are activated for each token, and supports a context length of 128K tokens.
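To illustrate what "activating 21B per token" means: in an MoE layer, a router scores each token against every expert and runs only the top-k, so most parameters sit idle for any given token (here 21B / 236B ≈ 8.9%). The following is a minimal sketch of generic top-k routing in plain Python with toy experts. It is not DeepSeek-V2's implementation (its DeepSeekMoE layers add shared experts and other refinements), and the names `moe_forward`, `experts`, and `gate_weights` are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, experts, gate_weights, k=2):
    """Route one token through the top-k experts of a toy MoE layer.

    token:        hidden vector (list of floats)
    experts:      callables mapping a vector to a vector of the same size
    gate_weights: one router weight vector per expert (score = dot product)
    Returns the combined output and the indices of the experts that ran.
    """
    scores = [sum(t * w for t, w in zip(token, ws)) for ws in gate_weights]
    probs = softmax(scores)
    # Select the k highest-probability experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(token)
    for i in top:
        weight = probs[i] / norm  # renormalize gate values over the selected experts
        out = [o + weight * y for o, y in zip(out, experts[i](token))]
    return out, top


# Four toy experts that just scale their input; only two run per token.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, used = moe_forward([2.0, 1.0], experts, gate_weights, k=2)
print(used, [round(x, 4) for x in out])  # [0, 1] [2.5379, 1.2689]
print(f"{21 / 236:.1%}")  # 8.9%, the active-parameter share quoted above
```

Whether the selected gate values are renormalized, and how many experts are selected, varies between MoE designs; the sketch simply picks one option.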

https://huggingface.co/deepseek-ai/DeepSeek-V2

https://github.com/deepseek-ai/DeepSeek-V2/blob/main/deepseek-v2-tech-report.pdf

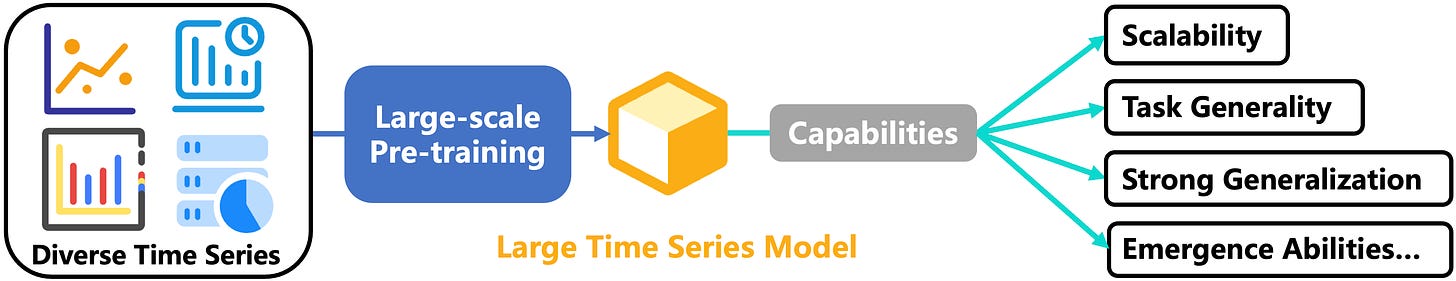

Project: Timer

Timer is a generative pre-trained Transformer model for general time series analysis.

The project provides official code, datasets, and checkpoints. By pre-training on large-scale time series data, it aims to support zero-shot forecasting, data imputation, and anomaly detection.
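Roughly speaking, generative pre-training for time series means next-token prediction where each token is a segment (patch) of a series, so forecasting can be done by rolling the model forward one patch at a time. The following minimal sketch shows that autoregressive loop under stated assumptions: the patch length, the helper names, and `linear_trend_model` (a toy stand-in for a trained network) are all hypothetical and are not Timer's code or API.

```python
from typing import Callable, List

Patch = List[float]


def to_patches(series: List[float], patch_len: int) -> List[Patch]:
    """Split a series into consecutive, non-overlapping patches (the model's tokens).
    A ragged tail shorter than patch_len is dropped."""
    n = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n)]


def autoregressive_forecast(context: List[float], horizon: int, patch_len: int,
                            next_patch: Callable[[List[Patch]], Patch]) -> List[float]:
    """Forecast `horizon` points by predicting one patch at a time and
    feeding each prediction back in as context (next-token generation)."""
    tokens = to_patches(context, patch_len)
    forecast: List[float] = []
    while len(forecast) < horizon:
        patch = next_patch(tokens)  # a pre-trained decoder-only model would go here
        tokens.append(patch)
        forecast.extend(patch)
    return forecast[:horizon]


def linear_trend_model(tokens: List[Patch]) -> Patch:
    """Hypothetical stand-in for a trained model: repeat the last patch,
    shifted by the change between the last two patches (needs >= 2 patches)."""
    step = tokens[-1][-1] - tokens[-2][-1]
    return [x + step for x in tokens[-1]]


history = [float(t) for t in range(12)]  # 3 patches of length 4
print(autoregressive_forecast(history, horizon=6, patch_len=4,
                              next_patch=linear_trend_model))
# [12.0, 13.0, 14.0, 15.0, 16.0, 17.0]
```

Because the model only ever predicts "the next patch", the same loop serves any horizon without retraining, which is what makes zero-shot forecasting from a single pre-trained model possible.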

The paper has been accepted at ICML 2024, and the pre-training dataset has been released on Hugging Face.

https://github.com/thuml/Large-Time-Series-Model

https://arxiv.org/abs/2402.02368

https://huggingface.co/datasets/thuml/UTSD

Project: PraisonAI

PraisonAI is a low-code solution that builds on frameworks such as AutoGen and CrewAI to simplify building and managing multi-agent large language model (LLM) systems.

The project emphasizes ease of use, customization, and efficient human-agent collaboration, and it supports interacting with an entire codebase.

https://github.com/MervinPraison/PraisonAI

Project: Awesome-Tabular-LLMs

This project collects papers on large language models (LLMs) for table-related tasks, such as table question answering (table QA).

It organizes and classifies recent research on tabular LLMs and multimodal LLMs, covering tasks such as table understanding, table generation, and table fact verification.
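A common starting point for table QA with a text-only LLM is to serialize the table into the prompt. The sketch below renders a table as a Markdown pipe table and appends a question; the template wording and the function names `table_to_markdown` and `build_table_qa_prompt` are hypothetical, and other serializations (HTML, JSON, and so on) are also used in practice.

```python
from typing import Sequence


def table_to_markdown(header: Sequence[str], rows: Sequence[Sequence[object]]) -> str:
    """Serialize a table as a Markdown pipe table (pipes inside cells are escaped)."""
    def fmt(cells):
        return "| " + " | ".join(str(c).replace("|", "\\|") for c in cells) + " |"
    lines = [fmt(header), fmt(["---"] * len(header))]
    lines.extend(fmt(row) for row in rows)
    return "\n".join(lines)


def build_table_qa_prompt(header, rows, question: str) -> str:
    """Hypothetical prompt template: instruction, serialized table, then the question."""
    return ("Answer the question using only the table below.\n\n"
            f"{table_to_markdown(header, rows)}\n\n"
            f"Question: {question}\nAnswer:")


# Example table built from the models described in this post.
header = ["Model", "Params (B)", "Context"]
rows = [["Mistral-Nemo", 12, "128K"], ["DeepSeek-V2", 236, "128K"]]
prompt = build_table_qa_prompt(header, rows, "Which model has more parameters?")
print(prompt)
```

The resulting string is what would be sent to the model; multimodal approaches instead pass the table as an image.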

https://github.com/SpursGoZmy/Awesome-Tabular-LLMs

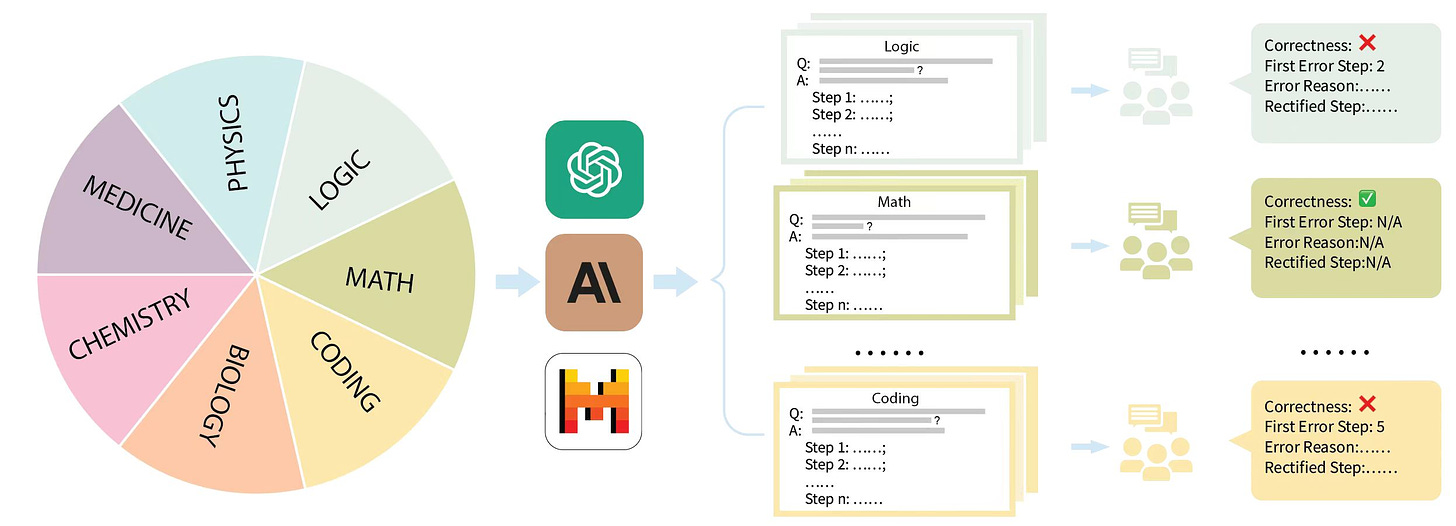

Project: Mr-Ben

Mr-Ben is a comprehensive meta-reasoning benchmark designed to assess the reasoning abilities of LLMs.

The benchmark casts LLMs in the role of a teacher grading a solution, assessing whether they can reason correctly, locate errors, and correct them.

Each data point includes a question, a chain-of-thought (CoT) answer, and an error analysis.

The evaluation requires models to judge whether a solution is correct and, if it is not, to report the first erroneous step and the cause of the error.
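To make the evaluation protocol concrete, here is a minimal sketch of one data point and of per-item grading. The field names, the 1-based step index, and the `reason_matches` callback (exact string match in the example) are assumptions for illustration; they are not the dataset's actual schema, and the benchmark's own scoring of error causes may differ.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class MetaReasoningItem:
    """One benchmark item (hypothetical field names)."""
    question: str
    cot_steps: List[str]              # candidate chain-of-thought, one step per entry
    solution_correct: bool            # ground-truth correctness label
    first_error_step: Optional[int]   # 1-based index; None when the solution is correct
    error_reason: Optional[str]       # annotated cause of the first error


@dataclass
class ModelJudgment:
    """What the model under test reports after grading the solution."""
    says_correct: bool
    first_error_step: Optional[int] = None
    error_reason: Optional[str] = None


def grade(item: MetaReasoningItem, judgment: ModelJudgment,
          reason_matches: Callable[[str, str], bool]) -> Dict[str, bool]:
    result = {"correctness": judgment.says_correct == item.solution_correct}
    if not item.solution_correct:
        # In this sketch, step and reason are scored only on truly incorrect
        # solutions, and only if the model also flagged the solution as incorrect.
        flagged = not judgment.says_correct
        result["first_error_step"] = flagged and judgment.first_error_step == item.first_error_step
        result["error_reason"] = (flagged and judgment.error_reason is not None
                                  and reason_matches(judgment.error_reason, item.error_reason))
    return result


def exact(a: str, b: str) -> bool:
    """Toy reason matcher: case-insensitive exact match."""
    return a.strip().lower() == b.strip().lower()


item = MetaReasoningItem(
    question="What is 3 * (2 + 4)?",
    cot_steps=["2 + 4 = 6", "3 * 6 = 16", "The answer is 16."],
    solution_correct=False,
    first_error_step=2,
    error_reason="3 * 6 equals 18, not 16.",
)
print(grade(item, ModelJudgment(False, 2, "3 * 6 equals 18, not 16."), exact))
# {'correctness': True, 'first_error_step': True, 'error_reason': True}
```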

Results are summarized by MR-Score, a unified metric built from three sub-metrics: the Matthews correlation coefficient (MCC) for judging solution correctness, the accuracy of identifying the first error step, and the accuracy of identifying the cause of the error.
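The sketch below computes the three sub-metrics and combines them. It assumes MR-Score is a weighted sum of MCC, first-error-step accuracy, and error-cause accuracy; the equal weights are placeholders, not the values used by the benchmark, so check the paper before comparing numbers.

```python
import math
from typing import Sequence, Tuple


def mcc(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """Matthews correlation coefficient for binary labels
    (here: is the solution correct? ground truth vs. model verdict)."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))
    tn = sum(not t and not p for t, p in zip(y_true, y_pred))
    fp = sum(not t and p for t, p in zip(y_true, y_pred))
    fn = sum(t and not p for t, p in zip(y_true, y_pred))
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom  # 0 by convention if undefined


def accuracy(hits: Sequence[bool]) -> float:
    """Fraction of True entries; 0.0 for an empty sequence."""
    return sum(hits) / len(hits) if hits else 0.0


def mr_score(mcc_value: float, acc_step: float, acc_reason: float,
             weights: Tuple[float, float, float] = (1 / 3, 1 / 3, 1 / 3)) -> float:
    """Weighted sum of the three sub-metrics; the default weights are placeholders."""
    w_mcc, w_step, w_reason = weights
    return w_mcc * mcc_value + w_step * acc_step + w_reason * acc_reason


labels = [True, True, False, False]     # ground truth: is each solution correct?
verdicts = [True, False, False, False]  # the model's verdicts
m = mcc(labels, verdicts)
step_acc = accuracy([True, False])      # first-error-step hits on the incorrect solutions
reason_acc = accuracy([True, False])    # error-cause hits on the incorrect solutions
print(round(m, 4), round(mr_score(m, step_acc, reason_acc), 4))  # 0.5774 0.5258
```

MCC is used for the correctness judgment because, unlike plain accuracy, it is not inflated when a model simply labels every solution as correct (or every one as incorrect).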

https://arxiv.org/abs/2406.13975

https://huggingface.co/datasets/Randolphzeng/Mr-Ben