Open Source Today (2024-10-25): Zhipu Open-Sources GLM-4-Voice

Zhipu AI's GLM-4-Voice supports real-time bilingual speech generation with adjustable emotion, tone, speed, and dialect for dynamic conversations.

Here are some interesting open-source AI models and frameworks I wanted to share today:

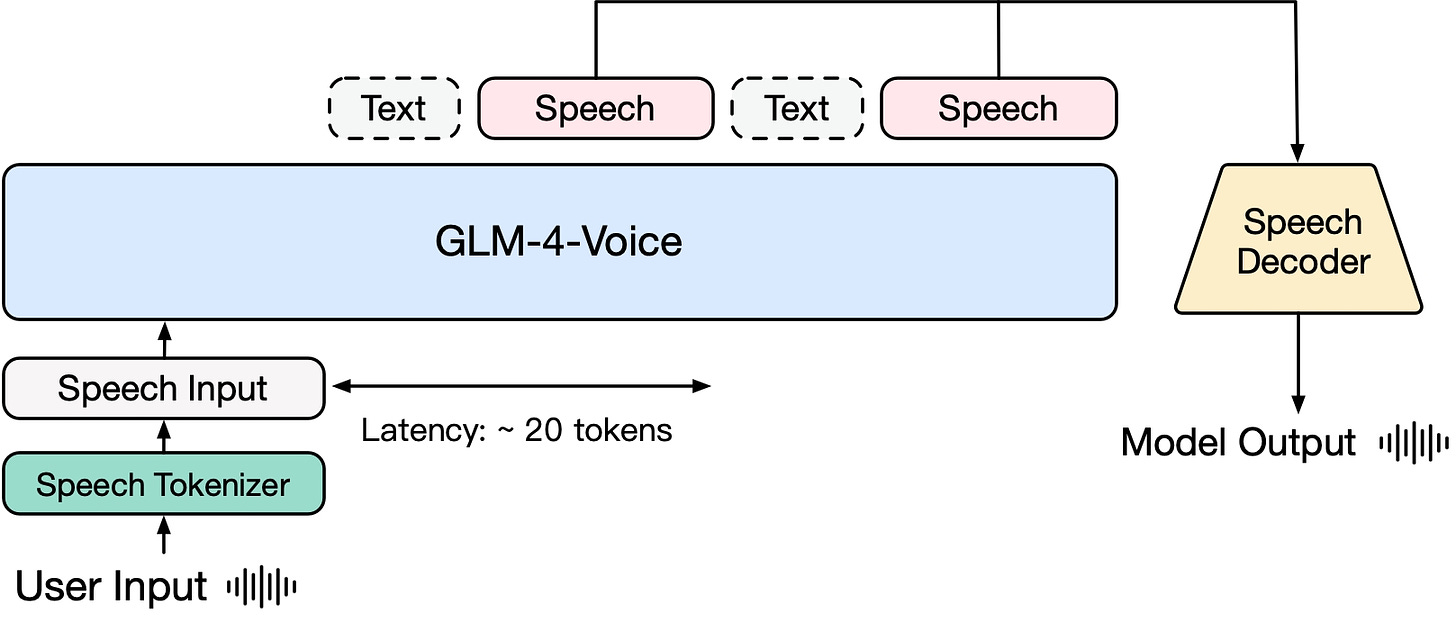

Project: GLM-4-Voice

GLM-4-Voice is an end-to-end voice model developed by Zhipu AI, capable of directly understanding and generating Chinese and English speech for real-time voice conversations.

The model can adjust voice attributes such as emotion, tone, speed, and dialect in response to user instructions.

GLM-4-Voice consists of three components: GLM-4-Voice-Tokenizer, which discretizes speech input into tokens; GLM-4-Voice-Decoder, which generates speech output from tokens; and GLM-4-Voice-9B, the language model that has been pre-trained and aligned on the speech modality.
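To make the division of labor concrete, here is a minimal sketch of how the three stages could hand data to each other. It does not use the GLM-4-Voice code or API: `toy_tokenizer`, `toy_language_model`, and `toy_decoder` are hypothetical stand-ins, and the "audio" is just a list of floats. Only the shape of the pipeline (waveform to discrete tokens, tokens to tokens, tokens back to waveform) is taken from the description above.

```python
from typing import List

Waveform = List[float]    # toy audio: samples in [-1.0, 1.0]
SpeechTokens = List[int]  # discrete speech token ids


def toy_tokenizer(wave: Waveform, frame: int = 4, levels: int = 16) -> SpeechTokens:
    """Hypothetical stand-in for GLM-4-Voice-Tokenizer: one token per frame."""
    tokens = []
    for start in range(0, len(wave), frame):
        chunk = wave[start:start + frame]
        mean = sum(chunk) / len(chunk)
        # Quantize the frame mean from [-1, 1] onto an integer bucket.
        bucket = int((mean + 1.0) / 2.0 * (levels - 1) + 0.5)
        tokens.append(max(0, min(levels - 1, bucket)))
    return tokens


def toy_language_model(tokens: SpeechTokens, levels: int = 16) -> SpeechTokens:
    """Hypothetical stand-in for GLM-4-Voice-9B.

    It only mirrors each token id so the reply visibly differs from the input.
    """
    return [levels - 1 - t for t in tokens]


def toy_decoder(tokens: SpeechTokens, frame: int = 4, levels: int = 16) -> Waveform:
    """Hypothetical stand-in for GLM-4-Voice-Decoder: expand tokens to samples."""
    wave: Waveform = []
    for t in tokens:
        value = t / (levels - 1) * 2.0 - 1.0
        wave.extend([value] * frame)
    return wave


def voice_turn(wave: Waveform) -> Waveform:
    """One conversational turn: speech in, speech out, tokens in between."""
    return toy_decoder(toy_language_model(toy_tokenizer(wave)))
```

The point of the sketch is that the language model in the middle only ever sees and emits discrete tokens, which is what lets a text-style model handle speech end to end.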

https://github.com/THUDM/GLM-4-Voice

https://huggingface.co/THUDM/glm-4-voice-decoder

Project: Aya Expanse

Aya Expanse 32B is an open-source research model with highly advanced multilingual capabilities.

It combines a high-performance pre-trained model from the Command family with a year of focused research from Cohere For AI, including data arbitrage, multilingual preference training, safety tuning, and model merging. The model supports 23 languages.

https://huggingface.co/CohereForAI/aya-expanse-32b

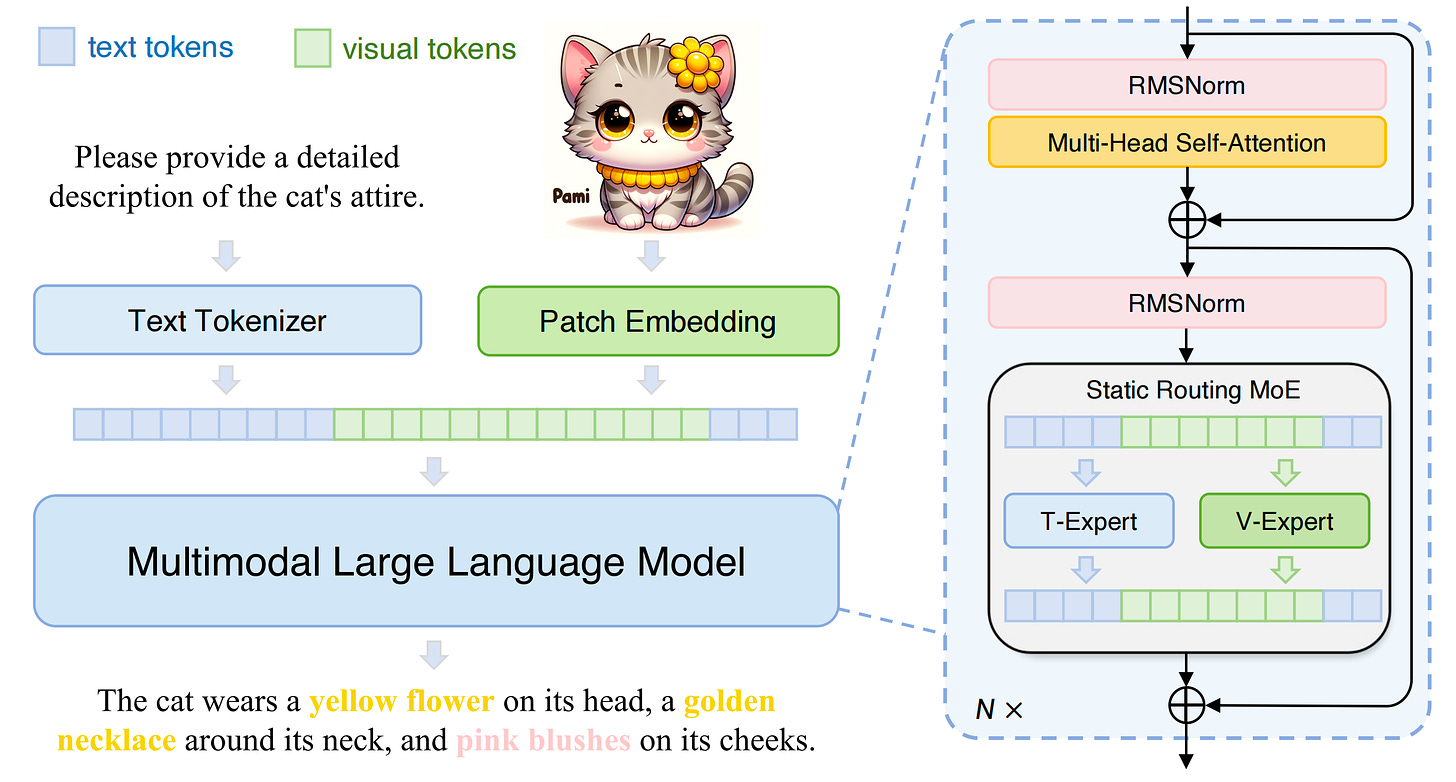

Project: Mono-InternVL

Mono-InternVL-2B is a monolithic multimodal large language model (MLLM) that integrates visual encoding and text decoding into one model.

By embedding visual experts through the Mixture-of-Experts mechanism and freezing the language model, it optimizes visual capabilities without compromising language knowledge.
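The idea of adding visual experts while freezing the language side can be illustrated with a toy layer. This is a sketch under stated assumptions, not Mono-InternVL's implementation: it assumes tokens are routed to an expert by modality, and `Expert`, `ModalityRoutedLayer`, and the scalar weights are hypothetical simplifications of real feed-forward experts.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Expert:
    weight: float     # a single scalar stands in for a full feed-forward block
    trainable: bool

    def forward(self, x: float) -> float:
        return self.weight * x

    def sgd_step(self, grad: float, lr: float) -> None:
        # Frozen experts ignore updates, so what they already know is preserved.
        if self.trainable:
            self.weight -= lr * grad


class ModalityRoutedLayer:
    """Toy layer: text tokens use a frozen expert, image tokens a trainable one."""

    def __init__(self) -> None:
        self.experts: Dict[str, Expert] = {
            "text": Expert(weight=2.0, trainable=False),   # frozen language expert
            "image": Expert(weight=1.0, trainable=True),   # added visual expert
        }

    def forward(self, tokens: List[Tuple[str, float]]) -> List[float]:
        return [self.experts[modality].forward(x) for modality, x in tokens]

    def train_step(self, tokens: List[Tuple[str, float]],
                   targets: List[float], lr: float = 0.1) -> None:
        for (modality, x), y in zip(tokens, targets):
            expert = self.experts[modality]
            # Gradient of the squared error (w*x - y)^2 with respect to w.
            grad = 2.0 * (expert.forward(x) - y) * x
            expert.sgd_step(grad, lr)
```

After a training step on mixed text and image tokens, only the `"image"` expert's weight moves, which is the property the paragraph above describes: visual ability is trained without disturbing the language weights.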

It also introduces Endogenous Visual Pretraining (EViP), which learns visual content in a coarse-to-fine manner.

Compared to models like Mini-InternVL-2B-1.5, Mono-InternVL-2B excels in both performance and deployment efficiency.

https://huggingface.co/OpenGVLab/Mono-InternVL-2B

Project: KaibanJS

KaibanJS is a native JavaScript framework designed to build and manage multi-agent systems using a Kanban approach.

It helps users create, visualize, and manage AI agents, tasks, tools, and teams, supporting seamless AI workflow orchestration and real-time workflow visualization.

Users can track task progress in real time through KaibanJS and collaborate more efficiently in AI projects.
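For readers unfamiliar with the Kanban idea applied to agents, the following sketch shows the underlying pattern. It is written in Python for illustration and is not the KaibanJS API: `Task`, `Board`, `run_board`, and the column names are all hypothetical, and the "agents" are plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

COLUMNS = ("TODO", "DOING", "DONE")  # assumed column names for this sketch


@dataclass
class Task:
    title: str
    agent: str            # name of the agent responsible for this task
    status: str = "TODO"
    result: str = ""


@dataclass
class Board:
    tasks: List[Task] = field(default_factory=list)
    history: List[str] = field(default_factory=list)

    def move(self, task: Task, status: str) -> None:
        if status not in COLUMNS:
            raise ValueError(f"unknown column: {status}")
        task.status = status
        # Every move is logged, which is what makes progress observable.
        self.history.append(f"{task.title} -> {status}")

    def snapshot(self) -> Dict[str, List[str]]:
        """Return the board as column name -> task titles."""
        view: Dict[str, List[str]] = {column: [] for column in COLUMNS}
        for task in self.tasks:
            view[task.status].append(task.title)
        return view


def run_board(board: Board, agents: Dict[str, Callable[[str], str]]) -> None:
    """Run tasks in order, moving each card across the board as it progresses."""
    for task in board.tasks:
        board.move(task, "DOING")
        task.result = agents[task.agent](task.title)
        board.move(task, "DONE")
```

Calling `snapshot()` at any point gives the kind of column view a UI could render, and `history` is the event stream a real-time visualization would subscribe to.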

https://github.com/kaiban-ai/KaibanJS

Project: Agent-0

The Agent-0 project is a proof of concept that aims to replicate the reasoning capabilities of OpenAI's newly released o1 model.

The o1 model reflects on its solutions through Chain-of-Thought prompting and reinforcement learning, improving its responses through iterative reasoning.

The project works with the Gemini API or any model that supports function calling. A sequential system of agents proposes a solution, applying Chain-of-Thought and reflection techniques at each stage to refine it iteratively.
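The draft, reflect, and revise loop can be sketched in a few lines. This is an illustration of the general pattern rather than Agent-0's code: `Model` is a hypothetical prompt-in, text-out callable (no Gemini client is used), and the role names, prompt wording, and the `"OK"` stop signal are assumptions made for the example.

```python
from typing import Callable, List

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


def run_stage(model: Model, role: str, task: str, previous: str,
              max_rounds: int = 2) -> str:
    """One agent stage: draft with step-by-step reasoning, then reflect and revise."""
    draft = model(
        f"[{role}] Task: {task}\nPrevious output: {previous}\nThink step by step."
    )
    for _ in range(max_rounds):
        critique = model(
            f"[{role}] Critique this draft and reply OK if it is sound:\n{draft}"
        )
        if critique.strip() == "OK":
            break  # reflection found nothing to fix
        draft = model(
            f"[{role}] Revise the draft using this critique.\n"
            f"Draft: {draft}\nCritique: {critique}"
        )
    return draft


def run_pipeline(model: Model, roles: List[str], task: str) -> str:
    """Run the stages in sequence, feeding each stage the previous stage's output."""
    output = ""
    for role in roles:
        output = run_stage(model, role, task, output)
    return output
```

In practice `model` would wrap a real API call; keeping it as a plain callable makes the loop easy to test with a scripted stand-in and easy to swap between providers.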

https://github.com/PromtEngineer/Agent-0

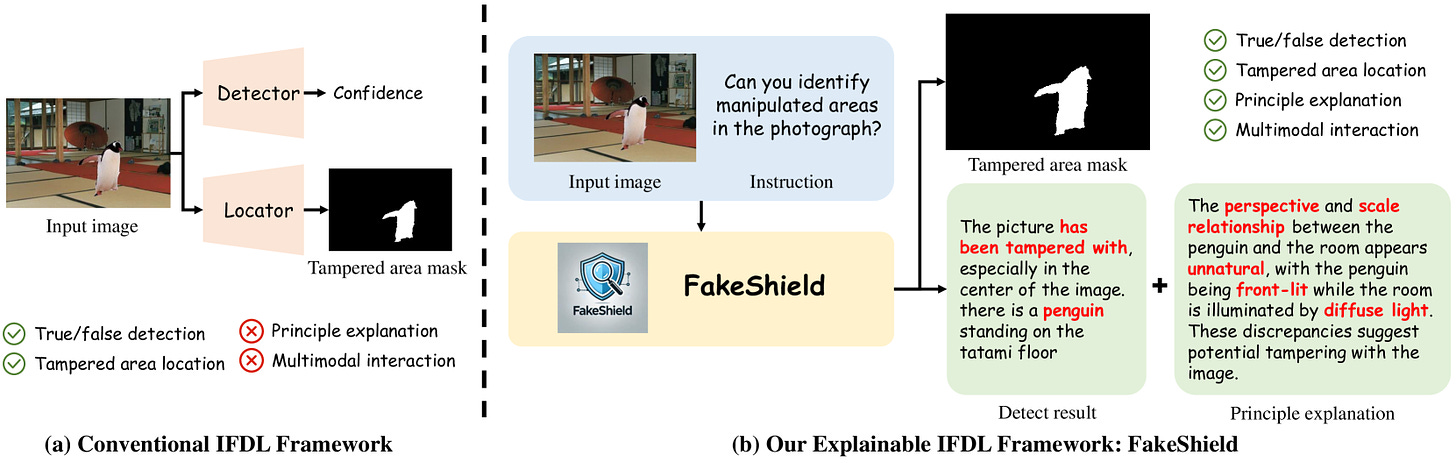

Project: FakeShield

FakeShield is a multimodal framework designed for interpretable image forgery detection and localization using multimodal large language models.

FakeShield can evaluate image authenticity, generate masks for tampered areas, and provide judgments based on pixel-level and image-level tampering clues.

The project uses GPT-4o to enhance existing image forgery detection and localization (IFDL) datasets, and introduces a Domain-Tag-Guided Explainable Forgery Detection Module (DTE-FDM) and a Multimodal Forgery Localization Module (MFLM) to achieve forgery localization guided by detailed text descriptions.

https://github.com/zhipeixu/FakeShield

Project: Parrot-TTS

Parrot-TTS is a text-to-speech (TTS) system that uses a Transformer-based sequence-to-sequence model to map character tokens to HuBERT quantized units, and an improved HiFi-GAN vocoder for speech synthesis.

The project provides installation instructions, demo execution, and guidelines for training the TTS model on your own data.