OpenAI GPT-4o mini Released: LLM Costs Drop 99% in 2 Years, Evaluation Speed Increased

Discover OpenAI's GPT-4o mini, a cost-effective AI model outperforming competitors with superior speed and performance. Ideal for real-time applications!

OpenAI launches a new model, replacing the aging GPT-3.5.

GPT-4o mini currently ranks ninth on the WildBench leaderboard, ahead of Google's Gemini 1.5 Flash and Anthropic's Claude 3 Haiku.

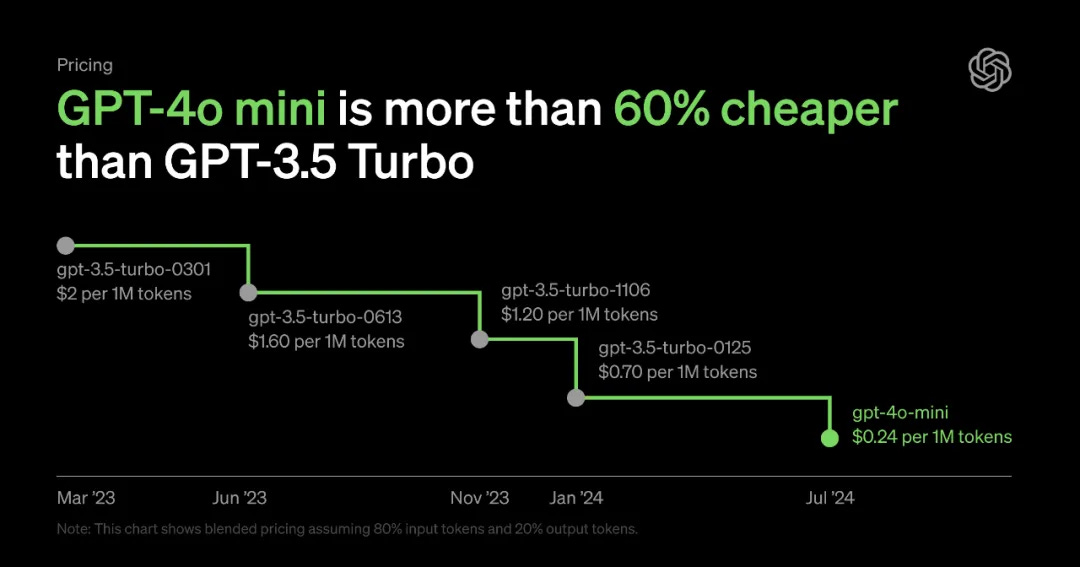

GPT-4o mini is almost as capable as the original GPT-4 but much cheaper:

$0.15 per million input tokens

$0.60 per million output tokens
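The two prices combine linearly, so estimating a workload's bill is simple arithmetic. The sketch below uses the prices listed above. The `estimate_cost` helper and the example workload of 10,000 requests, each with 2,000 input and 500 output tokens, are hypothetical and chosen only for illustration.

```python
# GPT-4o mini list prices in USD per million tokens, as quoted in the article.
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of a workload at GPT-4o mini list prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 10,000 requests, each with 2,000 input and 500 output tokens.
requests = 10_000
cost = estimate_cost(requests * 2_000, requests * 500)
print(f"${cost:.2f}")  # prints "$6.00"
```

At these rates, the 20 million input tokens and 5 million output tokens in this example each cost about $3. Output tokens are four times as expensive as input tokens, so output-heavy workloads dominate the bill faster.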

In ChatGPT, GPT-3.5 has been fully retired, and free users now get GPT-4o mini instead. The model is also available through the API, with a 128k-token context window (text and image input) and up to 16k output tokens. For comparison, Claude 3.5 Sonnet raised its output limit to 8k tokens only a few days earlier.
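As a rough illustration of the API described above, the sketch below builds a Chat Completions-style request body that mixes text and image input and caps the output length. It makes no network call. The following are assumptions for this sketch: the model identifier `gpt-4o-mini`, the exact limits of 128,000 context tokens and 16,384 output tokens, and the use of the `max_tokens` field. The `build_request` helper is hypothetical. Check the current OpenAI API reference before relying on any of these.

```python
import json
from typing import Optional

# Assumed limits matching the article's "128k input" and "16k output" figures.
CONTEXT_WINDOW = 128_000
MAX_OUTPUT_TOKENS = 16_384


def build_request(prompt: str, image_url: Optional[str] = None, max_tokens: int = 1_024) -> dict:
    """Hypothetical helper: build a Chat Completions-style body for gpt-4o-mini (no network call)."""
    if not 1 <= max_tokens <= MAX_OUTPUT_TOKENS:
        raise ValueError(f"max_tokens must be between 1 and {MAX_OUTPUT_TOKENS}")
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        # Image input is sent as an extra content part next to the text part.
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": "gpt-4o-mini",
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


body = build_request("Describe this chart.", image_url="https://example.com/chart.png")
print(json.dumps(body, indent=2))
```

Validating `max_tokens` locally against the output limit surfaces a bad request before it is sent. The resulting dictionary can then be passed to whichever HTTP client or SDK you use.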

Thanks to its low cost and low latency, OpenAI suggests it for use cases such as:

Chained or parallel model calls

Passing large amounts of context to the model (e.g., an entire codebase or full conversation history)

Fast, real-time text interactions (e.g., customer support chatbots)
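The first use case, chained versus parallel calls, can be sketched with `asyncio`. In a chain, each call waits for the previous call's output. In parallel, independent calls run concurrently, so overall latency is closer to that of the slowest single call. The `call_model` function is a hypothetical stand-in that only simulates latency. It is not a real API client and would need to be replaced with an actual GPT-4o mini call.

```python
import asyncio


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a GPT-4o mini API call; replace with a real client."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"answer({prompt})"


async def chained(question: str) -> str:
    # Chain: the second call consumes the first call's output.
    outline = await call_model(f"outline: {question}")
    return await call_model(f"expand: {outline}")


async def parallel(questions: list) -> list:
    # Parallel: independent calls run concurrently; gather keeps results in input order.
    return list(await asyncio.gather(*(call_model(q) for q in questions)))


async def main() -> None:
    print(await chained("What is GPT-4o mini?"))
    print(await parallel(["pricing", "context window", "output limit"]))


asyncio.run(main())
```

Cheap, low-latency calls make both patterns more practical. A chain multiplies per-call latency, and a wide fan-out multiplies per-call cost.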