OpenAI's Sam Altman plugged GPT-2 thrice - but why?

The untold story behind Sam Altman's GPT-2 references

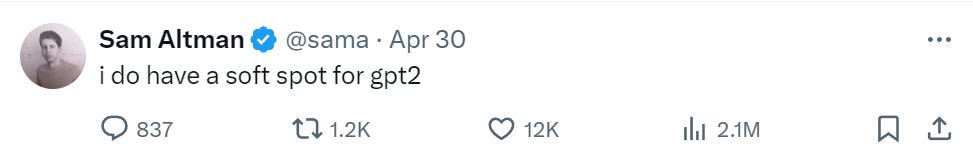

On May 6th, OpenAI CEO Sam Altman tweeted, "I am a great GPT-2 chatbot." On April 30th, he had also said he really liked GPT-2, an old AI model from 2019.

Many people were surprised that Altman brought up GPT-2 more than once. A chatbot with GPT-2 in its name had done very well on a chatbot test. It was as good as new models like GPT-4. Some thought this meant OpenAI had made a new version of GPT-2. But on May 2nd, Altman said this was not true.

OpenAI may have found a new way to train models, one that makes small models work as well as big ones. This is like what Microsoft did with their new Phi-3 models.

The model behind these hints might be built for phones and tablets. It would work well but not use a lot of power. Microsoft, Google, and Meta have made models like this. But OpenAI has not made one yet.

Apple reportedly wants to use models from OpenAI and Google to improve the next version of iOS. Altman may be promoting GPT-2 to win Apple's trust.

The promotion shows how good OpenAI's models are. It also puts pressure on Google. Google made models for phones called Gemini Nano, but not many people are using them.

Making models for phones is tricky. The models can't be too big. Microsoft's smallest Phi-3 model, Phi-3-mini, has 3.8 billion parameters. Google's smallest Gemini Nano model has 1.8 billion.

Bigger models need more computing power. Phones have small batteries and limited memory. A huge model would use up the battery very fast.
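
To make the size limit concrete, here is a rough sketch of how much memory a model's weights take up. The function name and the bit widths are assumptions chosen for illustration, not figures from any vendor. The only number taken from this article is the 1.8 billion parameter count, and the estimate ignores everything a real app also needs, such as working memory during a chat.

```python
def model_memory_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate memory needed just to store the weights, in gigabytes (10^9 bytes)."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


# 1.8 billion parameters is the figure quoted in this article.
# The bit widths below are illustrative assumptions about how weights are stored.
for bits in (32, 16, 8, 4):
    size = model_memory_gb(1.8e9, bits)
    print(f"{bits:>2}-bit weights: about {size:.1f} GB")
```

Under these assumptions, a 1.8 billion parameter model needs about 3.6 GB with 16-bit weights and about 0.9 GB with 4-bit weights. That is why both the parameter count and the storage format matter so much on a phone.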

Phones need fast models. Right now, models run slower on phones than on computers. Small models help phones do things better. They can handle voice commands, translate text, and answer questions.

So it makes sense for OpenAI to test with their small GPT-2 model from 2019. It uses the same basic transformer design as their bigger GPT-3 and GPT-4 models.

OpenAI might make a small AI model for phones. They will likely give it a name like "GPT-4 mini" or "GPT-4 little". They probably won't call it GPT-2.