OpenAI's Whisper Faces Severe Hallucination Issues: Half of 100 Hours of Transcriptions Are Nonsense

OpenAI's Whisper faces severe hallucination issues, with about half of more than 100 hours of examined transcriptions found to be inaccurate, raising concerns about its use in medical settings.

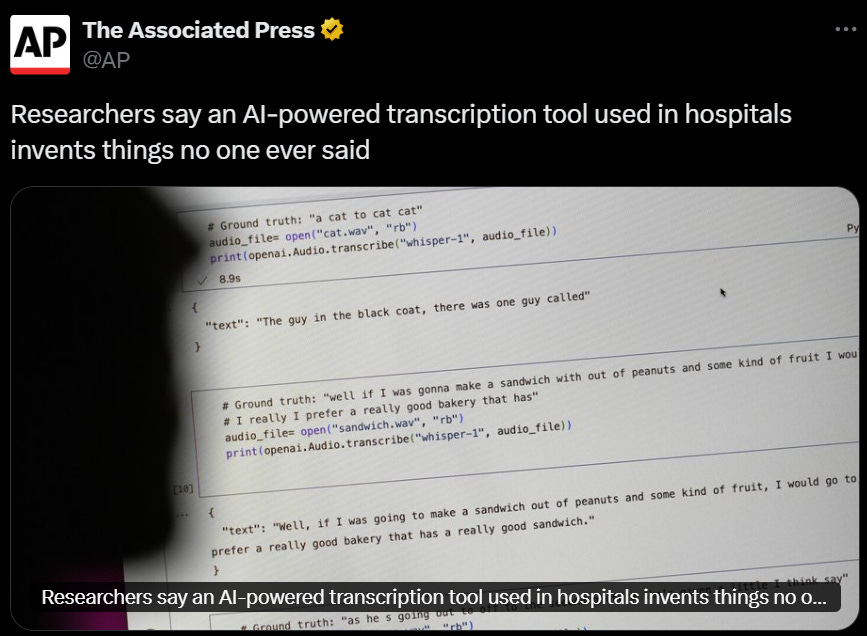

OpenAI's AI speech transcription tool, Whisper, known for its "human-level" capabilities, has been found to produce serious hallucinations: engineers discovered that about half of more than 100 hours of transcriptions were inaccurate.

More concerning, the Associated Press revealed that medical institutions have been using Whisper to transcribe doctor-patient consultations, attracting significant public attention.

Reports indicate that more than 30,000 clinicians and 40 health systems, including Minnesota's Mankato Clinic and Children's Hospital Los Angeles, have begun using a Whisper-based tool developed by the French AI health company Nabla.

The tool has reportedly been used to transcribe approximately 7 million medical visits, raising alarm among users.

Notably, OpenAI had previously warned against using this tool in "high-risk areas."

In response to the recent revelations, an OpenAI spokesperson stated that the company would incorporate a feedback mechanism in future model updates.