Prime Intellect: World's First Decentralized Large Model, Fully Open-Sourced

Prime Intellect announces the open-source release of its decentralized 10B model, INTELLECT-1. Learn about its training process, architecture, and future AGI plans.

On November 22, Prime Intellect announced that it had finished training a 10B-parameter model using a decentralized approach.

On November 30, the team open-sourced everything: the base model, checkpoints, post-trained models, data, the PRIME training framework, and a technical report.

Reportedly, this is the first-ever 10B-scale model trained in a decentralized manner.

Prime Intellect shared some statistics on the decentralized training run:

- 30 compute contributors
- 10 billion parameters
- 5 countries across 3 continents
- 1 trillion tokens

Prime Intellect stated that INTELLECT-1 represents a 10x increase in scale over previous research on decentralized training.

The result suggests that training large-scale models is no longer the exclusive domain of big corporations; it can also be achieved through decentralized, community-driven efforts.

Their next step is to expand the model to frontier scales, with the ultimate goal of creating an open-source AGI.

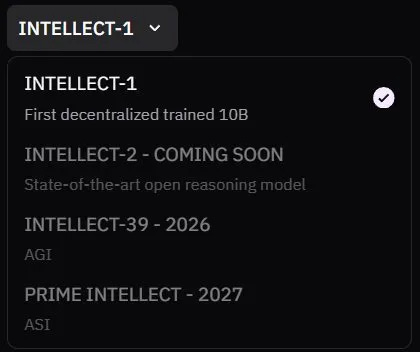

The online demo hints at this ambition: its model selector lists prospective options for open inference models, AGI, and even ASI. This is clearly an ambitious team.

The release drew some skepticism, but the AI community's response has been largely positive.