Qwen 2.5 Local Installation and Testing

Qwen 2.5 introduces 13 powerful new models for general-purpose, coding, and math tasks. Explore open-source AI breakthroughs today!

Qwen just released 13 new models, called Qwen 2.5.

The release includes three types of models: general-purpose, coding, and math models.

The general-purpose models come in seven sizes: 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B.

The coding models are available in 1.5B and 7B sizes, with a 32B version coming soon. The math models are offered in 1.5B, 7B, and 72B sizes.

All models are open-source under the Apache 2.0 license, except the 3B and 72B versions.

They also released closed-source Qwen Plus and Qwen Turbo models, available only through their API. Releasing so many models at once is impressive.

Let’s take a closer look. The new models were trained on up to 18 trillion tokens, and the coding models on 5.5 trillion tokens of code-related data.

The math models support reasoning methods like Chain of Thought, which is great.

Overall, these models outperform Qwen 2, which was already impressive. The new models show significant improvements, especially in coding and math.

The models excel at following instructions, generating long texts, understanding structured data, and producing structured outputs (like JSON).
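Structured output is only useful if the calling code checks it. The sketch below shows one way to parse and validate a JSON reply in Python. It is not from the Qwen release: the function name `parse_json_reply`, the required keys, and the sample reply string are all hypothetical, and the reply is hand-written rather than real model output.

```python
import json

FENCE = "`" * 3  # Markdown code-fence marker that some chat models wrap JSON in


def parse_json_reply(reply: str, required_keys: set[str]) -> dict:
    """Parse a reply expected to hold one JSON object and check its keys.

    Hypothetical helper for illustration; not part of any Qwen library.
    """
    text = reply.strip()

    # Strip a surrounding Markdown code fence if the reply has one.
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]  # drop the opening fence line
        if lines and lines[-1].strip() == FENCE:
            lines = lines[:-1]  # drop the closing fence line
        text = "\n".join(lines)

    data = json.loads(text)  # raises a ValueError subclass on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")

    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hand-written stand-in for a model reply (assumption, not real output).
sample_reply = '{"model": "Qwen2.5-7B-Instruct", "task": "coding"}'
fenced_reply = FENCE + "json\n" + sample_reply + "\n" + FENCE

print(parse_json_reply(fenced_reply, {"model", "task"}))
```

Failing loudly on a missing key is a deliberate choice here: it lets the caller retry the request instead of passing a half-filled object downstream.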

They support a context length of up to 128K tokens and can generate around 8K tokens, which is very useful.
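These two limits interact when you size a request. The sketch below is a rough budgeting helper, assuming that "128K" means 131,072 tokens, that "around 8K" means 8,192 tokens, and that the prompt and the generated text share the same context window. The constants and the function name `plan_generation` are illustrative, not taken from the Qwen documentation; check the model card of the specific checkpoint for the actual limits.

```python
CONTEXT_WINDOW = 128 * 1024  # assumption: "128K" read as 131,072 tokens
MAX_NEW_TOKENS = 8 * 1024    # assumption: "around 8K" read as 8,192 tokens


def plan_generation(prompt_tokens: int, requested_new_tokens: int) -> int:
    """Return how many new tokens can be requested for a prompt of this size.

    Hypothetical helper: caps the request at the generation limit and at
    whatever room the prompt leaves in the context window.
    """
    if prompt_tokens < 0 or requested_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError("prompt does not fit in the context window")

    room_left = CONTEXT_WINDOW - prompt_tokens
    return min(requested_new_tokens, MAX_NEW_TOKENS, room_left)


# A long document plus a generous request is capped at the generation limit.
print(plan_generation(prompt_tokens=100_000, requested_new_tokens=20_000))
```

In practice the prompt token count would come from the model's own tokenizer, since token counts vary between tokenizers.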

The models also retain multilingual support for 29 languages.

The long-awaited Qwen 2.5 coding models are here. The previous version, CodeQwen 1.5, was released earlier in 2024. The new versions are trained on 5.5 trillion tokens of code, enabling smaller models to compete with larger ones in coding benchmarks.

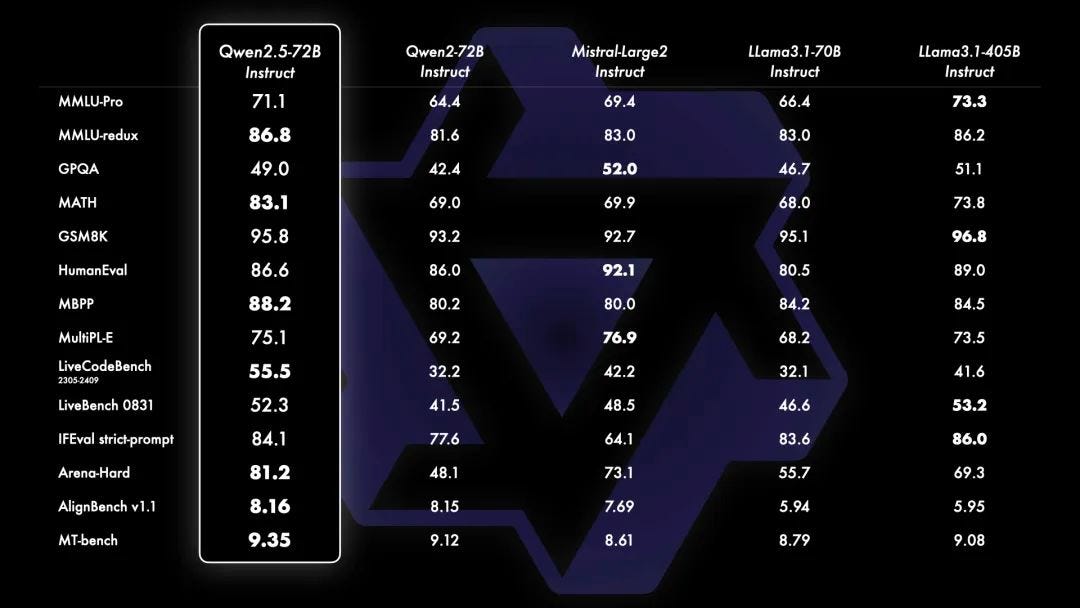

Now, let’s talk about the benchmarks.

The 72B model outperforms Qwen 2 and Llama 3.1 in almost all benchmarks, even surpassing higher-parameter models in some cases, which is impressive.

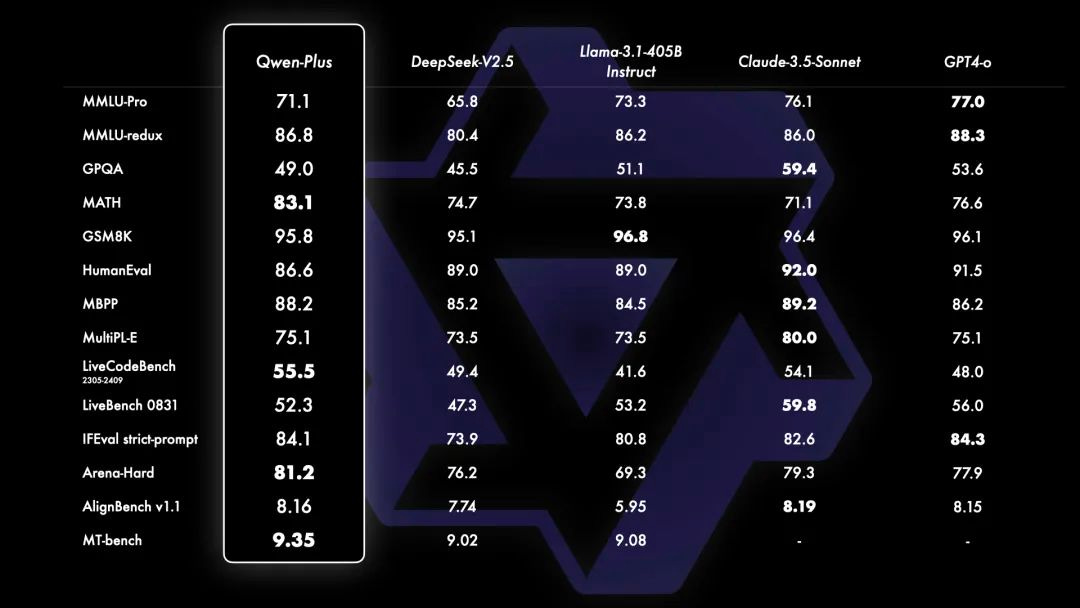

They also released a Qwen Plus model, available only through their API, which performs exceptionally well compared to other closed-source models.

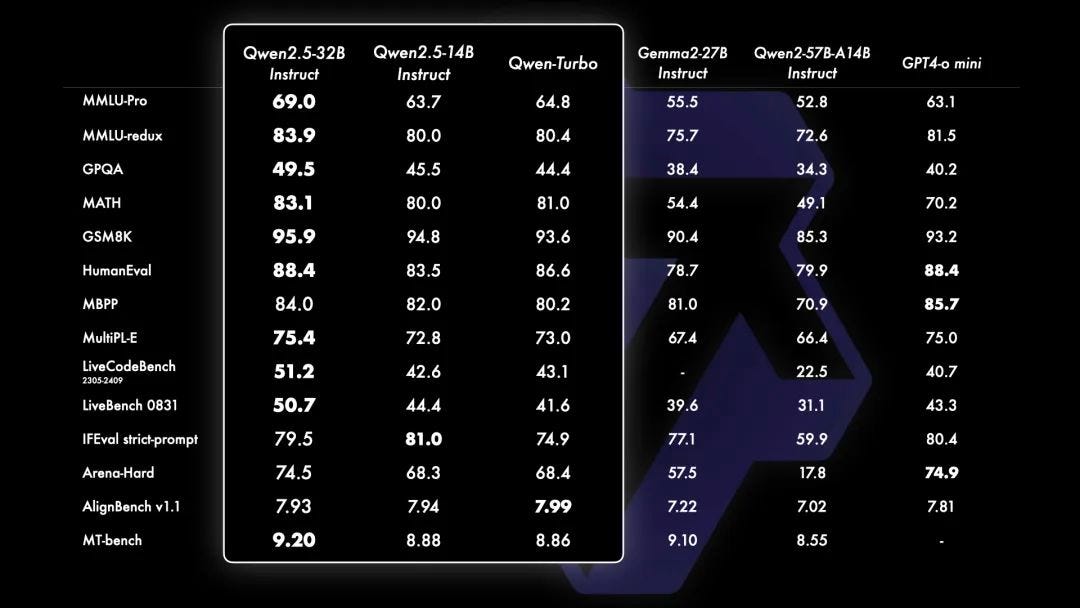

Additionally, the 32B Qwen 2.5 model competes impressively with both open-source models like Gemma and closed-source ones like GPT-4o mini.

Only in a few benchmarks, like IFEval, Arena-Hard, and AlignBench, does it fall slightly behind.

The 14B model also performs well, staying close to GPT-4o mini in most benchmarks.

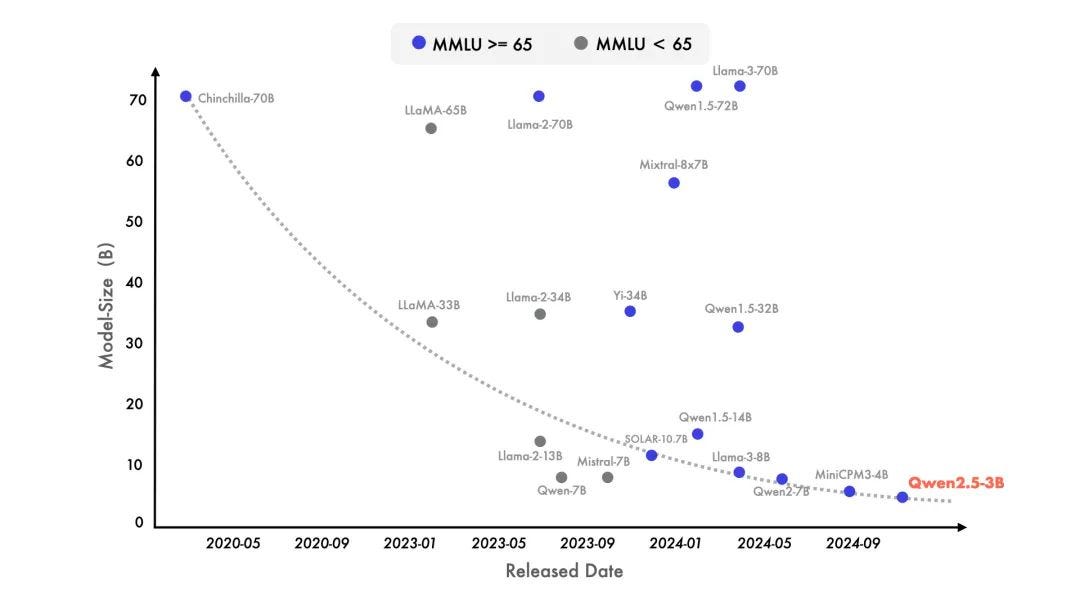

They shared a chart showing that the model size needed to reach a given MMLU score keeps shrinking with each generation. The 3B model now scores similarly to an earlier 72B model, which is surprising.

All models support generating up to 8K tokens of long text and better-structured outputs.

Now let’s discuss the coding models of Qwen 2.5.

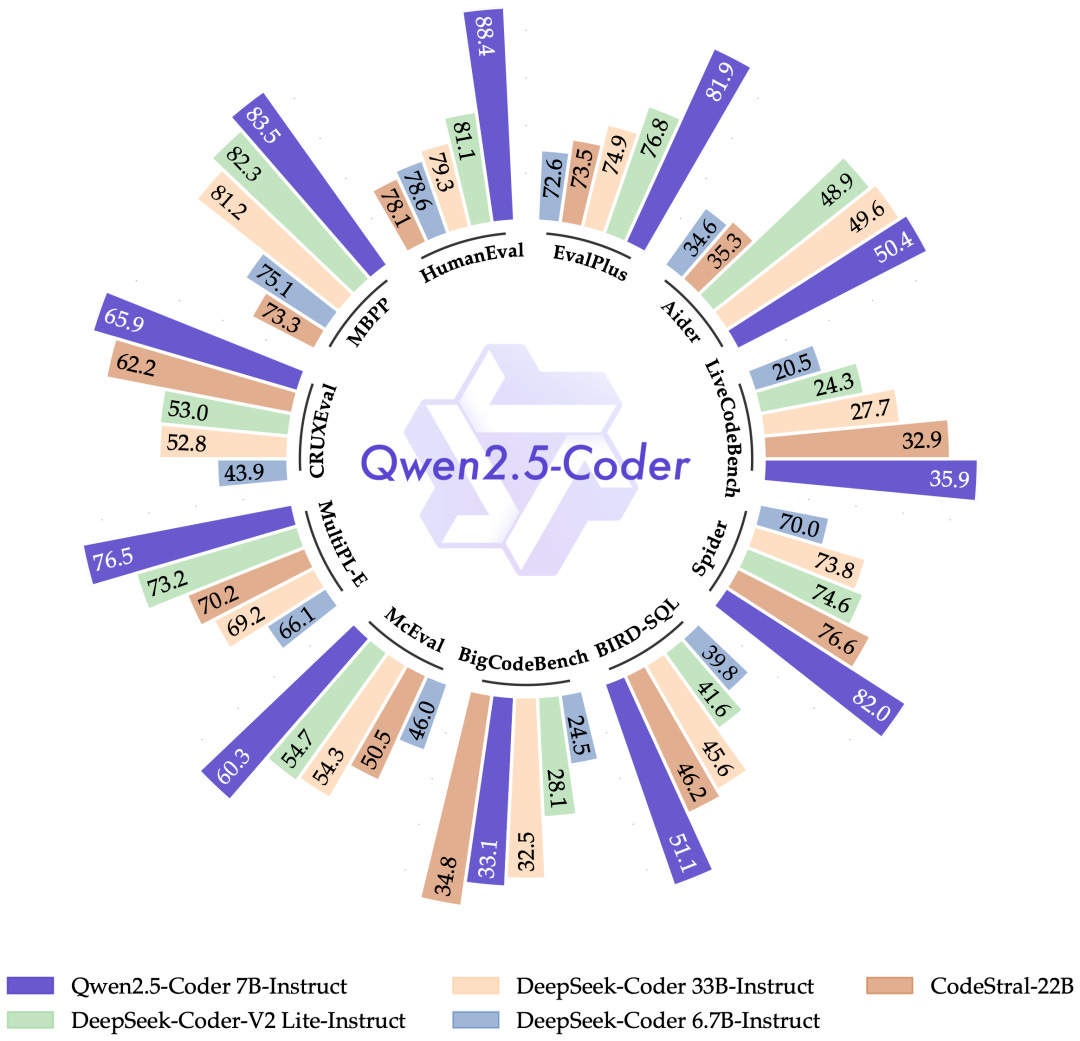

According to the Qwen team, the Qwen 2.5 coding models are now among the best. The 7B model beats models like Codestral and DeepSeek-Coder-V2-Lite in most benchmarks, even though those models are up to three times larger.

In the HumanEval and MBPP benchmarks, it performs excellently. Other benchmarks show similar results, except for BigCodeBench, where DeepSeek scores slightly higher. However, Qwen is close, and the instruction-tuned models also show great results.

They tested it with multiple programming languages, and Qwen excels in most, which is amazing to see.