Running Microsoft's Latest Phi-3.5-mini Model on Mac + Developing an Agent

Run Microsoft's Phi-3.5-mini locally, develop agents, and replace OpenAI APIs using the lightweight LlamaEdge runtime, with no complex toolchains required.

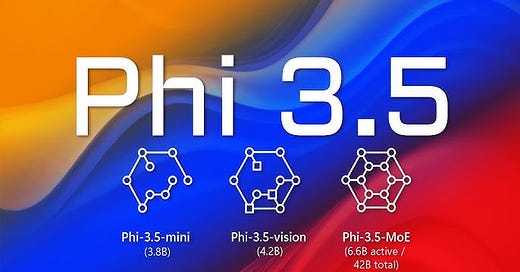

Phi-3.5-mini is a lightweight model in Microsoft's well-known Phi-3 family. It supports long contexts of up to 128K tokens while staying small enough to run efficiently on local hardware.

The model is trained on a mix of synthetic data and carefully selected web content, and it is tuned for high-quality, reasoning-intensive tasks.

Phi-3.5-mini was post-trained with supervised fine-tuning (SFT) together with preference-optimization methods such as Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO).

This post-training is aimed at precise instruction following and robust safety behavior.

In this article, we'll cover:

- How to run the Phi-3.5-mini-instruct model locally as a chatbot
- How to replace OpenAI directly in your app or agent

We'll use LlamaEdge, a Rust + Wasm tech stack, to develop and deploy applications with this model.

There's no need for complex Python packages or C++ toolchains.
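Replacing OpenAI in an app usually comes down to pointing its client at a different OpenAI-compatible base URL. The sketch below uses only the Python standard library and assumes a LlamaEdge API server is already running locally at `http://localhost:8080/v1`. The port, the `BASE_URL` value, and the model name `Phi-3.5-mini-instruct` are assumptions; match them to however you launch your own server.

```python
import json
import urllib.request

# Assumption: a local LlamaEdge API server exposing an OpenAI-compatible API.
# Change this to match the host/port your server actually listens on.
BASE_URL = "http://localhost:8080/v1"

# Hypothetical model name; use the name your server reports.
MODEL = "Phi-3.5-mini-instruct"


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at base_url."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply.

    Requires the server above to be running; otherwise urlopen raises URLError.
    """
    req = build_chat_request(prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Because the request shape is the standard OpenAI chat-completions format, switching between the local model and OpenAI's hosted API is mostly a matter of changing `BASE_URL` (and adding an `Authorization` header for OpenAI).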