Thread and Run State Analysis in OpenAI Assistants (Development of Large Model Applications 3)

Understanding the Functionality and Workflow of Threads and Run States in OpenAI Assistants

Hello everyone, welcome to the Development of Large Model Applications series.

In the last lesson, we covered how to use OpenAI's Playground to create an Assistant and retrieve it via a Python program to complete a simple order total calculation task.

Today, we will dive deeper into two important concepts in OpenAI Assistant: Thread and Run, along with their lifecycle and various states.

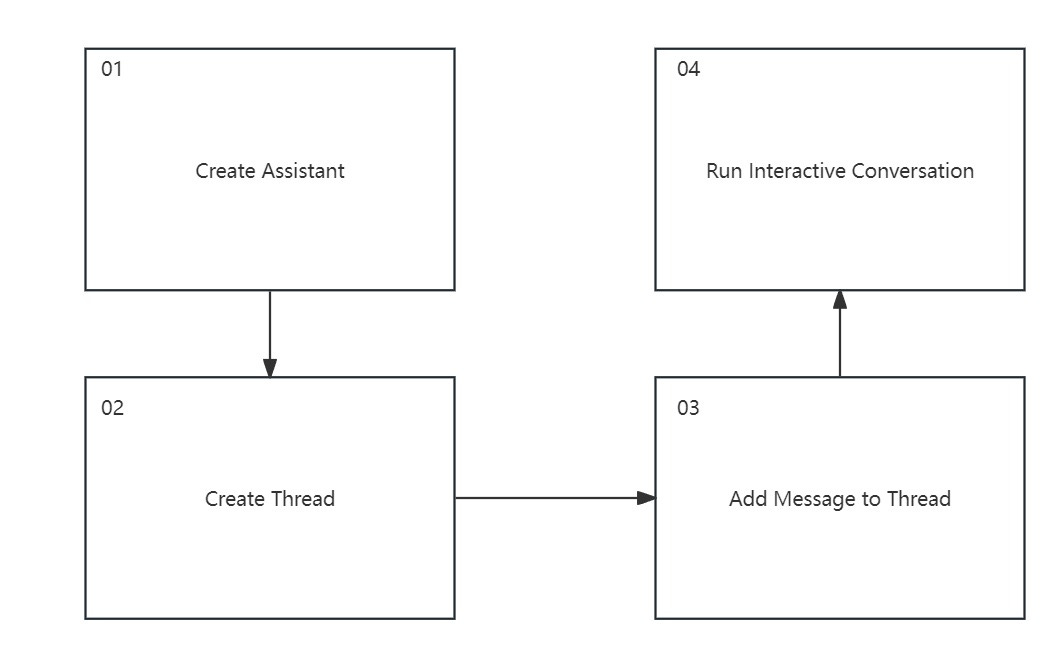

There are four key objects in the technical architecture of OpenAI Assistants: Assistant, Thread, Run, and Message. The basic steps for their operation are as follows:

Among these, Assistant and Message are self-explanatory.

So, how do we understand Thread and Run?

What Exactly Are Thread and Run?

In the design of OpenAI Assistants, a Thread represents a complete conversation between the Assistant and the user. It stores the back-and-forth Messages between the two and automatically truncates the context so that it fits within the model's context length limit.

It's similar to a chat page with ChatGPT or any language model on a web page. During the conversation, the Thread remembers the previous chat context and alerts you if your input is too long.
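To make the idea of "storing Messages and truncating context" concrete, here is a minimal in-memory sketch. It is not the OpenAI implementation: `ToyThread`, `Message`, and `context_window` are hypothetical names, and counting words is only a crude stand-in for real token counting. The source does not describe OpenAI's actual truncation strategy, so the "keep the newest messages that fit" rule is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class ToyThread:
    """Hypothetical in-memory stand-in for a Thread (not the OpenAI class)."""
    messages: list[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))

    def context_window(self, max_words: int) -> list[Message]:
        """Return the most recent messages whose total word count fits max_words.

        Word counting is an assumed, simplified replacement for token counting.
        """
        kept: list[Message] = []
        used = 0
        # Walk from newest to oldest, keeping messages while they still fit.
        for msg in reversed(self.messages):
            cost = len(msg.content.split())
            if used + cost > max_words:
                break
            kept.append(msg)
            used += cost
        kept.reverse()  # restore chronological order
        return kept


thread = ToyThread()
thread.add("user", "What is a Thread?")
thread.add("assistant", "A Thread stores the messages of one conversation.")
thread.add("user", "And a Run?")

# The full history stays in the thread; only the window sent to the model shrinks.
window = thread.context_window(max_words=12)
print([m.content for m in window])
```

With a budget of 12 words, the oldest message no longer fits, so only the last two messages are passed along, while the Thread itself still holds all three.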

A Run, on the other hand, represents the process of invoking the Assistant on a Thread.

The Assistant performs tasks by calling the model and tools based on its configuration and the Messages in the Thread.

During a Run, the Assistant also adds new Messages to the Thread.

A Run is akin to a single interaction, one question and its answer, with ChatGPT or any language model on a web page.

Interaction Process of Assistant, Thread, and Run

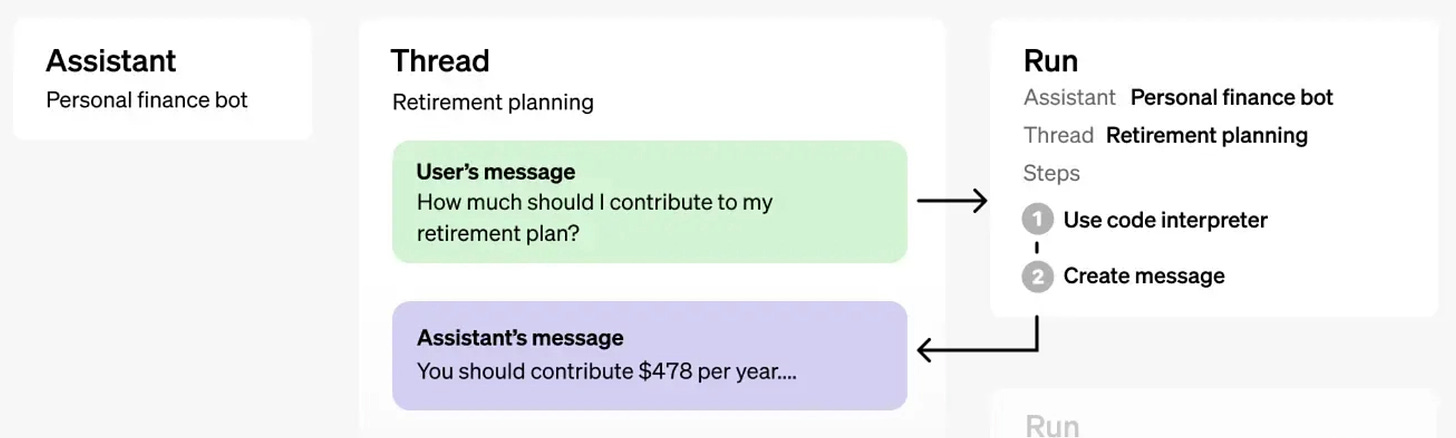

The relationship and interaction process among the three core concepts (Assistant, Thread, and Run) are illustrated in the figure above.

In this example, an Assistant named "Personal finance bot" is configured to provide retirement planning advice.

When a user sends a message to this Assistant saying, "How much should I contribute to my retirement plan?", a new Thread is created to handle this retirement planning conversation.

To answer the user's question, the system starts a new Run on this Thread.

During the Run, the Assistant generates a reply in two steps:

First, it uses a code interpreter tool to calculate a suggested contribution amount.

Then, it generates a response message based on the calculation, such as "You should contribute $478 per year...".

Finally, the Assistant adds the generated reply to the Thread and sends it to the user.

In this way, the Assistant, Thread, Run, and Message work together to complete a human-machine interaction.
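The steps above can be sketched in Python roughly as follows. This is a hedged sketch rather than a definitive implementation: the function name `ask_assistant` and its parameters are made up for illustration, `client` is assumed to be an `openai.OpenAI()` instance from the v1 Python SDK with the beta Assistants endpoints available, and the simple polling loop is only one way to wait for a Run to finish.

```python
import time


def ask_assistant(client, assistant_id: str, question: str,
                  poll_seconds: float = 1.0) -> str:
    """Create a Thread, add a user Message, start a Run, and return the reply text.

    `client` is assumed to be an `openai.OpenAI()` instance (an assumption,
    not something the function checks).
    """
    # 1. A Thread is created on its own; no Assistant ID is needed here.
    thread = client.beta.threads.create()

    # 2. The user's question becomes a Message in the Thread.
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question
    )

    # 3. A Run ties the Thread to a specific Assistant.
    run = client.beta.threads.runs.create(
        thread_id=thread.id, assistant_id=assistant_id
    )

    # 4. Poll while the Run is still in flight.
    while run.status in ("queued", "in_progress", "cancelling"):
        time.sleep(poll_seconds)
        run = client.beta.threads.runs.retrieve(
            thread_id=thread.id, run_id=run.id
        )

    if run.status != "completed":
        raise RuntimeError(f"Run ended with status {run.status!r}")

    # 5. The Assistant's reply was appended to the Thread during the Run.
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    latest = messages.data[0]  # assumes the default newest-first ordering
    return latest.content[0].text.value


# Usage (requires an API key and an existing Assistant; the ID is a placeholder):
#   from openai import OpenAI
#   reply = ask_assistant(OpenAI(), "asst_...",
#                         "How much should I contribute to my retirement plan?")
```

Note that the Assistant ID only appears in step 3, when the Run is created; the Thread and its Messages are built without it.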

Note that when we created a thread in the last lesson, we did not specify an Assistant ID. This is because, in OpenAI's design, threads and assistants are independent of each other.

In OpenAI's API design, threads are created and managed to maintain a coherent conversation flow, while assistants provide responses and interactions within these threads.

Assistants handle specific requests, while threads focus on organizing and managing the conversation.

This means a single thread can be served by multiple assistants, and a single assistant can work across multiple threads.

This design increases system flexibility and broadens application scenarios.

For example, in a complex dialogue system, different assistants may focus on different types of tasks or questions.

One assistant might handle weather-related queries, while another handles travel advice.

Within the same conversation thread, the system can route requests to different assistants based on the user's questions.
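A rough sketch of such routing might look like the code below. Everything here is illustrative: the assistant IDs, the keyword lists, and the function names `pick_assistant` and `start_routed_run` are hypothetical, and naive substring matching is used only to keep the example short (a real system would likely use a classifier or the model itself). As before, `client` is assumed to be an `openai.OpenAI()` instance.

```python
# Hypothetical assistant IDs; real ones come from the Playground or the API.
ASSISTANTS = {
    "weather": "asst_weather_demo",
    "travel": "asst_travel_demo",
}

# Hypothetical keyword lists used for routing.
KEYWORDS = {
    "weather": ("weather", "rain", "temperature", "forecast"),
    "travel": ("trip", "flight", "hotel", "travel"),
}


def pick_assistant(question: str, default: str = "travel") -> str:
    """Return the ID of the first assistant whose keywords appear in the question."""
    text = question.lower()
    for topic, words in KEYWORDS.items():
        # Crude substring match; e.g. "train" would also match "rain".
        if any(word in text for word in words):
            return ASSISTANTS[topic]
    return ASSISTANTS[default]


def start_routed_run(client, thread_id: str, question: str):
    """Add the question to an existing Thread and run the matching Assistant on it."""
    client.beta.threads.messages.create(
        thread_id=thread_id, role="user", content=question
    )
    # The same thread_id can be paired with a different assistant_id on each Run.
    return client.beta.threads.runs.create(
        thread_id=thread_id, assistant_id=pick_assistant(question)
    )


print(pick_assistant("Will it rain in Paris tomorrow?"))
print(pick_assistant("Which hotel is close to the Louvre?"))
```

Because the Assistant is chosen per Run rather than per Thread, both questions can live in the same conversation while being answered by different assistants.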