Today's Open Source (2024-07-08): CodeGeeX4-ALL-9B, Kolors

6 AI Models Transforming Tech in 2024

Here are some interesting AI open-source models and frameworks shared today:

Project: CodeGeeX4-ALL-9B

CodeGeeX4-ALL-9B is a multilingual code generation model by Zhipu AI. Based on GLM-4-9B, it enhances code generation capabilities and supports a 128K context length. This single model supports code completion, generation, interpretation, web search, function calls, and repository-level code Q&A. It performs well on benchmarks like BigCodeBench and NaturalCodeBench, balancing inference speed and model performance.

Project: Kolors

Kolors is a large-scale text-to-image generation model by Kuaishou's Kolors team. Trained on billions of text-image pairs, it excels in visual quality, complex semantic accuracy, and text rendering in both Chinese and English. It accepts prompts in both languages and performs strongly when generating Chinese-specific content.

Project: SenseVoice

SenseVoice is a speech model by Alibaba that covers automatic speech recognition (ASR), language identification (LID), speech emotion recognition (SER), and audio event detection (AED). Trained on over 400,000 hours of data, it supports more than 50 languages and surpasses Whisper in recognition performance. The SenseVoice-Small model uses a non-autoregressive end-to-end framework and processes 10 seconds of audio in just 70 ms, 15 times faster than Whisper-Large.
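To put the quoted speed figures in perspective, here is a minimal sketch that converts them into a real-time factor (processing time divided by audio duration). The variable and function names are illustrative only, and the Whisper-Large latency is simply what the stated 15x ratio implies, not a measured value.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Return processing time divided by audio duration (lower is faster)."""
    return processing_seconds / audio_seconds


# Figures quoted in the description above.
audio_seconds = 10.0             # length of the audio clip
sensevoice_latency = 0.070       # 70 ms to process that clip
speedup_vs_whisper_large = 15.0  # stated speedup over Whisper-Large

rtf = real_time_factor(sensevoice_latency, audio_seconds)

# Assumption: the 15x claim refers to latency on the same 10-second clip.
implied_whisper_latency = sensevoice_latency * speedup_vs_whisper_large

print(f"SenseVoice-Small real-time factor: {rtf:.3f}")
print(f"Implied Whisper-Large latency: {implied_whisper_latency:.2f} s")
```

A real-time factor well below 1 means the model transcribes far faster than the audio plays, which matters most for interactive or streaming use.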

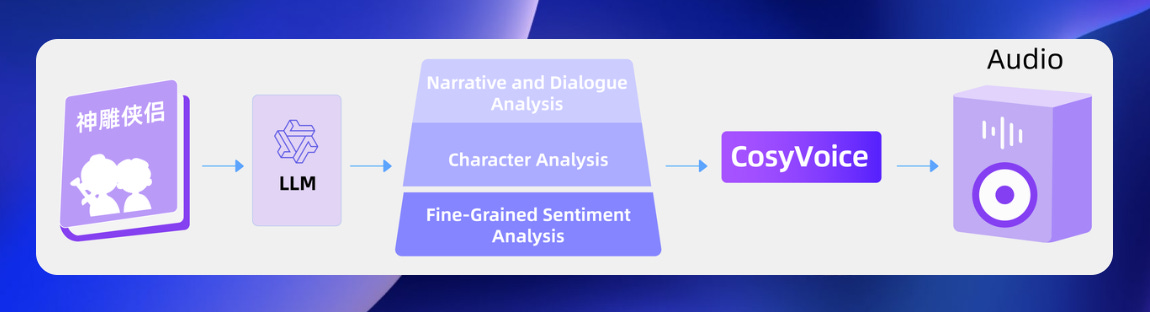

Project: CosyVoice

CosyVoice is a speech synthesis model by Alibaba's DAMO Academy. It supports natural speech generation, multiple languages, voice styles, and emotional control. Trained on over 150,000 hours of data, it supports synthesis in Chinese, English, Japanese, Cantonese, and Korean, outperforming traditional models. CosyVoice enables one-shot voice cloning with just 3-10 seconds of audio, capturing nuances like prosody and emotion. It also excels in cross-lingual voice synthesis.

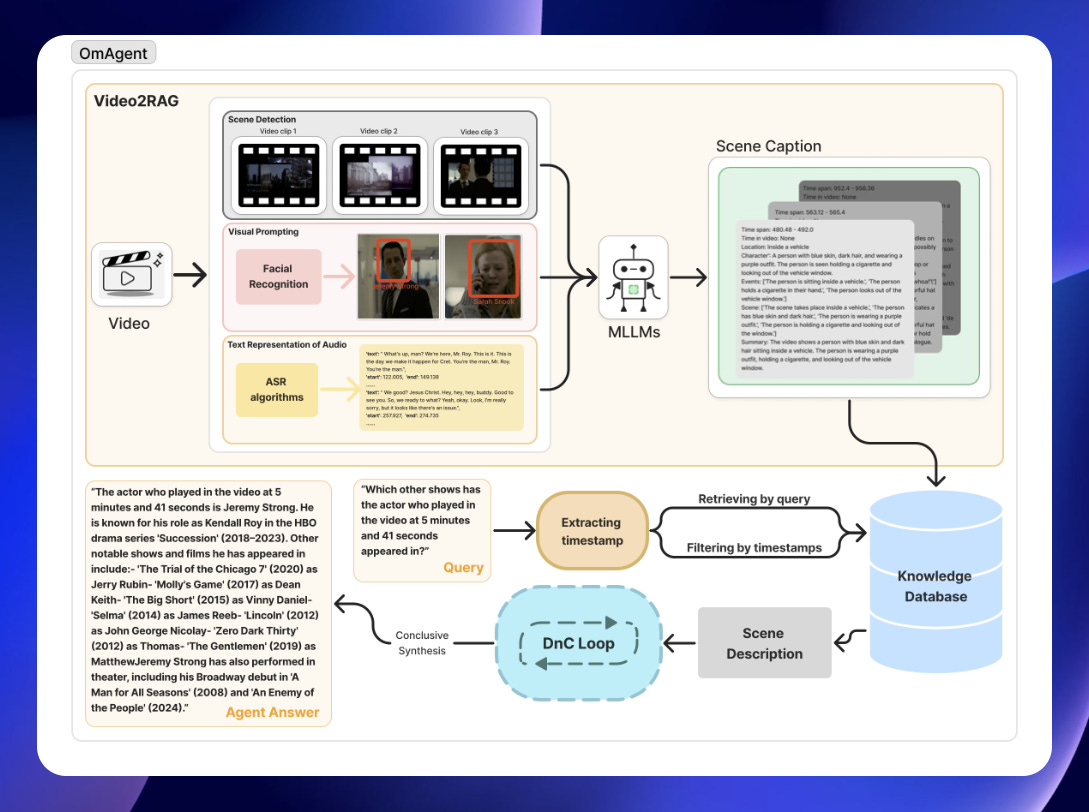

Project: OmAgent

OmAgent is a multimodal agent development project that uses multimodal large language models and other algorithms to complete tasks such as long video understanding. It includes a lightweight agent framework, omagent_core, designed for multimodal challenges.

Project: LightRAG

LightRAG is a framework for building retrieval-augmented generation (RAG) applications. It is modular and robust, and its design favors simplicity over complexity, quality over quantity, and optimization over building. It provides minimal abstractions for maximum developer customization.