Today's Open Source (2024-07-09): nanoLLaVA-1.5, 1B Parameter Edge Device Model

Discover cutting-edge AI models and frameworks like nanoLLaVA-1.5, ControlNet++, and PERSONA HUB for multimodal tasks, image editing, and synthetic data creation.

Here are some interesting AI open-source models and frameworks I discovered today:

Project: nanoLLaVA-1.5

nanoLLaVA-1.5 is a compact multimodal (vision-language) model. At about 1 billion parameters, it is light enough to run efficiently on edge devices, and it performs well on visual question answering (VQA) and OCR tasks. Its base language model is Quyen-SE-v0.1 (Qwen1.5-0.5B), and its visual encoder is google/siglip-so400m-patch14-384.

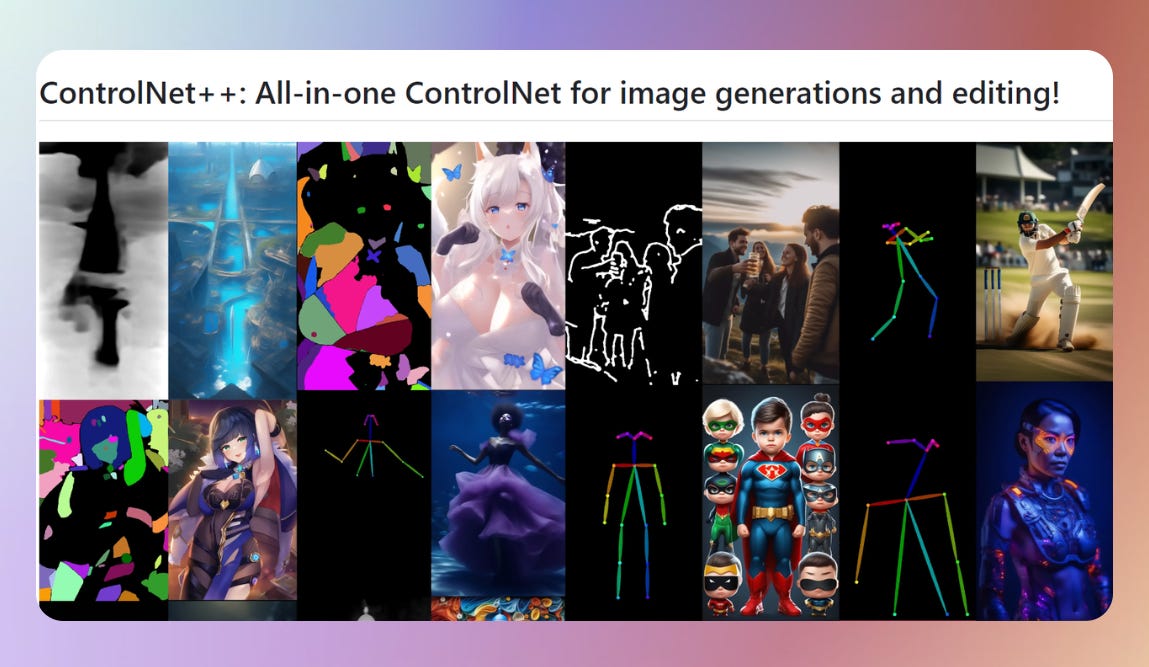
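To put the edge-device claim in concrete terms, a model's weight memory is roughly its parameter count times the bytes stored per parameter. The sketch below is a back-of-the-envelope estimate under stated assumptions: a round 1 billion parameters, and no allowance for activations, the KV cache, or runtime overhead, so real memory use will be higher.

```python
# Rough weight-memory estimate for a ~1B-parameter model.
# Assumptions: a round 1e9 parameters; activations, KV cache and
# runtime overhead are ignored, so actual usage will be higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return approximate weight storage in GiB for the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    n = 1e9  # assumed parameter count
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>10}: {weight_memory_gib(n, dtype):.2f} GiB")
```

At fp16/bf16 this comes to about 1.86 GiB of weights, and 4-bit quantization would bring it under 0.5 GiB, which is why models of this size are practical on phones and small boards.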

Project: ControlNet++

ControlNet++ is an all-in-one architecture for image generation and editing that supports more than 10 control types and produces high-resolution images that the project describes as comparable to Midjourney. It extends the original ControlNet architecture with two new modules: the first lets the same network parameters serve different image conditions, and the second supports multi-condition inputs without increasing computational load, which is crucial for detailed image editing. Experiments on SDXL show strong controllability and aesthetic quality.

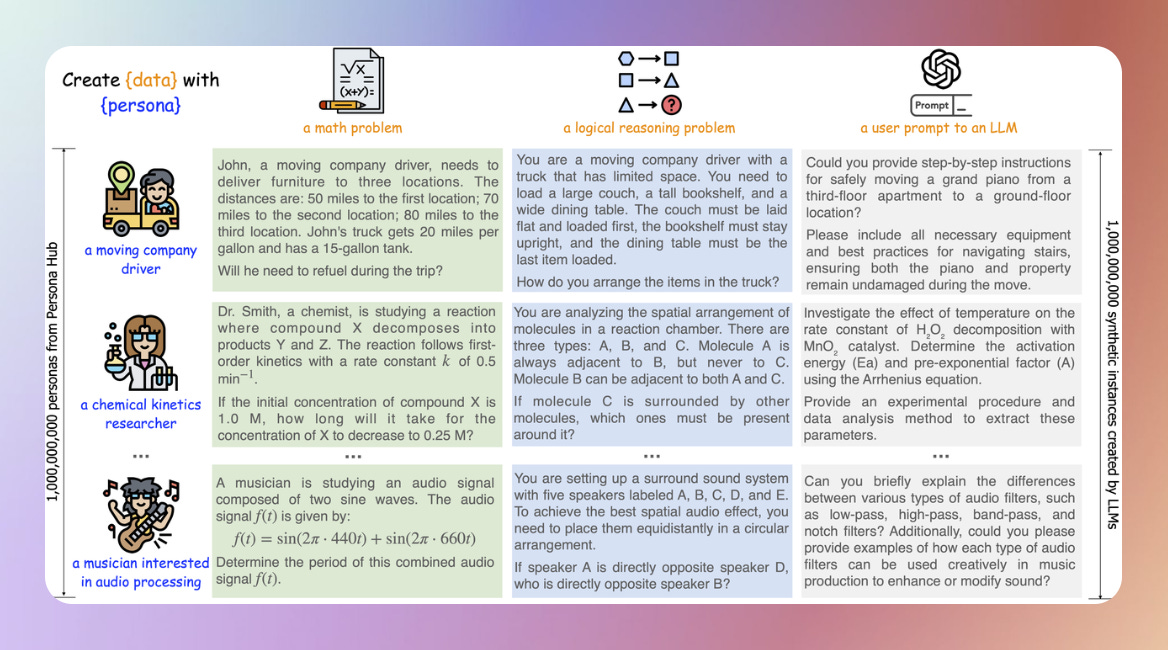

Project: PERSONA HUB

PERSONA HUB is a persona-driven data synthesis methodology: it prompts an LLM from many different perspectives (personas) to generate varied synthetic data. The project collects 1 billion personas, which serve as distributed carriers of knowledge and enable large-scale data synthesis across many scenarios. Use cases include creating high-quality math and logic problems, generating instruction-style user prompts, producing rich textual content, designing game NPCs, and developing utility functions. These capabilities suggest it could set new directions for how synthetic data is created and applied, with a potentially significant impact on LLM research and development.

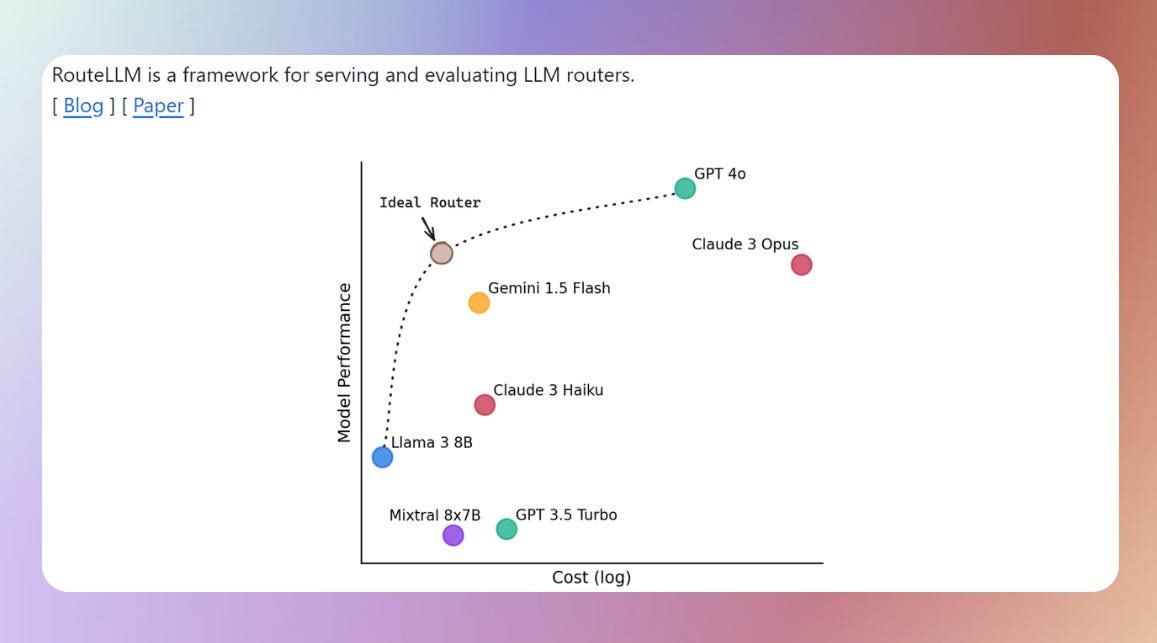
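The core mechanism, pairing one task template with many personas so the same request yields diverse outputs, can be sketched as plain prompt construction. The personas and template wording below are hypothetical examples, not entries from PERSONA HUB itself, and the step of sending each prompt to an LLM is omitted.

```python
from itertools import product

# Minimal sketch of persona-driven prompt construction. The personas and
# templates are hypothetical; each resulting prompt would be sent to an
# LLM (not shown) to produce one synthetic sample.

PERSONAS = [
    "a chemical engineer who optimizes industrial reactors",
    "a high-school music teacher",
    "a logistics coordinator at a shipping port",
]

TEMPLATES = {
    "math": "Create a challenging math problem with the following persona:\n{persona}",
    "npc": "Design a game NPC based on the following persona:\n{persona}",
}


def build_prompts(personas, templates):
    """Return (task, persona, prompt) triples, one per persona/task pair."""
    return [
        (task, persona, template.format(persona=persona))
        for persona, (task, template) in product(personas, templates.items())
    ]


if __name__ == "__main__":
    for task, persona, prompt in build_prompts(PERSONAS, TEMPLATES):
        print(f"[{task}] {prompt}\n")
```

Because the number of prompts is the product of personas and templates, scaling the persona pool is what drives the diversity and volume of the generated data.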

Project: RouteLLM

RouteLLM is an open-source framework for routing queries between models of different capability, cutting cost while maintaining answer quality: simple queries go to cheaper models without compromising the result. It provides pre-trained routers, is easy to extend with new routers, and can compare router performance across multiple benchmarks.

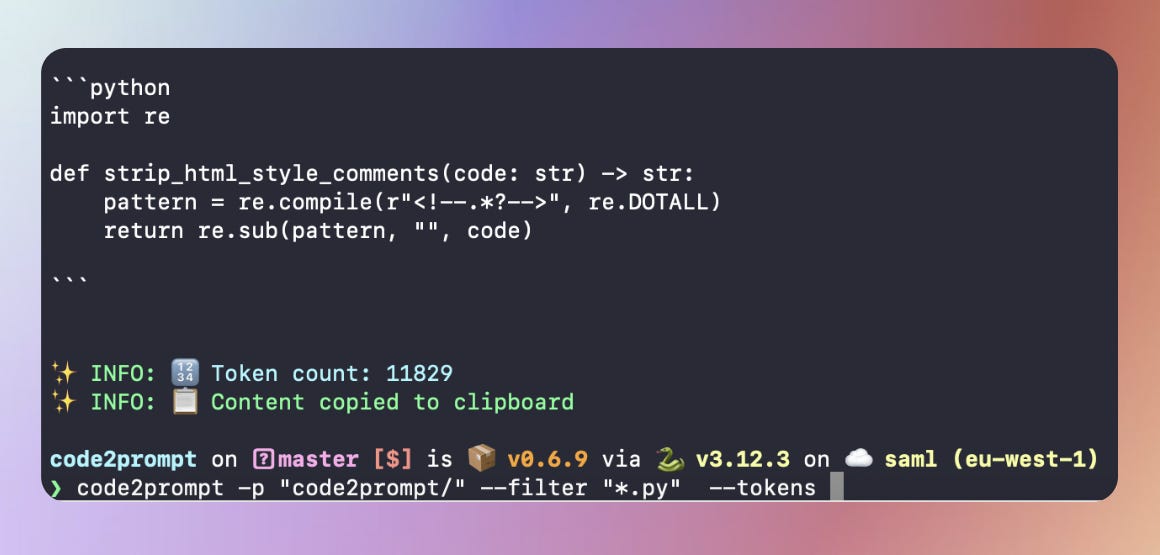
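The routing decision itself can be pictured as a score compared against a threshold. The sketch below does not use RouteLLM's API: `heuristic_score` is a hypothetical keyword-and-length stand-in for a trained router, and the model names are placeholders.

```python
from dataclasses import dataclass
from typing import Callable

# Toy threshold router (hypothetical; NOT RouteLLM's API). A scoring
# function estimates how much a query needs the strong model; queries at
# or above the threshold go to the strong model, the rest to the cheap one.

HARD_HINTS = ("prove", "derive", "optimize", "debug", "step by step")


def heuristic_score(query: str) -> float:
    """Stand-in for a trained router; returns a score in [0, 1]."""
    q = query.lower()
    hint = 0.5 if any(h in q for h in HARD_HINTS) else 0.0
    length = min(len(q.split()) / 100, 0.5)  # longer queries score higher
    return hint + length


@dataclass
class ThresholdRouter:
    strong_model: str
    weak_model: str
    threshold: float = 0.5
    score: Callable[[str], float] = heuristic_score

    def route(self, query: str) -> str:
        """Return the name of the model that should answer the query."""
        if self.score(query) >= self.threshold:
            return self.strong_model
        return self.weak_model


if __name__ == "__main__":
    router = ThresholdRouter(strong_model="strong-model", weak_model="weak-model")
    for q in ["What is 2+2?", "Prove that the square root of 2 is irrational."]:
        print(q, "->", router.route(q))
```

The threshold is the cost/quality knob: lowering it sends more traffic to the strong model, raising it saves more money at some risk to answer quality.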

Project: Code2Prompt

Code2Prompt is a command-line tool that makes it easy to give an LLM the context of an entire codebase. It generates a single Markdown file containing the codebase's content, helping developers use LLMs for code analysis, documentation generation, and code improvement.
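The basic idea, flattening a directory into one Markdown document, can be sketched in a few lines. This is not Code2Prompt's implementation or output format: the heading layout and the `EXTENSIONS` filter are assumptions, and files that themselves contain triple backticks would need a longer fence, which is not handled here.

```python
from pathlib import Path
from typing import Union

# Minimal sketch of the idea behind Code2Prompt (NOT its implementation):
# walk a directory and emit one Markdown document listing the files, then
# each file's path followed by its contents in a fenced block.

FENCE = "`" * 3  # built indirectly so this snippet nests safely in Markdown
EXTENSIONS = {".py", ".rs", ".ts", ".js", ".toml"}  # assumed file filter


def codebase_to_markdown(root: Union[str, Path], extensions=EXTENSIONS) -> str:
    """Return a Markdown string with a file list and every matching file."""
    root = Path(root)
    files = sorted(
        p for p in root.rglob("*") if p.is_file() and p.suffix in extensions
    )
    parts = [f"# Codebase: {root.name}", "", "## Files", ""]
    parts += [f"- {p.relative_to(root).as_posix()}" for p in files]
    for p in files:
        rel = p.relative_to(root).as_posix()
        lang = p.suffix.lstrip(".")
        text = p.read_text(encoding="utf-8", errors="replace")
        parts += ["", f"## {rel}", "", f"{FENCE}{lang}", text.rstrip("\n"), FENCE]
    return "\n".join(parts) + "\n"


if __name__ == "__main__":
    print(codebase_to_markdown("."))
```

The result can be pasted into a chat or passed to an API as a single prompt; a real tool would also respect `.gitignore` and watch the total token count.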

Project: Awesome Multi-Agent Papers

awesome-multi-agent-papers is a curated list of leading academic papers on multi-agent systems. It covers many aspects of the field and serves as a high-quality resource for researchers and developers.