Today's Open Source (2024-07-16): H2O-Danube3 for Smartphones

This issue covers H2O-Danube3 for smartphones, MotionClone for video generation, Cradle for game AI, and more.

Here are some interesting AI open-source models and frameworks I want to share today.

Project: H2O Danube3

H2O-Danube3 is a series of small language models consisting of H2O-Danube3-4B, trained on 6T tokens, and H2O-Danube3-500M, trained on 4T tokens. The models are pretrained on high-quality web data, mostly English, in three stages with different data mixes, and then fine-tuned to produce chat versions.

Thanks to their compact architecture, the H2O-Danube3 models can run efficiently on a modern smartphone, enabling fast local inference on the device.

https://arxiv.org/abs/2407.09276

https://huggingface.co/h2oai/h2o-danube3-500m-base

https://huggingface.co/h2oai/h2o-danube3-4b-base

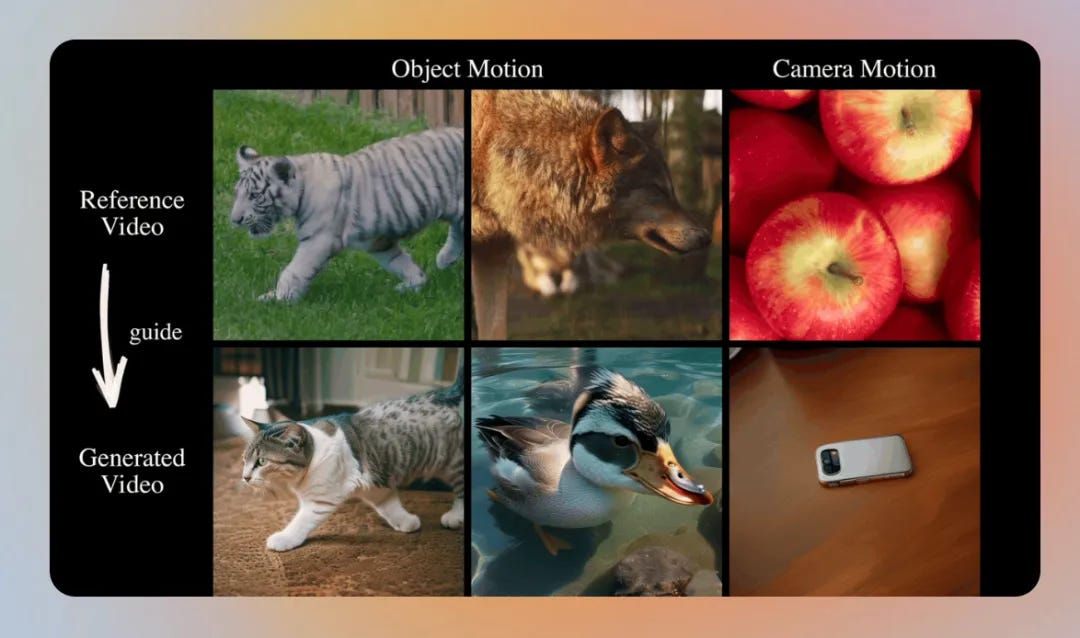

Project: MotionClone

MotionClone is a training-free framework that clones motion from a reference video to control text-to-video generation.

It uses temporal attention from video inversion to represent the motion in the reference video, and introduces primary temporal-attention guidance to reduce the influence of noisy or very subtle motion in the attention weights.

Additionally, it proposes a location-aware semantic guidance mechanism, which uses the coarse foreground location from the reference video together with the original classifier-free guidance features to guide video generation.
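For readers unfamiliar with classifier-free guidance, the standard combination rule can be sketched as follows. This is the generic formula, not MotionClone's code: the function name and the list-based inputs are assumptions for illustration, and MotionClone's additional motion and semantic guidance terms are not shown.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Combine unconditional and text-conditioned noise predictions.

    Standard rule: eps = eps_uncond + s * (eps_cond - eps_uncond).
    Inputs are plain lists of floats standing in for model outputs.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy values only; a real model would produce large tensors here.
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 3.0]
guided = classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=7.5)
```

A scale of 1 reproduces the conditioned prediction, and larger scales push the result further in the direction the text prompt suggests.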

https://arxiv.org/abs/2406.05338

https://github.com/Bujiazi/MotionClone

Project: Cradle

Cradle, developed by Kunlun Tech (Kunlun Wanwei) and the Beijing Academy of Artificial Intelligence (BAAI), is an open-source AI framework that can play various commercial games and operate software applications.

This general computer control framework lets AI agents operate the keyboard and mouse as a human would, without relying on any internal APIs, so they can in principle interact with any software.

It provides strong reasoning, self-improvement, and skill management in a standardized general environment, so agents can take on arbitrary computer tasks with minimal requirements on the environment.

https://arxiv.org/abs/2403.03186

https://github.com/BAAI-Agents/Cradle

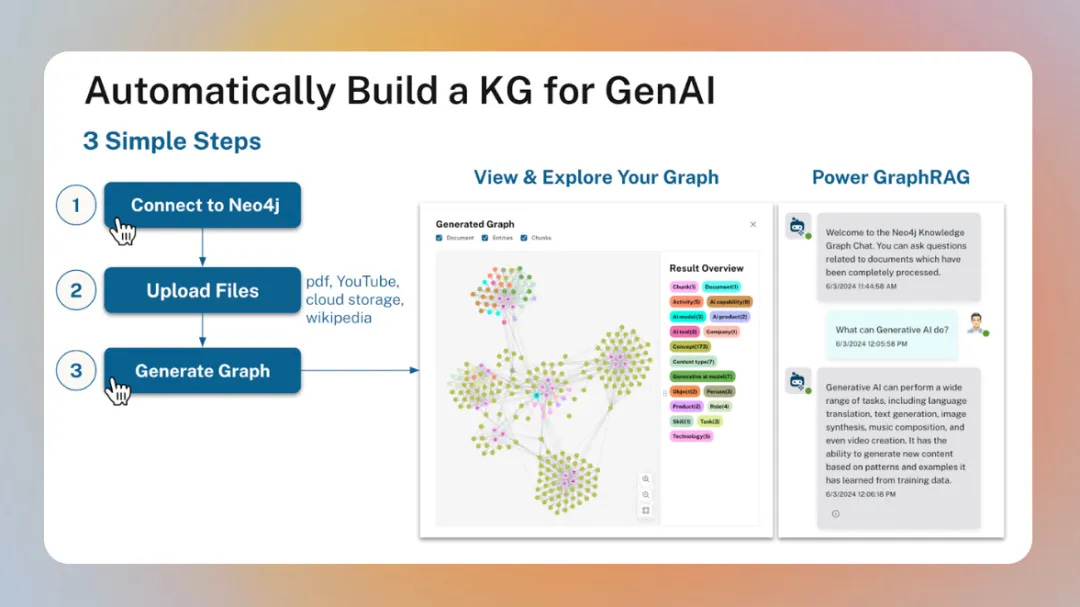

Project: LLM Graph Builder

LLM Graph Builder by Neo4j is an open-source tool that builds knowledge graphs from unstructured data such as PDFs, documents, text, YouTube videos, and web pages, and stores them in Neo4j.

It uses large models and services such as OpenAI, Gemini, Llama3, Diffbot, Claude, and Qwen to extract nodes, relationships, and their attributes from the unstructured data.
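To make "nodes, relationships, and their attributes" concrete, here is a minimal sketch of what an extraction result might look like and how it could be turned into Cypher statements. The `Node` and `Relationship` classes, the `to_cypher` helper, and the sample labels are all hypothetical and do not reflect the project's actual schema or code; no database connection is made.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    label: str
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    source: str  # id of the start node
    target: str  # id of the end node
    type: str


def to_cypher(nodes, relationships):
    """Return (statement, parameters) pairs that would upsert the graph.

    Labels and relationship types cannot be Cypher parameters, so they are
    interpolated into the text; validate them first in real code.
    """
    statements = []
    for n in nodes:
        statements.append((
            f"MERGE (n:{n.label} {{id: $id}}) SET n += $props",
            {"id": n.id, "props": n.properties},
        ))
    for r in relationships:
        statements.append((
            f"MATCH (a {{id: $src}}), (b {{id: $dst}}) MERGE (a)-[:{r.type}]->(b)",
            {"src": r.source, "dst": r.target},
        ))
    return statements


# Hypothetical output an LLM might extract from the sentence above.
nodes = [
    Node("neo4j", "Company", {"name": "Neo4j"}),
    Node("llm-graph-builder", "Project", {"name": "LLM Graph Builder"}),
]
relationships = [Relationship("neo4j", "llm-graph-builder", "DEVELOPS")]
statements = to_cypher(nodes, relationships)
```

Using `MERGE` rather than `CREATE` keeps the sketch idempotent, so processing the same document twice would not duplicate nodes.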

https://github.com/neo4j-labs/llm-graph-builder

Project: mllm

mllm is a fast and lightweight multimodal large language model inference engine designed for mobile and edge devices.

It is implemented in pure C/C++ with no dependencies, supports ARM NEON and x86 AVX2, and offers 4-bit and 6-bit integer quantization.
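As a rough illustration of what 4-bit integer quantization means, the sketch below stores each block of weights as one float scale plus small integers in the range [-8, 7]. It is a generic symmetric per-block scheme written for this post; the function names and the block size are assumptions, and mllm's actual formats may differ.

```python
def quantize_4bit(values, block_size=32):
    """Symmetric per-block quantization to signed 4-bit integers in [-8, 7]."""
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        max_abs = max(abs(v) for v in block)
        # Map the largest magnitude in the block to 7; avoid dividing by zero.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        q = [max(-8, min(7, round(v / scale))) for v in block]
        blocks.append((scale, q))
    return blocks


def dequantize_4bit(blocks):
    """Recover approximate floats from (scale, integers) blocks."""
    out = []
    for scale, q in blocks:
        out.extend(scale * x for x in q)
    return out


# Toy weights; real models quantize millions of values this way.
weights = [0.12, -0.5, 0.33, 0.07, -0.21, 0.9, -0.64, 0.0]
blocks = quantize_4bit(weights, block_size=4)
restored = dequantize_4bit(blocks)
```

Each restored value differs from the original by at most half of its block's scale, which is the price paid for storing 4 bits per weight instead of 16 or 32.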

mllm enables on-device use cases such as smart personal assistants, text-based image retrieval, and screen visual question answering, keeping user data on the device for privacy.

https://github.com/UbiquitousLearning/mllm

Project: Embodied AI Paper List

Embodied AI Paper List is a curated collection of excellent papers and reviews on Embodied AI.

Maintained by the HCPLab team at Sun Yat-sen University, this project aims to compile and share the latest Embodied AI research and resources.

It includes papers and books from various subfields such as multimodal large models, vision-language-action models, and general robot learning, helping researchers stay updated with the field's advancements.

https://github.com/HCPLab-SYSU/Embodied_AI_Paper_List

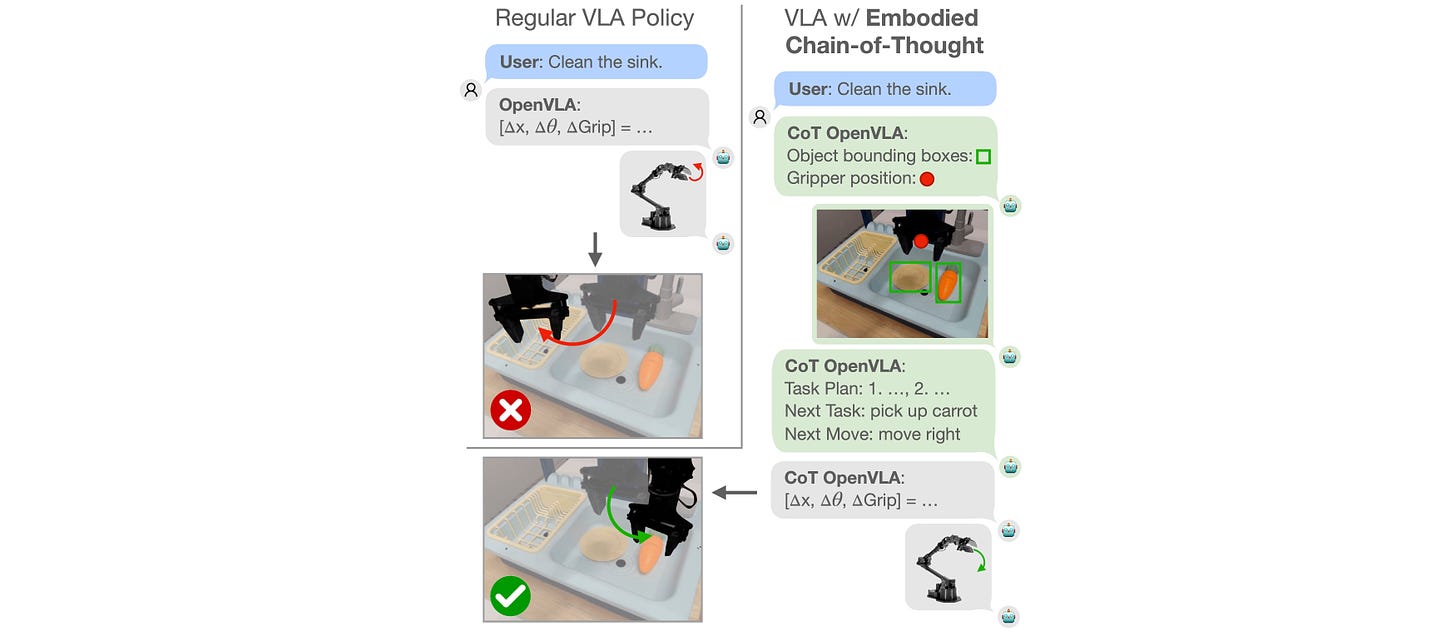

Project: embodied-CoT

Embodied Chain of Thought (ECoT) is a novel method for training robot policies.

This method trains vision-language-action models to generate reasoning steps in response to instructions and images before selecting robot actions, improving performance, interpretability, and generalization.

The project code is based on OpenVLA and provides detailed documentation on code and dependencies.