Today’s Open Source (2024-07-22): RWKV-6-World 14B Supports 100+ Languages and Code

Explore cutting-edge AI open-source models like RWKV-6-World, xLAM, and Athene-70B. Discover innovative solutions for language processing and virtual dressing!

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: RWKV/RWKV-6-World 14B

The RWKV Foundation has released the RWKV-6-World 14B model, which it presents as the strongest dense, pure-RNN large language model to date.

In recent benchmark tests, the English performance of RWKV-6-World 14B is comparable to that of Llama 2 13B.

Additionally, among models of similar parameter count, RWKV-6-World 14B has the strongest multilingual performance, supporting over 100 languages as well as code.

https://huggingface.co/BlinkDL/rwkv-6-world

Project: xLAM

xLAM, developed by Salesforce, is a series of large language models focused on function calling, available in 1B and 7B parameter sizes.

These models aim to enhance decision-making by translating user intent into executable actions, which makes them suitable for automation workflows in various fields.

Optimized for efficient deployment on personal devices, they support offline functionality and enhanced privacy.

https://huggingface.co/Salesforce/xLAM-7b-fc-r

https://huggingface.co/Salesforce/xLAM-1b-fc-r
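To make "translating user intent into executable actions" concrete, here is a minimal sketch of the application side of function calling. It assumes the model returns a JSON list of calls with `name` and `arguments` fields; that format, the `TOOLS` registry, and the two tool functions are hypothetical illustrations, not xLAM's documented interface.

```python
import json

# Hypothetical tools the application exposes (illustrative only, not part of xLAM).
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"Weather in {city}: 21 degrees {unit}"

def add(a: float, b: float) -> float:
    return a + b

TOOLS = {"get_weather": get_weather, "add": add}

def dispatch(model_output: str) -> list:
    """Parse a JSON list of tool calls and run each one against the registry."""
    calls = json.loads(model_output)
    results = []
    for call in calls:
        name = call["name"]
        if name not in TOOLS:
            # Refuse anything the application did not explicitly register.
            raise ValueError(f"unknown tool: {name}")
        results.append(TOOLS[name](**call.get("arguments", {})))
    return results

# Stand-in for text a function-calling model might emit (assumed format).
model_output = (
    '[{"name": "get_weather", "arguments": {"city": "Paris"}},'
    ' {"name": "add", "arguments": {"a": 2, "b": 3}}]'
)
print(dispatch(model_output))
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose, which matters when the output drives real automation.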

Project: Athene-70B

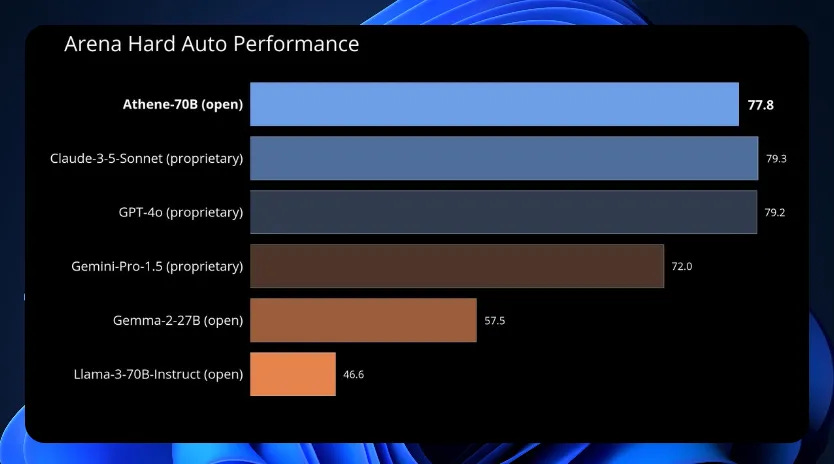

Athene-70B is a large language model based on Llama-3-70B-Instruct and further trained with Reinforcement Learning from Human Feedback (RLHF).

Developed by the Nexusflow team, it scored highly on Arena-Hard-Auto, a benchmark associated with Chatbot Arena, and is well suited to dialogue generation tasks.

https://huggingface.co/Nexusflow/Athene-70B

Project: IMAGDressing

IMAGDressing is an interactive modular apparel generation project for virtual dressing.

It uses a garment UNet that combines semantic features from CLIP with texture features from a VAE, giving users control over editing.

The project also released the IGPair dataset, which contains over 300,000 pairs of garment images and images of those garments being worn, and established a standard data assembly pipeline.

IMAGDressing can be combined with extensions like ControlNet, IP-Adapter, T2I-Adapter, and AnimateDiff to enhance diversity and controllability.

https://arxiv.org/abs/2407.12705

https://github.com/muzishen/imagdressing

Project: Tricycle

Tricycle is a fast, from-scratch deep learning library using Python and Numpy.

It can train a GPT-2 (124M) model on a GPU, processing 2.3 billion tokens in less than three days.

Tricycle aims to help anyone with some Python experience understand deep learning, providing a complete implementation from an autograd engine to GPU support.

https://github.com/bclarkson-code/Tricycle
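For readers wondering what an "autograd engine" involves, the following is a minimal sketch of reverse-mode automatic differentiation. It uses plain Python scalars for brevity; the `Value` class and its methods are hypothetical and are not Tricycle's API, which is described above as built on NumPy.

```python
class Value:
    """A scalar that remembers how it was computed (illustrative, not Tricycle's class)."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes this node's grad to its parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph so each node runs after all of its consumers.
        order, seen = [], set()

        def visit(v):
            if id(v) in seen:
                return
            seen.add(id(v))
            for p in v._parents:
                visit(p)
            order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()


x = Value(3.0)
w = Value(-2.0)
y = x * w + x  # y = x*w + x
y.backward()
print(x.grad, w.grad)  # dy/dx = w + 1 = -1.0, dy/dw = x = 3.0
```

Gradients are accumulated with `+=` because `x` feeds into the result along two paths, and the chain rule sums the contributions from each.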

Project: Metron

Metron is a tool for benchmarking LLM inference systems. It offers various evaluation methods and resource versions, and it integrates with multiple open-source systems and public APIs, helping developers and researchers assess and optimize LLM performance.

https://arxiv.org/abs/2407.07000

https://github.com/project-metron/metron
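As a rough idea of what benchmarking an inference system can measure, the sketch below times a stream of tokens and reports a few common latency figures. This is a generic illustration, not Metron's interface or its metric definitions; `measure_stream`, `fake_model`, and the reported field names are all hypothetical.

```python
import time
from statistics import mean

def measure_stream(token_stream, clock=time.perf_counter):
    """Consume a token iterator and record latency figures for one request."""
    start = clock()
    arrivals = []
    for _ in token_stream:
        arrivals.append(clock())  # timestamp each token as it arrives
    if not arrivals:
        raise ValueError("stream produced no tokens")
    gaps = [b - a for a, b in zip(arrivals, arrivals[1:])]
    total = arrivals[-1] - start
    return {
        "tokens": len(arrivals),
        "time_to_first_token_s": arrivals[0] - start,
        "mean_inter_token_s": mean(gaps) if gaps else 0.0,
        "max_inter_token_s": max(gaps) if gaps else 0.0,
        "tokens_per_second": len(arrivals) / total if total > 0 else float("inf"),
    }

def fake_model(n_tokens, delay_s):
    """Hypothetical stand-in for a streaming LLM endpoint."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"

report = measure_stream(fake_model(5, 0.01))
print(report)
```

Passing the clock in as a parameter keeps the measurement logic testable with fixed timestamps, independent of real wall-clock timing.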

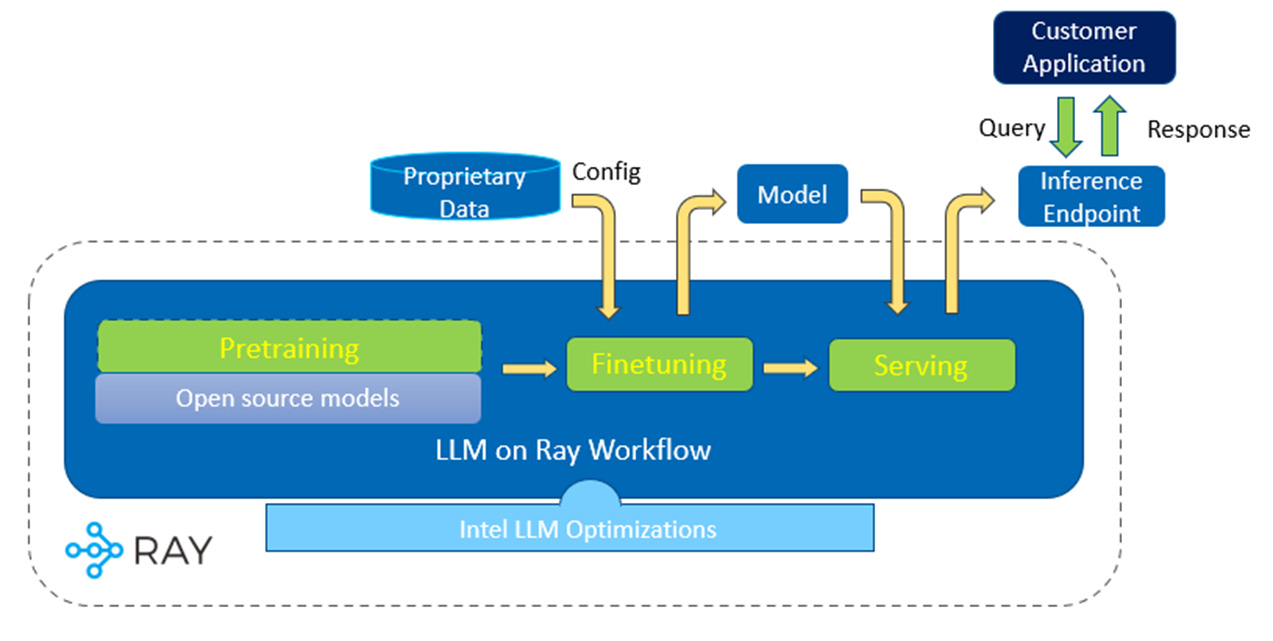

Project: LLM-on-Ray

LLM-on-Ray is a comprehensive solution designed to help users build, customize, and deploy LLMs on Intel platforms using the Ray framework. Whether starting from scratch with pretraining, fine-tuning an existing model, or deploying a production-ready LLM endpoint service, this project simplifies complex processes into manageable steps. LLM-on-Ray leverages Ray's distributed computing capabilities, ensuring efficient fault tolerance and cluster resource management, making LLM projects more reliable and scalable.