Today's Open Source (2024-08-08): Llama-3.1 Model for Parallel Function Execution

Discover top open-source AI models and frameworks like Functionary V3.2, Lumina-mGPT, CatVTON, LLM2Vec, RAGFoundry, and JamAIBase. Boost your projects today!

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: Functionary/Functionary V3.1 & V3.2

Functionary has released open-source V3.1 and V3.2, function-calling models built on Llama-3.1. V3.1 comes in small and medium sizes, while V3.2 is currently available only in a small version.

In benchmarks, functionary-small-v3.2 slightly outperforms functionary-small-v3.1 and is stronger than all previous versions.

Functionary, developed by the MeetKai team, is a language model designed to interpret and execute functions/plugins. It excels at tool calling: it can decide whether functions should run in parallel or serially, understand their outputs, and trigger functions only when necessary.
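With parallel function calling, the model can emit several tool calls in a single turn, and the client application then executes them and returns the results. The sketch below covers only that client side and assumes the OpenAI chat-completions `tool_calls` format (Functionary ships an OpenAI-compatible server). The tools `get_current_weather` and `get_local_time` are hypothetical stubs, and no Functionary code is called.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

# Hypothetical local tools; a real application would call actual services here.
def get_current_weather(location: str) -> Dict[str, Any]:
    return {"location": location, "temperature_c": 22}  # stubbed value

def get_local_time(city: str) -> Dict[str, Any]:
    return {"city": city, "time": "12:00"}  # stubbed value

TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_current_weather": get_current_weather,
    "get_local_time": get_local_time,
}

def run_one(call: Dict[str, Any]) -> Dict[str, str]:
    """Execute one OpenAI-style tool call and wrap its result as a 'tool' message."""
    fn = TOOLS[call["function"]["name"]]
    args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
    return {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(fn(**args))}

def run_tool_calls(tool_calls: List[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Run all tool calls from one assistant turn concurrently; results keep call order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(run_one, tool_calls))

if __name__ == "__main__":
    # Two independent calls a model might emit in the same turn.
    calls = [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_current_weather", "arguments": '{"location": "Hanoi"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_local_time", "arguments": '{"city": "Istanbul"}'}},
    ]
    for message in run_tool_calls(calls):
        print(message)
```

The resulting `tool` messages would be appended to the conversation so the model can read the outputs and write its final answer. Calls that depend on each other's results would instead be run serially across separate turns.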

https://github.com/MeetKai/functionary

https://huggingface.co/meetkai/functionary-small-v3.2

https://huggingface.co/meetkai/functionary-small-v3.1

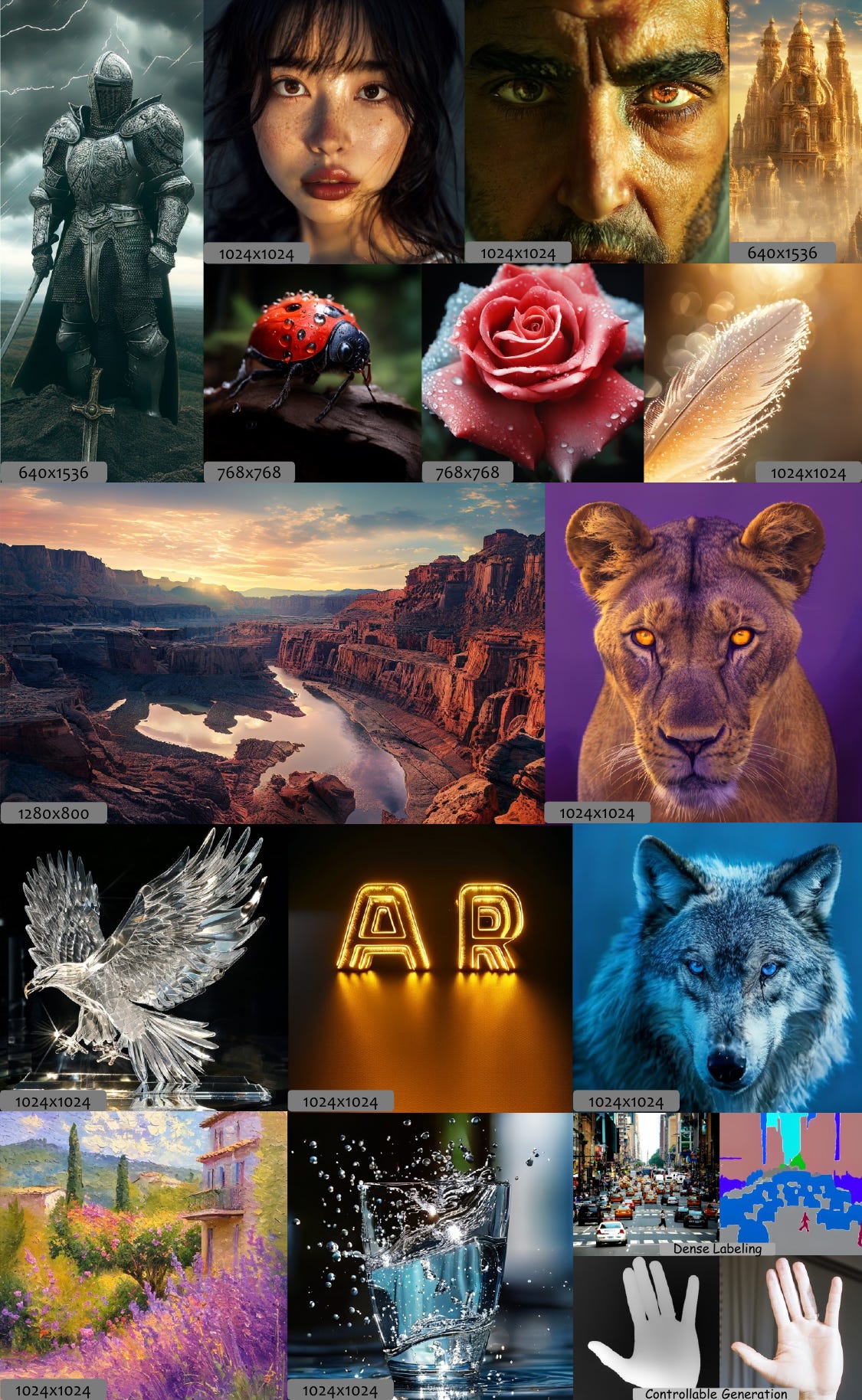

Project: Lumina-mGPT

Lumina-mGPT, released by Shanghai AI Lab and others, is a series of multimodal autoregressive models. It can perform various visual and language tasks and is particularly good at generating flexible, realistic images from text descriptions.

This project relies on the xllmx module, which evolved from LLaMA2-Accessory to support multimodal tasks centered around large language models.
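Autoregressive image generation, as in the multimodal models described above, can be pictured as producing an image as a grid of discrete tokens, one token at a time, with each token conditioned on the text prompt and on every token generated so far. The toy sketch below shows only that decoding loop. `toy_logits` is a hypothetical stand-in for the transformer, greedy selection replaces the sampling a real model would use, and Lumina-mGPT's actual image tokenizer and decoding settings are not reproduced.

```python
from typing import Callable, List

def generate_image_tokens(
    prompt_tokens: List[int],
    height: int,
    width: int,
    next_token_logits: Callable[[List[int]], List[float]],
) -> List[List[int]]:
    """Greedily generate a height x width grid of image-token ids in raster order."""
    seq = list(prompt_tokens)
    image: List[int] = []
    for _ in range(height * width):
        logits = next_token_logits(seq)  # model scores every codebook entry
        token = max(range(len(logits)), key=logits.__getitem__)  # greedy pick
        seq.append(token)  # later tokens are conditioned on this one
        image.append(token)
    # Reshape the flat raster-order sequence back into rows.
    return [image[r * width:(r + 1) * width] for r in range(height)]

def toy_logits(seq: List[int], vocab_size: int = 16) -> List[float]:
    # Hypothetical "model": always prefers (last token + 1) mod vocab_size.
    target = (seq[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]

if __name__ == "__main__":
    print(generate_image_tokens([3], height=2, width=3, next_token_logits=toy_logits))
```

In a real system, an image decoder would then map the token grid back to pixels.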

https://github.com/Alpha-VLLM/Lumina-mGPT

https://arxiv.org/pdf/2408.02657

Project: CatVTON

CatVTON is an efficient virtual try-on diffusion model released by Sun Yat-sen University and Pixocial. It features a lightweight network (899.06M parameters in total), parameter-efficient training (49.57M trainable parameters), and simplified inference (under 8 GB of GPU memory at 1024x768 resolution).
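To put the two parameter counts in perspective, the share of weights that training actually updates follows directly from them:

$$
\frac{49.57\,\text{M trainable}}{899.06\,\text{M total}} \approx 0.0551 \approx 5.5\%
$$

In other words, roughly one parameter in eighteen is trained and the rest stay frozen.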

https://github.com/zheng-chong/catvton

https://arxiv.org/abs/2407.15886

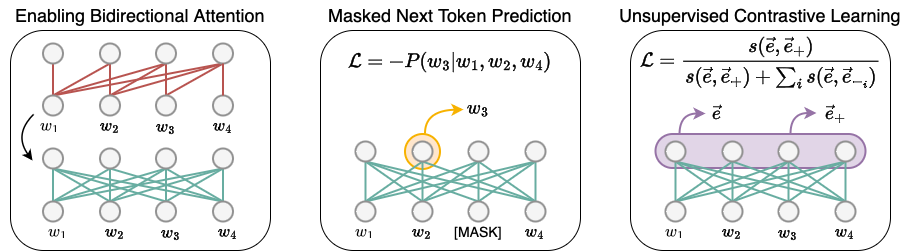

Project: LLM2Vec

LLM2Vec is a simple recipe for turning a decoder-only large language model into a text encoder. It has three steps: enabling bidirectional attention, training with masked next token prediction, and unsupervised contrastive learning. The resulting model can be fine-tuned further to achieve state-of-the-art performance.
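The first two steps can be illustrated with plain lists. Step 1 replaces the decoder's causal attention mask with a fully bidirectional one. Step 2 (masked next token prediction) masks some input tokens and predicts each one from the model's output at the preceding position, which matches how a decoder was originally pretrained. This is a toy sketch of the data layout only: the function names and token ids are hypothetical, step 3 and the model itself are not shown, and it is not the `llm2vec` library's API.

```python
from typing import Dict, List, Tuple

def causal_mask(n: int) -> List[List[int]]:
    # Decoder-only default: token i may attend only to tokens 0..i.
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> List[List[int]]:
    # Step 1: every token may attend to every other token.
    return [[1] * n for _ in range(n)]

def mntp_targets(
    tokens: List[int], masked: List[int], mask_id: int
) -> Tuple[List[int], Dict[int, int]]:
    """Step 2: mask chosen positions and map each prediction to the previous position.

    Returns the masked input ids and a dict {output_position: original_token},
    where the output at position i - 1 must predict the token masked at i.
    """
    masked_set = set(masked)
    inputs = [mask_id if i in masked_set else t for i, t in enumerate(tokens)]
    # Position 0 has no preceding output, so it cannot be a target.
    targets = {i - 1: tokens[i] for i in sorted(masked_set) if i > 0}
    return inputs, targets

if __name__ == "__main__":
    print(causal_mask(3))
    print(mntp_targets([10, 11, 12, 13], masked=[2], mask_id=0))
```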

In Q&A systems, LLM2Vec can encode questions and documents as embeddings, and comparing those embeddings quickly finds the most relevant answer passages.
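For the Q&A use case, retrieval typically comes down to ranking passages by cosine similarity between the question embedding and each passage embedding. The sketch below assumes the embeddings have already been produced by an encoder such as LLM2Vec. The two-dimensional vectors and passage ids are made-up placeholders; real embeddings have far more dimensions.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_passages(
    query_vec: List[float], passage_vecs: Dict[str, List[float]], top_k: int = 1
) -> List[str]:
    """Return the ids of the top_k passages most similar to the query."""
    ranked = sorted(
        passage_vecs.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True
    )
    return [pid for pid, _ in ranked[:top_k]]

if __name__ == "__main__":
    # Placeholder embeddings standing in for encoder output.
    passages = {"passage_a": [0.9, 0.1], "passage_b": [0.0, 1.0]}
    print(rank_passages([1.0, 0.0], passages, top_k=2))
```

At scale, the passage embeddings would be precomputed once and stored in a vector index instead of being scanned linearly.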

https://github.com/mcgill-nlp/llm2vec

Project: RAGFoundry

RAG Foundry is a library for improving LLM performance on RAG (Retrieval-Augmented Generation) tasks through fine-tuning. It helps create specialized RAG training datasets and train models on them with parameter-efficient fine-tuning (PEFT) techniques.

RAG Foundry offers modular functions for data selection and filtering, processing, retrieval, ranking, query operations, prompt generation, training, inference, output processing, and evaluation. It supports rapid prototyping and experimentation.
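The modular design can be pictured as a chain of small steps, each taking and returning a list of examples. The sketch below is a hypothetical illustration of that idea in plain Python: a filtering step, a toy word-overlap retriever, and a prompt-generation step. It does not reproduce RAG Foundry's actual interfaces or configuration, and all names in it are assumptions.

```python
import re
from typing import Callable, Dict, List

Example = Dict[str, object]
Step = Callable[[List[Example]], List[Example]]

def words(text: str) -> set:
    # Lowercase word set, ignoring punctuation.
    return set(re.findall(r"\w+", text.lower()))

def filter_step(examples: List[Example]) -> List[Example]:
    # Data selection/filtering: drop examples without a gold answer.
    return [ex for ex in examples if ex.get("answer")]

def make_retrieve_step(corpus: List[str], k: int = 1) -> Step:
    def retrieve(examples: List[Example]) -> List[Example]:
        out = []
        for ex in examples:
            q = words(str(ex["question"]))
            # Toy retriever: rank passages by word overlap with the question.
            ranked = sorted(corpus, key=lambda p: len(q & words(p)), reverse=True)
            out.append({**ex, "docs": ranked[:k]})
        return out
    return retrieve

def prompt_step(examples: List[Example]) -> List[Example]:
    # Prompt generation: put the retrieved context in front of the question.
    out = []
    for ex in examples:
        context = "\n".join(ex["docs"])
        prompt = f"Context:\n{context}\n\nQuestion: {ex['question']}\nAnswer:"
        out.append({**ex, "prompt": prompt})
    return out

def run_pipeline(examples: List[Example], steps: List[Step]) -> List[Example]:
    for step in steps:
        examples = step(examples)
    return examples

if __name__ == "__main__":
    corpus = ["Paris is the capital of France.", "Berlin is the capital of Germany."]
    data = [
        {"question": "What is the capital of France?", "answer": "Paris"},
        {"question": "Unanswered question?", "answer": ""},
    ]
    steps = [filter_step, make_retrieve_step(corpus, k=1), prompt_step]
    for ex in run_pipeline(data, steps):
        print(ex["prompt"])
```

The resulting `prompt`/`answer` pairs are the kind of RAG-augmented examples a PEFT training step would consume. Because each step shares the same signature, steps can be swapped or reordered for quick experiments.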

https://github.com/IntelLabs/RAGFoundry

https://arxiv.org/abs/2408.02545

Project: JamAIBase

JamAIBase is an open-source backend platform for RAG (Retrieval-Augmented Generation) that places powerful generative models at the core of the database. It lets developers easily build AI agents, enhance applications with generative tables, and create rich user-interface experiences.
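A generative table can be thought of as a table in which some columns are filled by a language model, using a prompt built from other columns in the same row. The class below is a hypothetical sketch of that idea. `GenerativeTable`, the `{column}` template syntax, and the stub model are assumptions for illustration and are not JamAIBase's API.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]

class GenerativeTable:
    """Toy table where some columns are computed by a language model."""

    def __init__(self, llm: Callable[[str], str], gen_columns: Dict[str, str]):
        self.llm = llm                  # any prompt -> text function
        self.gen_columns = gen_columns  # column name -> prompt template
        self.rows: List[Row] = []

    def add_row(self, values: Row) -> Row:
        row = dict(values)
        # Fill generated columns in declaration order, so a later template
        # can reference an earlier generated column.
        for column, template in self.gen_columns.items():
            row[column] = self.llm(template.format(**row))
        self.rows.append(row)
        return row

def fake_llm(prompt: str) -> str:
    # Stub model for the example: just upper-cases the prompt.
    return prompt.upper()

if __name__ == "__main__":
    table = GenerativeTable(fake_llm, {"summary": "Summarize: {review}"})
    print(table.add_row({"review": "great product"}))
```

Swapping `fake_llm` for a real model call turns each inserted row into an LLM-driven computation, which is the idea behind building agent-style workflows out of table columns.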