Today's Open Source (2024-08-19): DeepSeek Prover with AlphaGo-Style Reinforcement Learning

Explore the latest AI open-source models like DeepSeek-Prover-V1.5, NVIDIA’s Minitron 4B, and more for theorem proving, controlled generation, and multilingual dubbing.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: DeepSeek-Prover-V1.5

DeepSeek-Prover-V1.5 is an open-source mathematical theorem-proving model from DeepSeek. It introduces a reinforcement learning system similar to AlphaGo, specifically designed for theorem proving in Lean 4. This model builds upon DeepSeek-Prover-V1, enhancing it through optimized training and inference.

The model is pre-trained on DeepSeekMath-Base and fine-tuned on an improved formal theorem-proving dataset. Further improvements are achieved through reinforcement learning from proof assistant feedback (RLPAF).

https://github.com/deepseek-ai/DeepSeek-Prover-V1.5

https://huggingface.co/deepseek-ai/DeepSeek-Prover-V1.5-Base

https://arxiv.org/abs/2408.08152

Project: Minitron/Llama-3.1-Minitron 4B

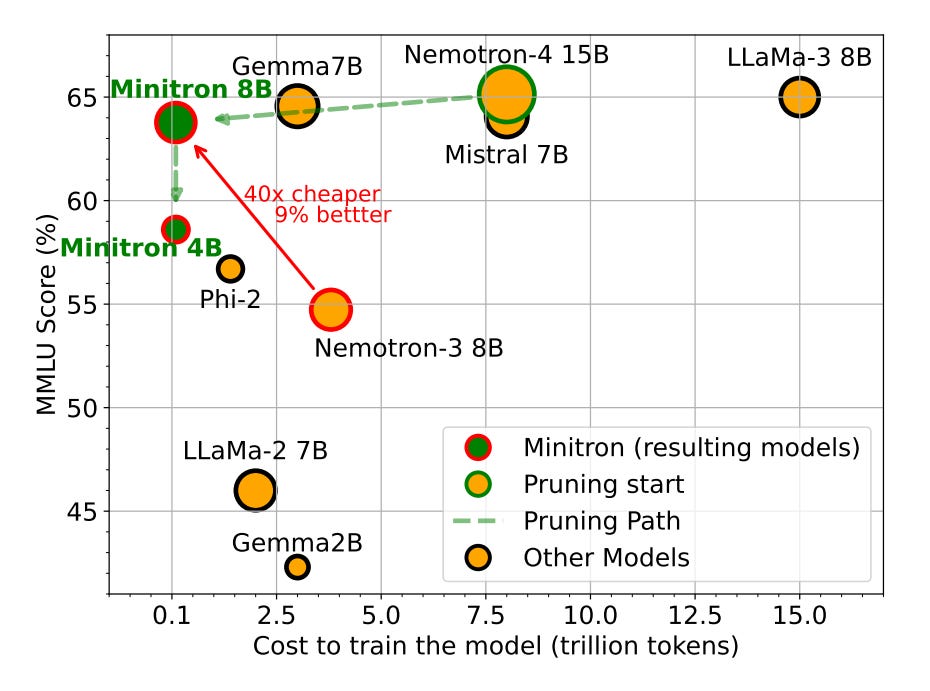

NVIDIA’s latest open-source model, Llama-3.1-Minitron 4B, is part of the Minitron series. It’s trained using pruning and distillation methods on the Llama 3.1 8B model.

Llama-3.1-Minitron 4B outperforms other state-of-the-art open-source models of similar size, including Minitron 4B, Phi-2 2.7B, Gemma2 2.6B, and Qwen2-1.5B. Minitron is a series of small language models (SLMs) derived from NVIDIA’s Nemotron-4 15B model through pruning.

First, the model’s embedding size, attention heads, and MLP intermediate dimensions were pruned. Then, the model was distilled to produce Minitron 8B and 4B.

https://huggingface.co/nvidia/Minitron-4B-Base

https://huggingface.co/nvidia/Minitron-8B-Base

https://huggingface.co/nvidia/Llama-3.1-Minitron-4B-Width-Base

Project: ControlNeXt

ControlNeXt is a project for controlled image and video generation, supporting various types of control inputs.

This project introduces a new method that reduces training parameters by 90% compared to ControlNet, achieving faster convergence and excellent efficiency.

This method can be combined directly with other LoRA techniques to change styles and ensure more stable outputs. The project is still under active development, and the code and models may be updated anytime.

https://github.com/dvlab-research/controlnext

https://arxiv.org/abs/2408.06070

Project: nano-GraphRAG

nano-GraphRAG is a simple, easy-to-modify implementation of GraphRAG. It offers a smaller, faster-running version while retaining core functionalities.

The project consists of about 800 lines of code, supports asynchronous operations, and is fully typed. Users can perform global and local graph searches with simple Python code, with support for incremental insertion and custom storage.

https://github.com/gusye1234/nano-graphrag

Project: Linly-Dubbing

Linly-Dubbing is an AI-powered tool for multilingual video dubbing and translation, inspired by YouDub-webui and further expanded and optimized.

By integrating Linly-Talker’s digital lip-sync technology, it offers a variety of high-quality dubbing options.

https://github.com/Kedreamix/Linly-Dubbing

Project: moffee

moffee is an open-source tool for creating PPTs from Markdown documents, converting them into clean, professional slides. moffee handles layout, pagination, and styling, allowing users to focus on content creation.

It uses simple syntax to organize and style content, providing a real-time web interface where users can update slides as they type, start presentations, or export to PDF.