Today's Open Source (2024-09-06): MiniCPM3-4B by OpenBMB, Outperforms GPT-3.5-Turbo

Discover top open-source AI projects like MiniCPM3-4B, SoulChat2.0, FluxMusic, and more. Explore cutting-edge models for AI art, text-to-music, and psychological counseling.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: MiniCPM3-4B

MiniCPM3-4B is the third generation of the MiniCPM series.

It outperforms models like Phi-3.5-mini-Instruct and GPT-3.5-Turbo-0125 and is comparable to recent 7B~9B models.

Compared to MiniCPM1.0 and MiniCPM2.0, MiniCPM3-4B has more powerful and versatile features, including support for function calls and a code interpreter.

With a 32k context window and LLMxMapReduce, it can theoretically handle unlimited context without using excessive memory.

https://github.com/OpenBMB/MiniCPM

https://huggingface.co/openbmb/MiniCPM3-4B

https://huggingface.co/openbmb/MiniCPM-Embedding

https://huggingface.co/openbmb/MiniCPM-Reranker

https://huggingface.co/openbmb/MiniCPM3-RAG-LoRA

https://huggingface.co/openbmb/MiniCPM3-4B-GPTQ-Int4

Project: SoulChat2.0

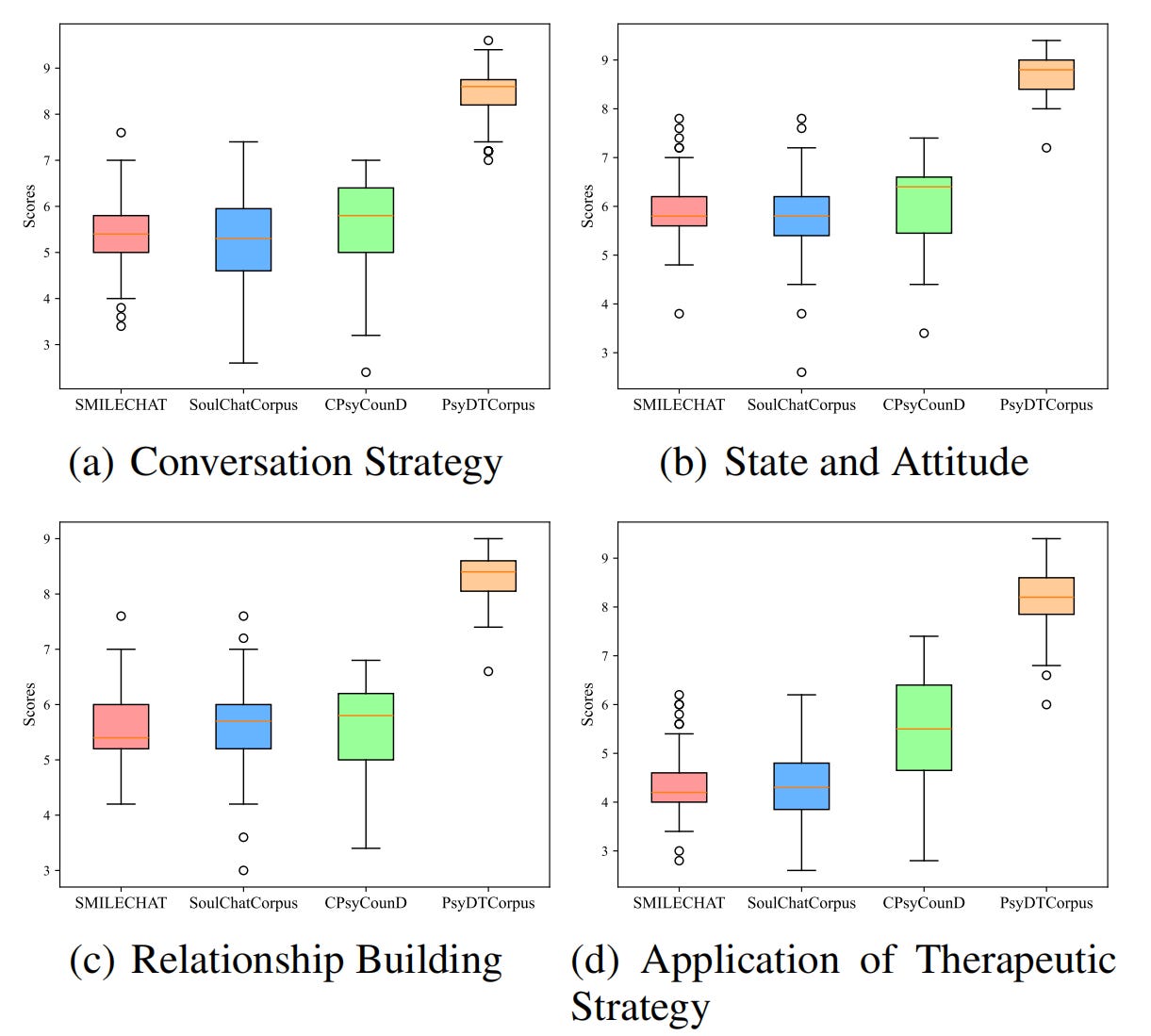

SoulChat2.0 is a digital twin model for psychological counseling developed by South China University of Technology's Institute of Future Technology and the Guangdong Provincial Digital Twin Lab.

This project generates high-quality counseling conversations from a small set of cases, simulating the language style and techniques of specific counselors.

SoulChat2.0 has been evaluated on multiple professional dimensions, and its fine-tuned large model significantly improves its performance in psychological counseling.

https://github.com/scutcyr/SoulChat2.0

https://modelscope.cn/models/YIRONGCHEN/SoulChat2.0-Llama-3.1-8B

https://modelscope.cn/models/YIRONGCHEN/SoulChat2.0-Qwen2-7B

https://modelscope.cn/models/YIRONGCHEN/SoulChat2.0-internlm2-7b

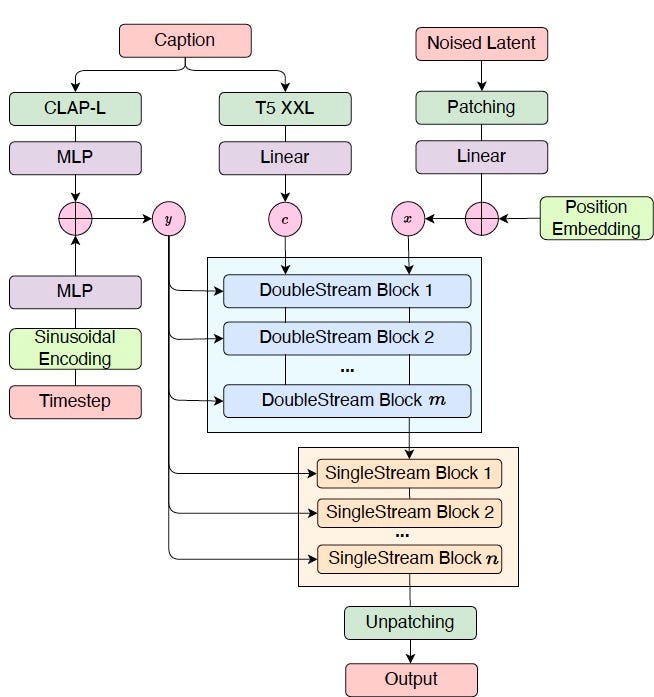

Project: FluxMusic

FluxMusic is a text-to-music generation project based on the Rectified Flow Transformer. It explores how to extend the diffusion-based Rectified Flow Transformer for music generation. The project provides PyTorch model definitions, pre-trained weights, and training and sampling code.

https://github.com/feizc/FluxMusic

https://arxiv.org/abs/2409.00587

Project: Reflection 70B

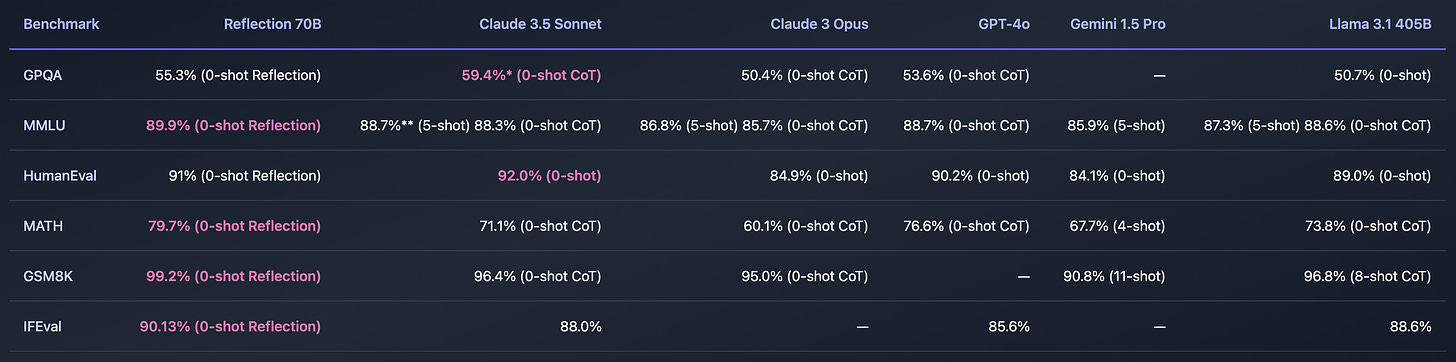

Reflection Llama-3.1 70B is a model fine-tuned on Llama 3.1 70B Instruct using a new technique called reflection tuning. This allows the model to detect and correct its reasoning errors. It was trained on synthetic data generated by Glaive and is suited for tasks requiring complex reasoning and reflection abilities.

https://huggingface.co/mattshumer/Reflection-Llama-3.1-70B

Project: LongLLaVA

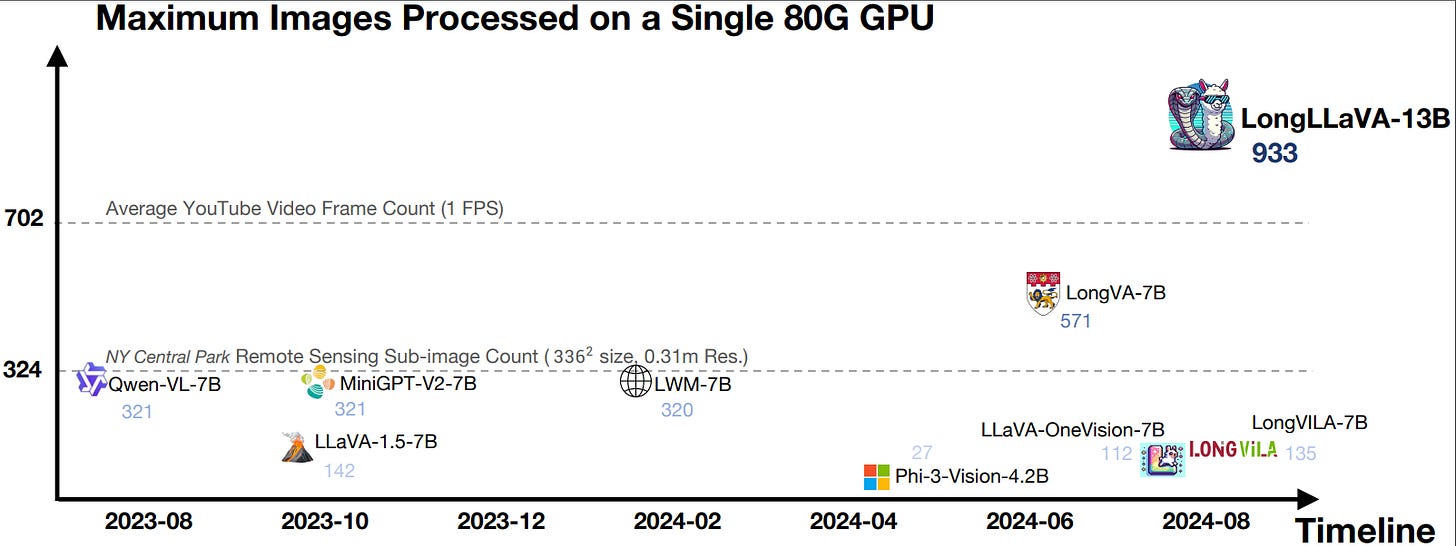

LongLLaVA is a multimodal large language model project designed to extend long-context capabilities for multimodal large language models (MLLMs).

The architecture blends Mamba with Transformer blocks and leverages temporal and spatial dependencies between multiple images. It employs a progressive training strategy, balancing efficiency and effectiveness.

LongLLaVA not only achieves competitive results on various benchmarks but also maintains high throughput and low memory usage. It can process nearly 1,000 images on a single A100 80GB GPU, showcasing its potential across a wide range of tasks.

https://huggingface.co/FreedomIntelligence/LongLLaVA

https://github.com/FreedomIntelligence/LongLLaVA

https://arxiv.org/pdf/2409.02889

Project: HF-LLM.rs🦀

HF-LLM.rs is a command-line tool for accessing large language models (LLMs) hosted on Hugging Face, such as Llama 3.1, Mistral, Gemma 2, and Cohere. Users can interact with these models directly from the terminal by providing inputs and receiving responses.