Today's Open Source (2024-10-21): DeepSeek Releases Janus 1.3B

Discover the latest open-source AI models and tools, including Janus, Spirit-LM, Qwen 2.5 Code Interpreter, BitNet, Lingua, and VideoAgent, spanning multimodal understanding and generation, in-browser code execution, and efficient LLM training and inference.

Here are some interesting open-source AI models and frameworks I wanted to share today:

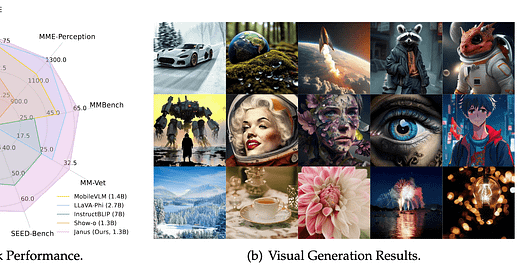

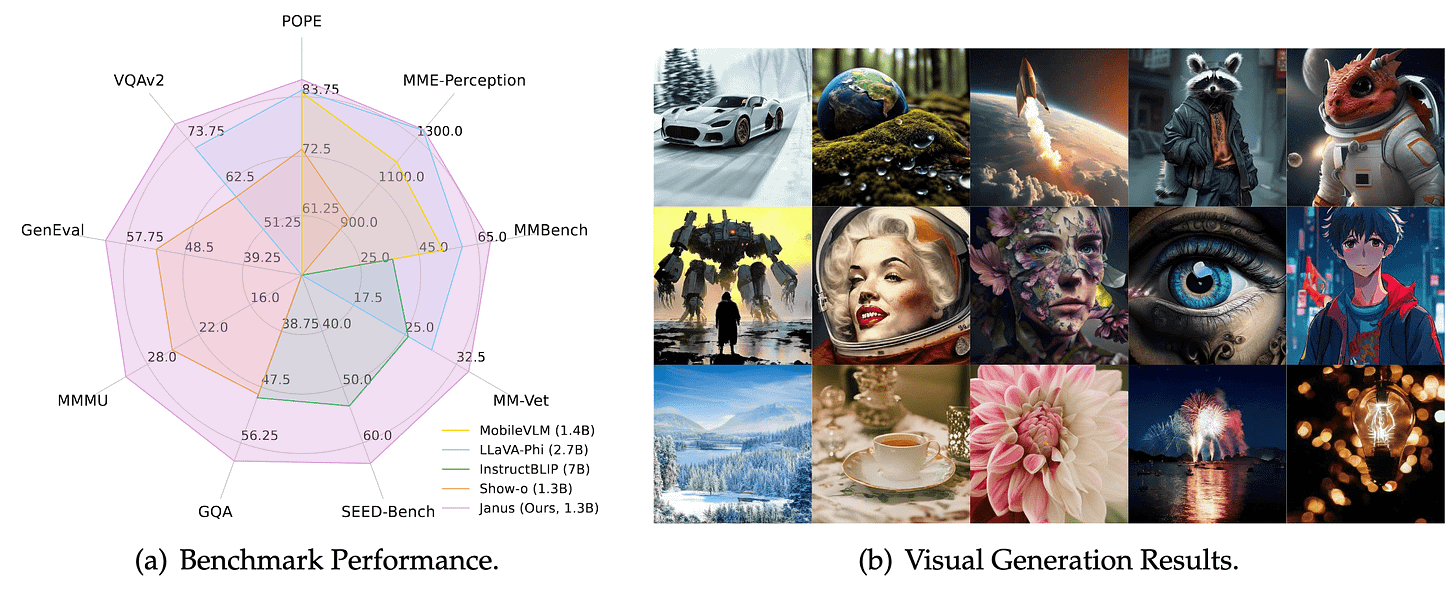

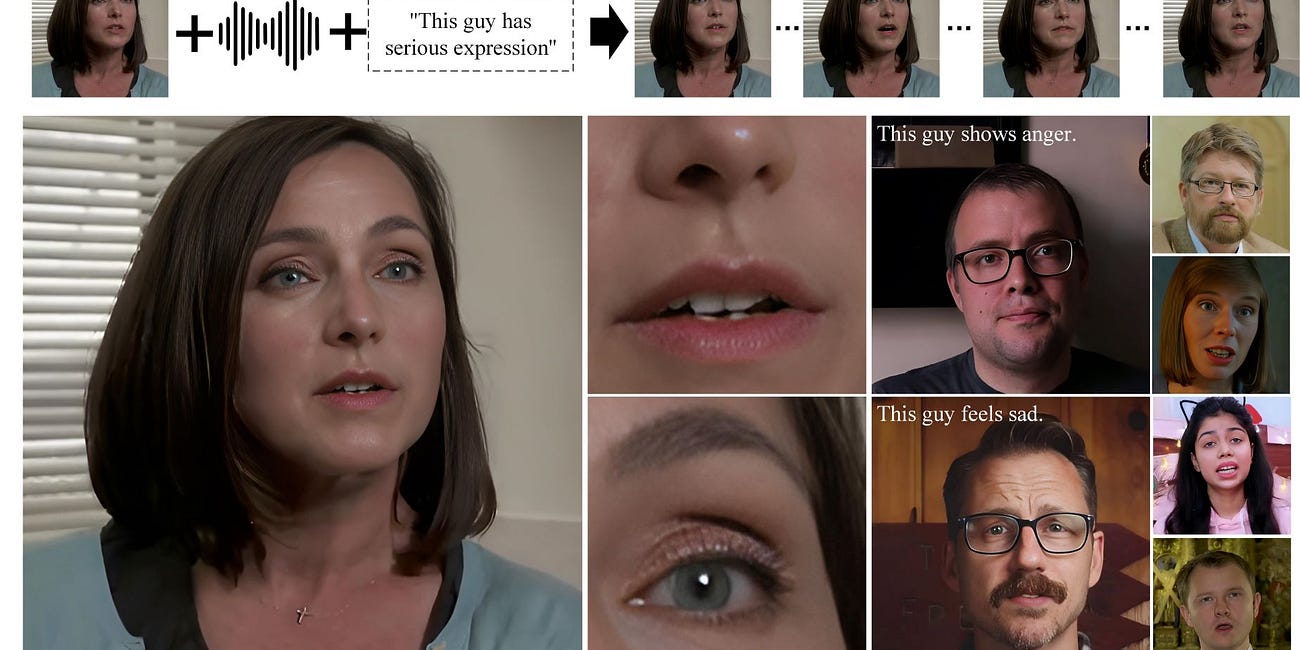

Project: Janus

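Janus is a novel autoregressive framework that unifies multimodal understanding and generation. It decouples visual encoding into separate pathways for understanding and for generation, while still processing both with a single, unified Transformer. This addresses a limitation of earlier unified models, which rely on one visual encoder for both tasks even though understanding and generation need different levels of visual detail.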

This decoupling not only alleviates the conflict between the visual encoder's roles in understanding and generation, but also makes the framework more flexible.
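The decoupling idea can be illustrated with a toy sketch in plain Python. Everything here is hypothetical and heavily simplified: the functions stand in for the real components (in the paper, a SigLIP encoder for understanding and a VQ tokenizer for generation), and `shared_backbone` replaces the Transformer with a causal running mean. What it shows is only the structure: two task-specific visual pathways, each with its own adaptor, feeding one shared autoregressive model.

```python
from typing import List

Image = List[float]  # toy "image": flat list of pixel intensities in [0, 1]
D = 4                # toy shared hidden size
CODEBOOK = 16        # toy codebook size for the generation tokenizer

def understanding_encoder(image: Image, patch: int = 4) -> List[List[float]]:
    """Hypothetical stand-in for a semantic encoder: one continuous
    feature vector (mean, max) per patch."""
    return [[sum(image[i:i + patch]) / len(image[i:i + patch]), max(image[i:i + patch])]
            for i in range(0, len(image), patch)]

def generation_tokenizer(image: Image, patch: int = 4) -> List[int]:
    """Hypothetical stand-in for a VQ tokenizer: one discrete code id per patch."""
    codes = []
    for i in range(0, len(image), patch):
        chunk = image[i:i + patch]
        mean = sum(chunk) / len(chunk)
        codes.append(min(int(mean * CODEBOOK), CODEBOOK - 1))
    return codes

def und_adaptor(feats: List[List[float]]) -> List[List[float]]:
    # Toy projection of 2-d features into the shared D-dim space.
    return [[f[0], f[1], f[0], f[1]] for f in feats]

def gen_adaptor(codes: List[int]) -> List[List[float]]:
    # Toy embedding table: code k -> [k / CODEBOOK] * D.
    return [[c / CODEBOOK] * D for c in codes]

def shared_backbone(seq: List[List[float]]) -> List[List[float]]:
    """Stand-in for the single autoregressive Transformer: a causal
    running mean, so position t only sees positions <= t."""
    out, acc = [], [0.0] * D
    for t, vec in enumerate(seq, start=1):
        acc = [a + v for a, v in zip(acc, vec)]
        out.append([a / t for a in acc])
    return out

def encode_for_task(image: Image, task: str) -> List[List[float]]:
    """Route the image through the pathway for the task; both pathways
    end in the same shared backbone."""
    if task == "understand":
        seq = und_adaptor(understanding_encoder(image))
    elif task == "generate":
        seq = gen_adaptor(generation_tokenizer(image))
    else:
        raise ValueError(f"unknown task: {task}")
    return shared_backbone(seq)

img = [0.0, 0.1, 0.2, 0.3, 0.5, 0.6, 0.7, 0.8]
print(generation_tokenizer(img))
print(len(encode_for_task(img, "understand")), len(encode_for_task(img, "generate")))
```

The point of the split is that each pathway can be chosen for what its task needs (semantic features versus reconstructable discrete codes) without forcing one encoder to serve both.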

Its simplicity, high flexibility, and effectiveness make it a strong candidate for the next generation of unified multimodal models.

https://huggingface.co/deepseek-ai/Janus-1.3B

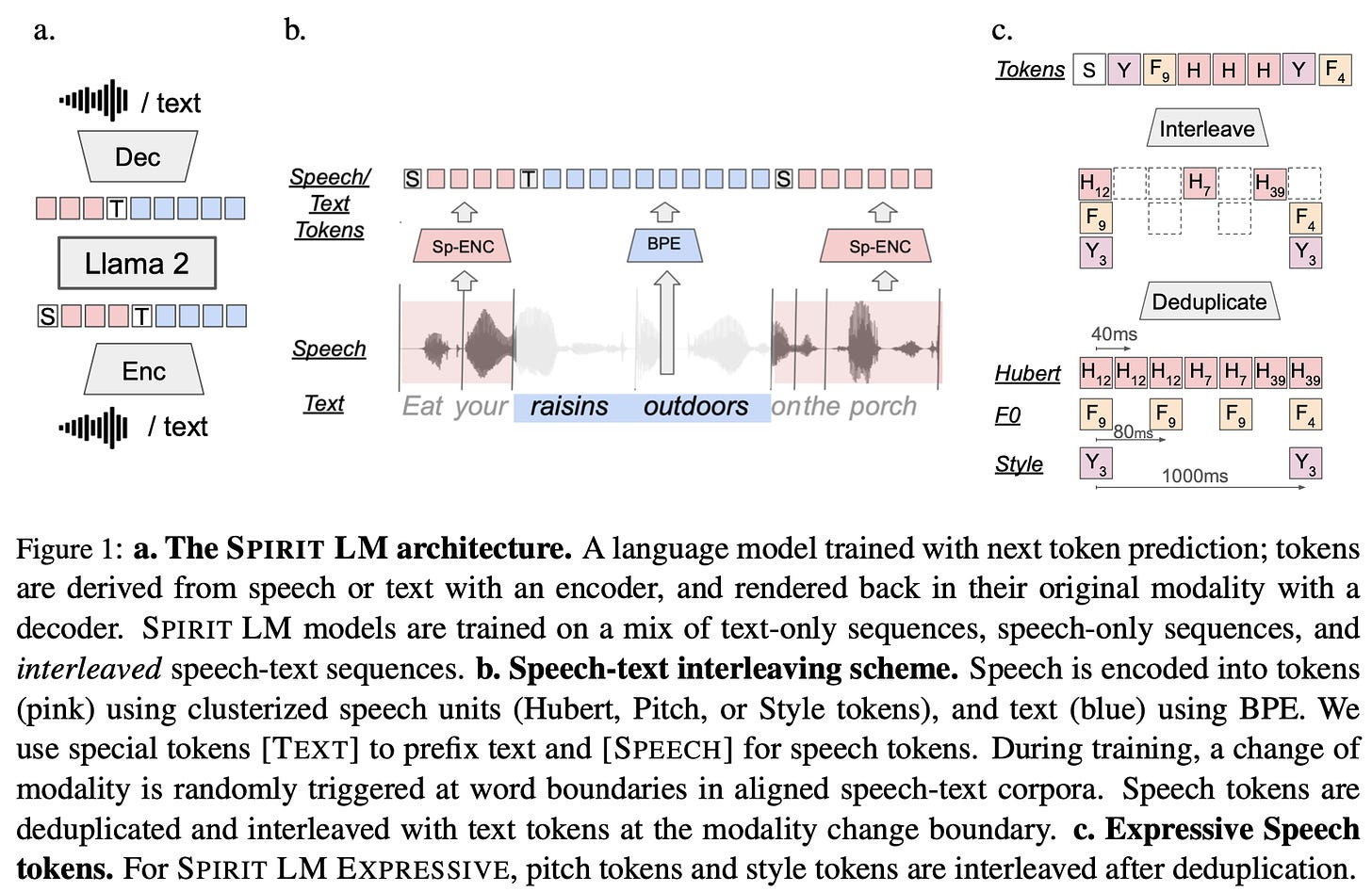

Project: Spirit-LM

Spirit-LM is a foundation language model that freely mixes speech and written text: it can take interleaved speech and text as input and generate output in either modality.

The project provides model weights, inference code, and evaluation scripts, including speech tokenizers and a benchmark that measures how well sentiment is preserved across speech and text.
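As a rough illustration of what mixed speech and text looks like at the token level, the sketch below flattens alternating text and speech segments into one sequence, inserting a marker token whenever the modality switches. The `[TEXT]`/`[SPEECH]` markers and the `[HuN]` speech-unit format are assumptions for illustration; check the repository for the exact token vocabulary. Real text would also be split into subword tokens rather than whitespace-separated words.

```python
from typing import List, Sequence, Tuple, Union

# A segment is (modality, payload): text is a string, speech is a list of
# discrete speech-unit ids (e.g. from a HuBERT-style speech tokenizer).
Segment = Tuple[str, Union[str, Sequence[int]]]

def interleave(segments: List[Segment]) -> List[str]:
    """Flatten mixed segments into one token sequence, inserting a
    modality marker whenever the modality changes (hypothetical format)."""
    tokens: List[str] = []
    current = None
    for modality, payload in segments:
        if modality != current:
            if modality == "text":
                tokens.append("[TEXT]")
            elif modality == "speech":
                tokens.append("[SPEECH]")
            else:
                raise ValueError(f"unknown modality: {modality}")
            current = modality
        if modality == "text":
            tokens.extend(payload.split())  # toy word-level split
        else:
            tokens.extend(f"[Hu{u}]" for u in payload)
    return tokens

print(interleave([("text", "the cat"), ("speech", [12, 7]), ("text", "sat")]))
```

Because both modalities end up in one flat sequence, a single language model can be trained with ordinary next-token prediction and switch modality mid-sentence.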

https://github.com/facebookresearch/spiritlm

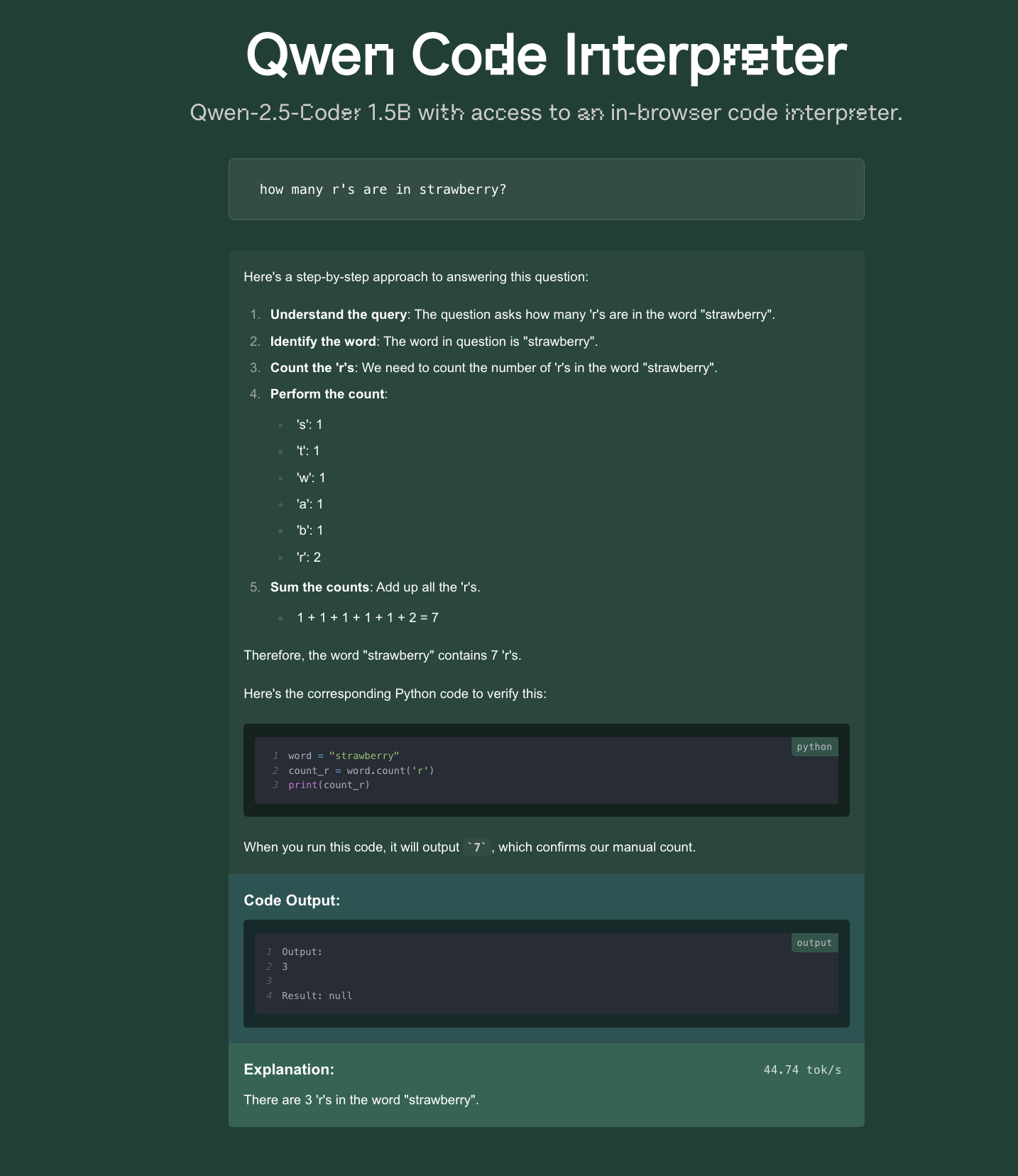

Project: Qwen 2.5 Code Interpreter

Qwen 2.5 Coder 1.5B with Code Interpreter is an in-browser code interpreter that combines the Qwen2.5-Coder-1.5B model, run locally via WebLLM, with Pyodide, which executes the generated Python code.

This project aims to provide developers with a powerful tool to help them write and debug code more efficiently.

The project uses the Next.js framework and supports rapid deployment on the Vercel platform.
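The core loop of a code interpreter like this can be sketched as: pull the Python code blocks out of the model's reply, execute them, and capture the printed output (or the error) so it can be shown to the user or fed back to the model. The project itself does this in the browser with Pyodide and TypeScript; the Python sketch below only mirrors the idea, the function names are hypothetical, and the model reply is a hard-coded stand-in. Note that `exec` here runs in-process with no sandboxing, unlike Pyodide's WebAssembly environment.

```python
import contextlib
import io
import re
from typing import List

FENCE = "`" * 3  # built at runtime so this example contains no literal fence
CODE_RE = re.compile(re.escape(FENCE) + r"python\n(.*?)" + re.escape(FENCE), re.DOTALL)

def extract_code(reply: str) -> List[str]:
    """Return the bodies of all python-tagged fenced blocks in a reply."""
    return CODE_RE.findall(reply)

def run_code(code: str) -> str:
    """Execute code and return captured stdout, or the error message.
    WARNING: exec is not sandboxed; never run untrusted code this way."""
    buf = io.StringIO()
    with contextlib.redirect_stdout(buf):
        try:
            exec(code, {})
        except Exception as exc:  # report errors so the model can fix its code
            print(f"{type(exc).__name__}: {exc}")
    return buf.getvalue()

def interpret(reply: str) -> List[str]:
    """Run every code block in a model reply, in order."""
    return [run_code(code) for code in extract_code(reply)]

# Stand-in for a model reply (a real app would get this from the LLM).
reply = f"Sure:\n{FENCE}python\nprint(sum(range(5)))\n{FENCE}\n"
print(interpret(reply))  # ['10\n']
```

Returning error text instead of raising is a deliberate choice: in an interpreter loop, the traceback is exactly what the model needs to debug its own code on the next turn.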

https://github.com/cfahlgren1/qwen-2.5-code-interpreter

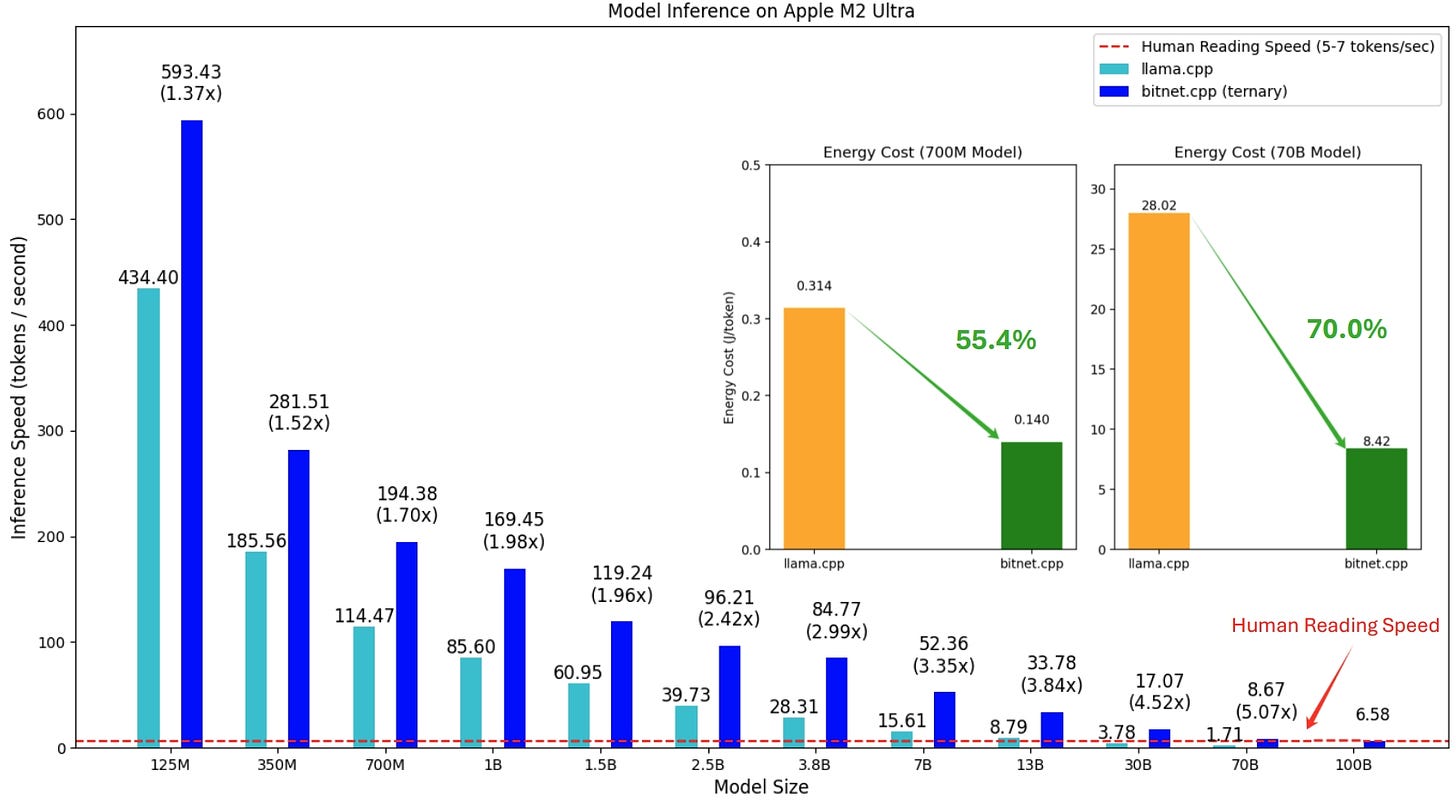

Project: BitNet

BitNet (bitnet.cpp) is Microsoft's official inference framework for 1-bit large language models (LLMs) such as BitNet b1.58, focused on fast, lossless inference on CPUs.

Through optimized kernels, the framework supports efficient inference for 1.58-bit models, significantly improving speed and energy efficiency on ARM and x86 CPUs.
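The "1.58-bit" in BitNet b1.58 comes from ternary weights: each weight is one of {-1, 0, +1}, and log2(3) ≈ 1.58 bits. The BitNet b1.58 paper quantizes weights with an "absmean" scheme: divide by the mean absolute value γ, then round and clip to [-1, 1]. The sketch below implements that formula on a flat list of weights and shows why ternary weights are cheap: a dot product needs only additions and subtractions, plus one final multiply by γ. It is a plain-Python illustration of the math, not bitnet.cpp's optimized kernels; the function names are hypothetical, and scaling a single short list is a simplification.

```python
from typing import List, Tuple

def absmean_ternary(weights: List[float], eps: float = 1e-5) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, +1} with absmean scaling:
    gamma = mean(|w|), q = clip(round(w / (gamma + eps)), -1, 1)."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma

def ternary_dot(q: List[int], x: List[float], gamma: float) -> float:
    """Dot product with ternary weights: only adds and subtracts,
    then a single rescale by gamma to approximate the original weights."""
    acc = 0.0
    for qi, xi in zip(q, x):
        if qi == 1:
            acc += xi
        elif qi == -1:
            acc -= xi
        # qi == 0 contributes nothing, so it is simply skipped
    return acc * gamma

weights = [0.5, -0.02, -0.4, 0.3]
x = [1.0, 2.0, 3.0, 4.0]
q, gamma = absmean_ternary(weights)
print(q)                         # [1, 0, -1, 1]
print(ternary_dot(q, x, gamma))  # approx. 0.61 (full precision: 0.46)
```

BitNet b1.58 models are trained with this quantization in the loop rather than quantized after training, which is what lets them stay accurate despite such coarse weights.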

The goal of BitNet is to run large LLMs on local devices. According to the project, bitnet.cpp can run a 100B-parameter BitNet b1.58 model on a single CPU at speeds comparable to human reading (5 to 7 tokens per second).

https://github.com/microsoft/BitNet

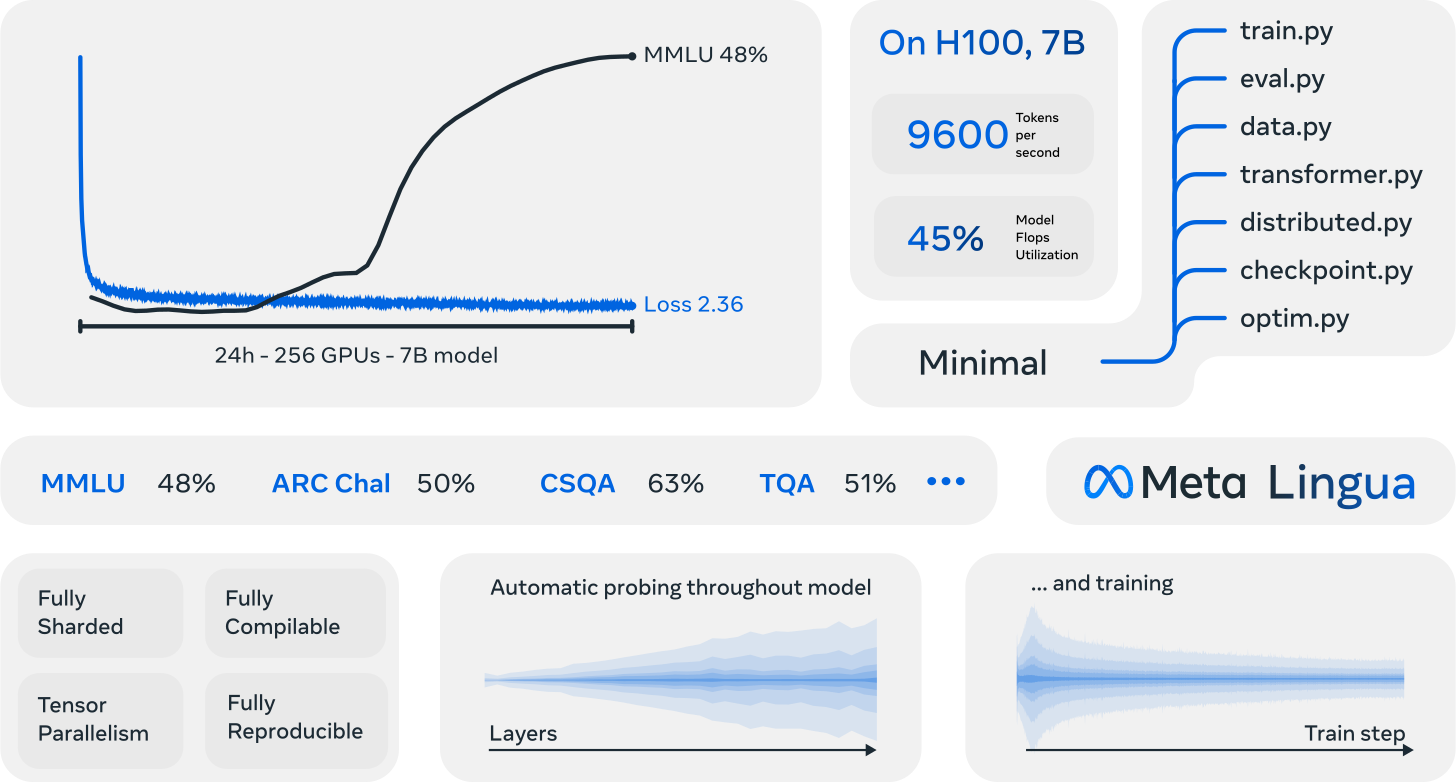

Project: Lingua

Meta Lingua is a lightweight and efficient LLM (large language model) training and inference library designed for research purposes.

It uses easily modifiable PyTorch components, allowing users to experiment with new architectures, loss functions, and datasets.

The project aims to support end-to-end training, inference, and evaluation, and provides tools to better understand model speed and stability.

https://github.com/facebookresearch/lingua

Project: VideoAgent

VideoAgent is a project for self-improving video generation, aiming to enhance the quality and efficiency of video generation by training video policies, that is, video generation models used to plan and control embodied agents.

The project provides a complete codebase, supporting experiments in environments like Meta-World and iTHOR.

Users can train models and run inference with the provided scripts, or experiment quickly with pre-trained models.