Today's Open Source (2024-10-23): Stable Diffusion 3.5 Released

Explore the latest open-source AI models and tools, including Stable Diffusion 3.5, O1-nano, Moonshine, Whispo, Fast-LLM, and GraphLLM, spanning image generation, speech recognition, step-by-step reasoning, and LLM training and orchestration.

Here are some interesting open-source AI models and frameworks I wanted to share today:

Project: Stable Diffusion 3.5

Stable Diffusion 3.5 is a deep learning model for image generation, available in three versions (Large, Large Turbo, and Medium).

This project provides a lightweight inference implementation. It covers the model's text encoders (OpenAI CLIP-L/14, OpenCLIP bigG, and Google T5-XXL) and its Variational Autoencoder (VAE) decoder.

Users can generate high-quality images with simple commands. This makes the model suitable for applications such as AI art creation and computer vision research.

https://stability.ai/news/introducing-stable-diffusion-3-5

Project: O1-nano

O1-nano is an open-source project that builds a simplified version of OpenAI's o1 model series. The project focuses on showing that the model can solve arithmetic problems.

O1-nano combines chain-of-thought reasoning with reinforcement learning. During both training and inference, the model generates internal reasoning tokens as well as completion tokens.

The project's goal is to enhance problem-solving capabilities by breaking down complex problems into subtasks.
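To make the idea of subtasks concrete, here is a minimal sketch in plain Python. It splits an arithmetic problem into one subtask per operation and keeps the resulting reasoning steps separate from the final completion. It also scores the completion with a simple correct/incorrect reward of the kind often used in reinforcement learning. This is not O1-nano's code. The functions `solve_with_reasoning` and `outcome_reward` are hypothetical, and the outcome-only reward is an assumption chosen for illustration.

```python
import ast
import operator

# Hypothetical, deliberately small operator set (no division or unary minus).
_OPS = {
    ast.Add: ("+", operator.add),
    ast.Sub: ("-", operator.sub),
    ast.Mult: ("*", operator.mul),
}


def solve_with_reasoning(expression: str) -> tuple[list[str], str]:
    """Break an expression into one subtask per binary operation.

    Returns (internal reasoning steps, final completion) so the two
    kinds of output stay separate.
    """
    steps: list[str] = []

    def evaluate(node: ast.AST) -> int:
        # Leaf: an integer literal (bools are rejected explicitly).
        if (isinstance(node, ast.Constant) and isinstance(node.value, int)
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            left = evaluate(node.left)    # solve the left subtask first
            right = evaluate(node.right)  # then the right subtask
            symbol, fn = _OPS[type(node.op)]
            result = fn(left, right)
            steps.append(f"Step {len(steps) + 1}: {left} {symbol} {right} = {result}")
            return result
        raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    answer = evaluate(ast.parse(expression, mode="eval").body)
    return steps, str(answer)


def outcome_reward(completion: str, expected: int) -> float:
    """Assumed outcome-based reward: 1.0 for a correct final answer, else 0.0."""
    try:
        return 1.0 if int(completion) == expected else 0.0
    except ValueError:
        return 0.0
```

For example, `solve_with_reasoning("(3 + 4) * 5 - 6")` produces three reasoning steps (`3 + 4 = 7`, `7 * 5 = 35`, `35 - 6 = 29`) and the completion `"29"`. A learned model would generate those steps as tokens instead of computing them symbolically.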

https://github.com/llSourcell/O1-nano

Project: Moonshine

Moonshine is a set of fast and accurate speech-to-text models optimized for resource-constrained devices.

It is well suited to real-time, on-device applications such as live transcription and voice command recognition.

On the datasets used by the OpenASR leaderboard maintained by Hugging Face, Moonshine achieved a lower (better) Word Error Rate (WER) than similarly sized OpenAI Whisper models.
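WER is the standard metric here. It counts the word substitutions (S), deletions (D), and insertions (I) needed to turn the model's transcript into the reference transcript, then divides by the number of reference words (N): WER = (S + D + I) / N. The following is a minimal sketch that computes it with a word-level edit distance. The function name `word_error_rate` is illustrative. The code only splits on whitespace, whereas leaderboards typically normalize text (case, punctuation) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Compute WER = (S + D + I) / N using word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            mismatch = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,             # deletion
                dist[i][j - 1] + 1,             # insertion
                dist[i - 1][j - 1] + mismatch,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

For the reference "the cat sat on the mat" and the hypothesis "the cat sit on mat", the transcript has one substitution (sat → sit) and one deletion ("the"). The result is a WER of 2/6 ≈ 0.33, and lower is better.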

https://github.com/usefulsensors/moonshine

Project: Whispo

Whispo is an AI-powered speech transcription tool.

Users hold down the Ctrl key to record speech. When they release it, the transcribed text is automatically inserted into the application currently in use.

The tool works with any application that accepts text input, and all data is stored locally.

Whispo uses OpenAI’s Whisper for transcription. Users can also connect their own transcription API by setting a custom API URL.

It can also post-process transcripts with large language models from providers such as OpenAI, Groq, and Google (Gemini).
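The hold-to-record flow can be pictured as a small push-to-talk controller. Pressing Ctrl starts buffering audio. Releasing it sends the buffer to a transcriber, optionally passes the text through an LLM clean-up step, and then inserts the result. This is a hypothetical sketch, not Whispo's code. `PushToTalk`, its callbacks, and `transcription_endpoint` are illustrative names. The `/audio/transcriptions` path assumes an OpenAI-compatible API behind the custom URL.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


def transcription_endpoint(base_url: str) -> str:
    """Hypothetical: append an OpenAI-style transcription path to a custom base URL."""
    return base_url.rstrip("/") + "/audio/transcriptions"


@dataclass
class PushToTalk:
    transcribe: Callable[[bytes], str]                   # e.g. a Whisper API call
    insert_text: Callable[[str], None]                   # types into the focused app
    post_process: Optional[Callable[[str], str]] = None  # optional LLM clean-up
    recording: bool = False
    _buffer: bytearray = field(default_factory=bytearray)

    def on_ctrl_down(self) -> None:
        # Start a fresh recording; ignore key-repeat events while held.
        if not self.recording:
            self.recording = True
            self._buffer.clear()

    def on_audio_chunk(self, chunk: bytes) -> None:
        # Audio is only captured while the key is held.
        if self.recording:
            self._buffer.extend(chunk)

    def on_ctrl_up(self) -> None:
        if not self.recording:
            return
        self.recording = False
        text = self.transcribe(bytes(self._buffer))
        if self.post_process is not None:
            text = self.post_process(text)
        self.insert_text(text)
```

Passing the transcriber and post-processor in as callables keeps the key-handling logic independent of the provider, which mirrors the "bring your own API" option described above.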

https://github.com/egoist/whispo

Project: Fast-LLM

Fast-LLM is an open-source library for training large language models, built on PyTorch and Triton.

The project emphasizes high training speed, scalability to large clusters, support for multiple model architectures, and ease of use.

The project contrasts itself with corporate-backed frameworks such as Megatron-LM. It stresses that it is fully open-source and community-driven, so researchers can freely customize and optimize it as needed.

https://github.com/ServiceNow/Fast-LLM

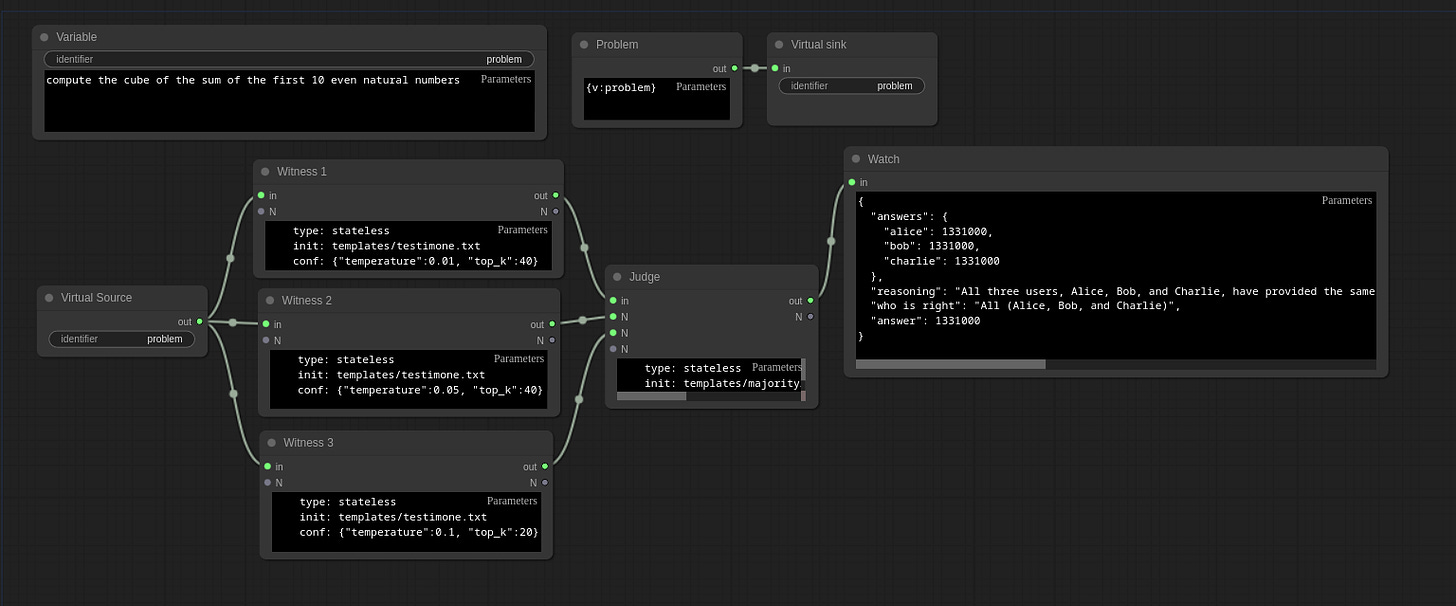

Project: GraphLLM

GraphLLM is a graph-based framework for processing data through one or more large language models (LLMs).

It includes an agent that can perform web searches and run Python code. It also provides tools for web scraping and for reformatting data into an LLM-friendly format.
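Converting a scraped web page into an LLM-friendly format usually means removing markup, scripts, and styles and keeping readable text with some structure. Here is a minimal sketch using only Python's standard `html.parser`. It is not GraphLLM's scraper. `TextExtractor` and `html_to_llm_text` are hypothetical names, and the handful of block-level tags handled is an assumption.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script>/<style> and breaking lines at block tags."""

    SKIP_TAGS = {"script", "style"}
    BLOCK_TAGS = {"p", "div", "li", "h1", "h2", "h3", "br", "tr"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs) -> None:
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag in self.BLOCK_TAGS:
            self._chunks.append("\n")
            if tag == "li":
                self._chunks.append("- ")  # render list items as bullets

    def handle_endtag(self, tag) -> None:
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag in self.BLOCK_TAGS:
            self._chunks.append("\n")

    def handle_data(self, data) -> None:
        if self._skip_depth == 0:
            self._chunks.append(data)

    def text(self) -> str:
        # Collapse runs of whitespace within each line and drop empty lines.
        lines = (" ".join(line.split()) for line in "".join(self._chunks).splitlines())
        return "\n".join(line for line in lines if line)


def html_to_llm_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

For example, a page with a `<h1>`, a paragraph, a bulleted list, and inline `<style>`/`<script>` blocks becomes a few plain lines: the title, the paragraph text, and `- item` bullets, with the CSS and JavaScript removed.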

GraphLLM is designed to give users full control over raw prompts and model outputs without hiding the library's internal workings. The project also provides a GUI similar to ComfyUI that supports advanced features for building complex graphs.
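A graph-based pipeline can be illustrated with a set of prompt nodes executed in dependency order. Each node's raw prompt is filled from upstream outputs, and every exact prompt sent to the model is recorded. This is a hypothetical sketch, not GraphLLM's API. `run_graph`, the node format, and the stand-in `llm` callable are assumptions. Ordering relies on Python's standard `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable

LLM = Callable[[str], str]  # raw prompt in, raw completion out


def run_graph(
    nodes: dict[str, tuple[str, list[str]]],
    llm: LLM,
    inputs: dict[str, str],
) -> tuple[dict[str, str], dict[str, str]]:
    """Run prompt nodes in dependency order.

    `nodes` maps a node name to (raw prompt template, upstream names).
    Templates use str.format placeholders named after inputs or upstream nodes.
    Returns (raw output per node, exact raw prompt sent per node).
    """
    graph = {name: set(deps) for name, (_, deps) in nodes.items()}
    values = dict(inputs)
    prompts: dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        if name in inputs:
            continue  # plain input value, not an LLM node
        template, _ = nodes[name]
        prompt = template.format(**values)  # nothing is hidden from the caller
        prompts[name] = prompt
        values[name] = llm(prompt)
    outputs = {name: values[name] for name in nodes}
    return outputs, prompts
```

With `nodes = {"summary": ("Summarize: {page}", ["page"]), "title": ("Title for: {summary}", ["summary"])}`, the `summary` node always runs before `title`. The returned `prompts` dict shows exactly what each model call received, which reflects the "no hidden internals" goal described above.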