Today's Open Source (2024-11-11): OpenCoder Code Language Model Family

Explore OpenCoder’s code models, CogVideoX 1.5 video generation, and HK-O1aw legal AI. Discover innovative open-source solutions for AI-driven workflows and NLP.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: OpenCoder

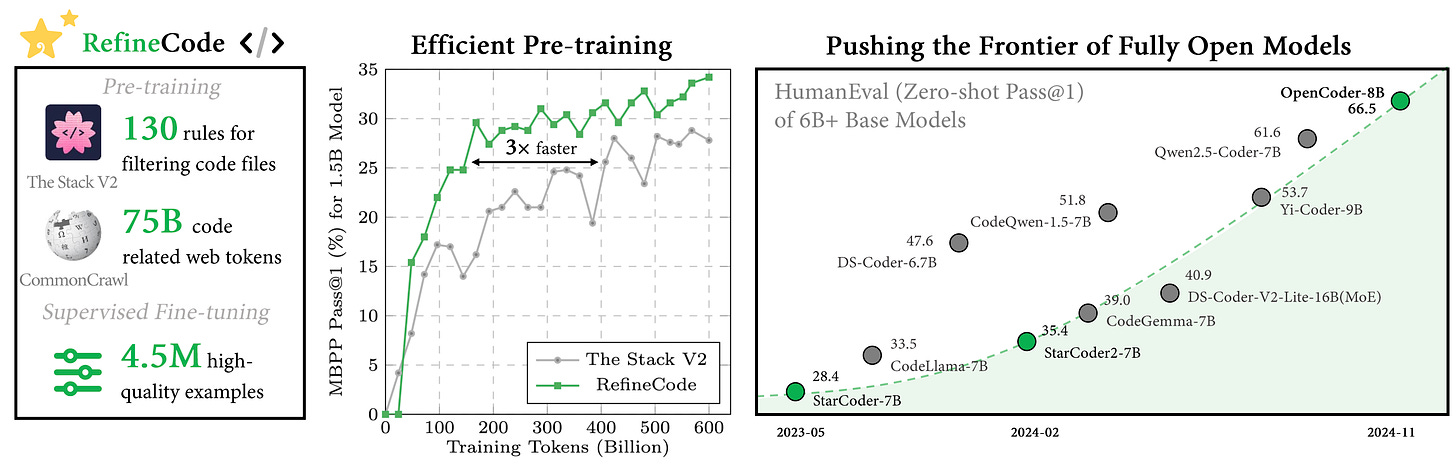

OpenCoder is an open and reproducible family of large language models for code, including 1.5B and 8B base and chat models, supporting both English and Chinese.

OpenCoder is pre-trained from scratch on 2.5 trillion tokens (90% raw code, 10% code-related web data). It is then fine-tuned on more than 4.5 million high-quality supervised examples, reaching top-tier performance among code language models.
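For scale, the stated 90/10 split of the 2.5-trillion-token pre-training corpus works out as follows:

$$
0.9 \times 2.5\,\text{T} = 2.25\,\text{T tokens of raw code}, \qquad 0.1 \times 2.5\,\text{T} = 0.25\,\text{T tokens of code-related web data}
$$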

Project: CogVideoX 1.5

CogVideoX 1.5 is an upgraded version of the open-source CogVideoX video generation model, adding support for 10-second videos and higher resolutions.

CogVideoX1.5 variants support video generation at any resolution.

The release includes weights in SAT (SwissArmyTransformer) format, with separate Transformer, VAE, and text encoder modules.

https://huggingface.co/THUDM/CogVideoX1.5-5B-SAT

Project: HK-O1aw

HK-O1aw is a legal assistant designed specifically for Hong Kong's legal system, aimed at handling complex legal reasoning.

Built on the Align-Anything framework, the model is LLaMA-3.1-8B trained on the O1aw-Dataset.

The primary goal of HK-O1aw is to enhance large language models’ reasoning and problem-solving abilities in the legal field.

All training data, code, and prompts for synthetic data generation are open-sourced to foster community research and collaboration.

https://github.com/HKAIR-Lab/HK-O1aw/

Project: AFlow

AFlow is a framework for automatically generating and optimizing agent workflows.

It represents workflows as code and runs Monte Carlo tree search (MCTS) over this code-based workflow space to find efficient workflows, aiming to replace manual workflow design with automated search.

The automatically discovered workflows have shown the potential to outperform handcrafted ones across a variety of tasks.
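To make the search idea concrete, here is a minimal, generic Monte Carlo tree search (UCT) over toy workflows. It is not AFlow's implementation. The operator names (`generate`, `review`, `revise`, `ensemble`, `test`), the `evaluate` scoring function, and the depth limit are all hypothetical stand-ins; AFlow itself has an LLM modify real workflow code and scores the results on actual task data. The sketch only shows the four MCTS phases (selection, expansion, simulation, backpropagation) applied to workflows rendered as code.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical operator vocabulary and depth limit (not AFlow's real operators).
OPERATORS = ["generate", "review", "revise", "ensemble", "test"]
MAX_STEPS = 4


def render(workflow: tuple) -> str:
    """Render an operator sequence as (toy) Python source code."""
    body = "\n".join(f"    x = {op}(x)" for op in workflow) or "    pass"
    return f"def workflow(x):\n{body}\n    return x"


def evaluate(workflow: tuple) -> float:
    """Hypothetical stand-in for running a workflow on validation tasks."""
    if not workflow or workflow[0] != "generate":
        return 0.0
    score = 0.5
    if ("review" in workflow and "revise" in workflow
            and workflow.index("review") < workflow.index("revise")):
        score += 0.3  # reward a review step that precedes a revision step
    if "test" in workflow:
        score += 0.15
    return score - 0.05 * len(workflow)  # penalize longer (costlier) workflows


@dataclass
class Node:
    workflow: tuple
    parent: Optional["Node"] = None
    children: list = field(default_factory=list)
    visits: int = 0
    value: float = 0.0

    def untried(self) -> list:
        """Operators not yet used to extend this node (none at max depth)."""
        if len(self.workflow) >= MAX_STEPS:
            return []
        tried = {child.workflow[-1] for child in self.children}
        return [op for op in OPERATORS if op not in tried]


def uct(child: Node, parent_visits: int, c: float = 1.4) -> float:
    """Upper Confidence bound for Trees: mean reward plus an exploration bonus."""
    return child.value / child.visits + c * math.sqrt(math.log(parent_visits) / child.visits)


def mcts(iterations: int = 500, seed: int = 0) -> tuple:
    rng = random.Random(seed)
    root = Node(workflow=())
    best, best_score = (), float("-inf")
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCT while the node is fully expanded.
        while not node.untried() and node.children:
            parent = node
            node = max(parent.children, key=lambda ch: uct(ch, parent.visits))
        # 2. Expansion: extend the workflow with one untried operator.
        ops = node.untried()
        if ops:
            child = Node(workflow=node.workflow + (rng.choice(ops),), parent=node)
            node.children.append(child)
            node = child
        # 3. Simulation: random rollout to a complete workflow, then score it.
        rollout = list(node.workflow)
        while len(rollout) < MAX_STEPS and rng.random() < 0.5:
            rollout.append(rng.choice(OPERATORS))
        reward = evaluate(tuple(rollout))
        if reward > best_score:
            best, best_score = tuple(rollout), reward
        # 4. Backpropagation: update visit counts and values up to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    return best


best = mcts()
print(best)
print(render(best))
```

`mcts()` returns the best-scoring operator sequence it found, and `render(best)` turns it into the code form being searched over. In a real system, evaluating a candidate workflow is by far the most expensive step, which is why a tree search that concentrates evaluations on promising branches is attractive.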

Project: VideoChat

VideoChat is a real-time interactive digital human project. It supports both an end-to-end voice pipeline (GLM-4-Voice + THG) and a cascaded pipeline (ASR → LLM → TTS → THG), where THG stands for talking-head generation.

Users can customize the digital human's appearance and voice, with voice cloning that requires no training, and first-packet latency can be as low as 3 seconds.

The project is ideal for applications requiring real-time voice interaction, offering flexible customization options.

https://github.com/Henry-23/VideoChat
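The cascaded pipeline can be pictured as a chain of stages in which speech and video for the first sentence can be produced before the LLM has finished the whole reply. The sketch below is not VideoChat's code: the stage costs, the canned reply, and every function name are hypothetical, and it uses a virtual clock instead of real models to show why sentence-level streaming lowers first-packet latency.

```python
from dataclasses import dataclass
from typing import Iterator, List, Optional, Tuple


@dataclass
class StageCosts:
    """Hypothetical per-stage costs in seconds (not VideoChat measurements)."""
    asr: float = 0.5             # transcribe the user's speech
    llm_per_token: float = 0.05  # generate one reply token
    tts_per_char: float = 0.01   # synthesize speech for one character
    thg_per_char: float = 0.02   # render talking-head video for that speech


def llm_stream(prompt: str) -> Iterator[str]:
    """Stand-in for a streaming LLM: ignores the prompt, yields a canned reply word by word."""
    yield from "Sure. Here is a short answer. Anything else?".split(" ")


def split_sentences(tokens: Iterator[str]) -> Iterator[Tuple[str, int]]:
    """Group streamed tokens into sentences, reporting the token count of each."""
    buf: List[str] = []
    for tok in tokens:
        buf.append(tok)
        if tok.endswith((".", "?", "!")):
            yield " ".join(buf), len(buf)
            buf = []
    if buf:
        yield " ".join(buf), len(buf)


def cascaded_latencies(costs: StageCosts, streaming: bool) -> Tuple[float, float]:
    """Return (first_packet, total) seconds for ASR -> LLM -> TTS -> THG on a virtual clock."""
    clock = costs.asr  # the LLM cannot start until ASR has produced text
    sentences = list(split_sentences(llm_stream("transcribed user text")))
    per_char = costs.tts_per_char + costs.thg_per_char
    if not streaming:
        # Wait for the whole reply, then run TTS and THG on it in one go.
        clock += sum(n for _, n in sentences) * costs.llm_per_token
        clock += len(" ".join(s for s, _ in sentences)) * per_char
        return clock, clock
    first_packet: Optional[float] = None
    for sentence, n_tokens in sentences:
        clock += n_tokens * costs.llm_per_token  # LLM finishes this sentence
        clock += len(sentence) * per_char        # TTS, then THG, for this sentence only
        if first_packet is None:
            first_packet = clock                 # first audio/video chunk is ready
    return (first_packet if first_packet is not None else clock), clock


costs = StageCosts()
print("streaming:    ", cascaded_latencies(costs, streaming=True))
print("non-streaming:", cascaded_latencies(costs, streaming=False))
```

This model is deliberately simplified: it runs the stages one after another on a single worker, so total time is roughly the same in both modes and only the first packet moves earlier. A real system would also overlap stages (the LLM keeps generating while TTS and THG work on earlier sentences), which shortens the total as well.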

Project: Chonkie

Chonkie is a lightweight, fast text-chunking library for RAG pipelines, designed to simplify the chunking process.

It supports several chunking methods, including word-based, sentence-based, and semantic-similarity-based chunking.

Chonkie aims to provide an easy-to-use, bloat-free solution suitable for a wide range of natural language processing tasks.
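The three chunking strategies mentioned above can be sketched in plain Python as follows. This is not Chonkie's API. The function names, the regex sentence splitter, and the bag-of-words cosine similarity (a cheap stand-in for the embedding models that semantic chunking normally relies on) are assumptions made for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_chunks(text: str, chunk_size: int, overlap: int = 0) -> List[str]:
    """Fixed-size windows of `chunk_size` words, sharing `overlap` words between neighbors."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), chunk_size - overlap):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def sentence_chunks(text: str, max_words: int) -> List[str]:
    """Pack whole sentences into chunks of at most `max_words` words.
    A single sentence longer than `max_words` becomes its own oversized chunk."""
    chunks, current, count = [], [], 0
    for sent in split_sentences(text):
        n = len(sent.split())
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sent)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.3) -> List[str]:
    """Start a new chunk when adjacent sentences are dissimilar.
    Bag-of-words cosine similarity stands in for real sentence embeddings."""
    sentences = split_sentences(text)
    vectors = [Counter(re.findall(r"\w+", s.lower())) for s in sentences]
    groups = [[sentences[0]]] if sentences else []
    for prev, cur, sent in zip(vectors, vectors[1:], sentences[1:]):
        if _cosine(prev, cur) < threshold:
            groups.append([sent])  # topic shift: open a new chunk
        else:
            groups[-1].append(sent)
    return [" ".join(g) for g in groups]


text = "Cats purr softly. Cats purr loudly. Stocks fell today."
print(word_chunks(text, chunk_size=4, overlap=1))
print(sentence_chunks(text, max_words=6))
print(semantic_chunks(text))
```

Word-based chunking is the cheapest but can cut sentences in half; sentence-based chunking keeps sentences intact at the cost of uneven chunk sizes; semantic chunking tries to keep each chunk on one topic, which usually requires an embedding model in practice.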