Today's Open Source (2024-09-19): Alibaba Cloud Launches Qwen2.5 with Multilingual and Long-Text Support

Discover Alibaba Cloud's Qwen2.5, a multilingual large language model series trained on 18T tokens, and explore Moshi, a low-latency full-duplex voice dialogue framework.

Here are some interesting open-source AI models and frameworks I want to share today:

Project: Alibaba Cloud Qwen2.5

Qwen2.5 is a large language model series developed by Alibaba Cloud's Qwen team.

Training data grew from 7T tokens for Qwen2 to 18T tokens for Qwen2.5. The series supports 29 languages and comes in sizes ranging from 0.5B to 72B parameters. It handles up to 128K tokens of context, using YaRN to extrapolate beyond 32K tokens, and can generate up to 8K tokens of output.
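As a rough illustration of the context-length numbers above, the sketch below computes the scaling factor needed to stretch a 32K-token window to 128K and builds a `rope_scaling` entry of the kind typically added to a model's `config.json` to enable YaRN. The key names are an assumption here and should be checked against the Qwen2.5 repository before use.

```python
import json

NATIVE_CONTEXT = 32 * 1024    # 32K tokens handled without extrapolation
TARGET_CONTEXT = 128 * 1024   # 128K tokens, the maximum quoted above


def yarn_rope_scaling(native: int, target: int) -> dict:
    """Build a YaRN rope_scaling entry that stretches `native` to `target`."""
    if target <= native:
        raise ValueError("target context must exceed the native context")
    return {
        "type": "yarn",                               # assumed key name
        "factor": target / native,                    # how far to stretch
        "original_max_position_embeddings": native,   # assumed key name
    }


config_patch = {"rope_scaling": yarn_rope_scaling(NATIVE_CONTEXT, TARGET_CONTEXT)}
print(json.dumps(config_patch, indent=2))
```

The factor works out to 4, which is simply 128K divided by 32K.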

Qwen2.5 shows significant improvements in following instructions, generating structured outputs, and supporting multiple languages. The release also includes specialized open-source models such as Qwen2.5-Coder and Qwen2.5-Math.

https://github.com/QwenLM/Qwen2.5

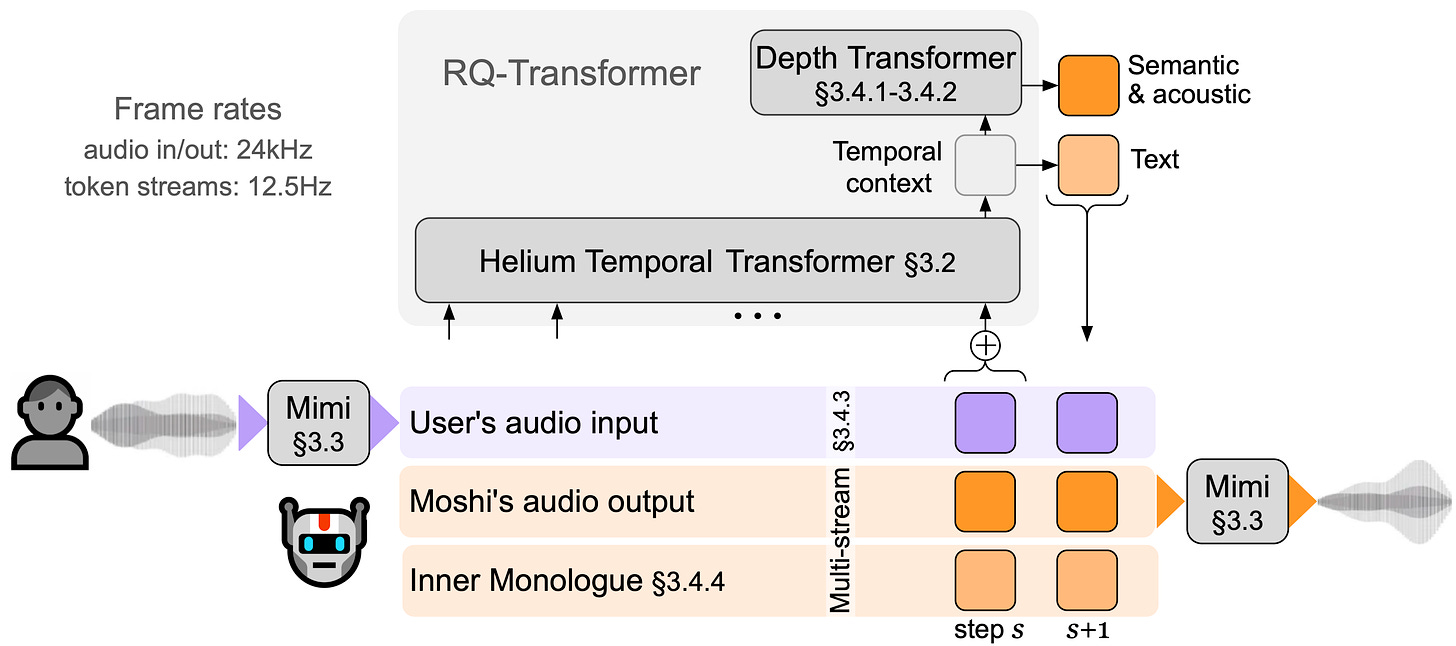

Project: Moshi

Moshi is a speech-text foundation model and a full-duplex voice dialogue framework.

It uses Mimi, a streaming neural audio codec that compresses 24 kHz audio to 1.1 kbps with just 80 ms of latency.
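To put those codec figures in perspective, here is a small back-of-the-envelope calculation. It treats the 80 ms latency as the length of one codec frame and uses uncompressed 16-bit mono PCM as the baseline; both are assumptions made for illustration, not details stated by the project.

```python
SAMPLE_RATE_HZ = 24_000     # from the text: 24 kHz audio
BITRATE_BPS = 1_100         # from the text: 1.1 kbps
FRAME_MS = 80               # from the text: 80 ms, assumed to be one frame
PCM_BITS_PER_SAMPLE = 16    # assumption: 16-bit mono PCM as the raw baseline

# Audio samples covered by one frame, and frames emitted per second.
samples_per_frame = SAMPLE_RATE_HZ * FRAME_MS // 1000
frames_per_second = 1000 / FRAME_MS

# Bit budget available to encode each frame at the quoted bitrate.
bits_per_frame = BITRATE_BPS * FRAME_MS // 1000

# Bitrate of the uncompressed baseline and the resulting compression ratio.
raw_bitrate_bps = SAMPLE_RATE_HZ * PCM_BITS_PER_SAMPLE
compression_ratio = raw_bitrate_bps / BITRATE_BPS

print(f"{samples_per_frame} samples per frame at {frames_per_second} frames/s")
print(f"{bits_per_frame} bits per frame")
print(f"about {compression_ratio:.0f}x smaller than {raw_bitrate_bps // 1000} kbps PCM")
```

Under these assumptions, each 80 ms frame of 1,920 samples is squeezed into 88 bits.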

Moshi models two audio streams, one for its own speech and one for the user's. It also predicts text tokens corresponding to its own speech, which improves generation quality.

The project achieves real-world latency as low as 200 ms on an L4 GPU.

https://github.com/kyutai-labs/moshi

Project: Windows Agent Arena

Windows Agent Arena (WAA) is a scalable platform for testing and benchmarking multimodal AI agents on the Windows OS.

It provides researchers and developers with a reproducible and realistic environment to test agent workflows across various tasks.

WAA supports large-scale agent deployment on Azure ML infrastructure, allowing parallel runs of multiple agents and fast benchmarking of hundreds of tasks within minutes.

https://github.com/microsoft/windowsagentarena

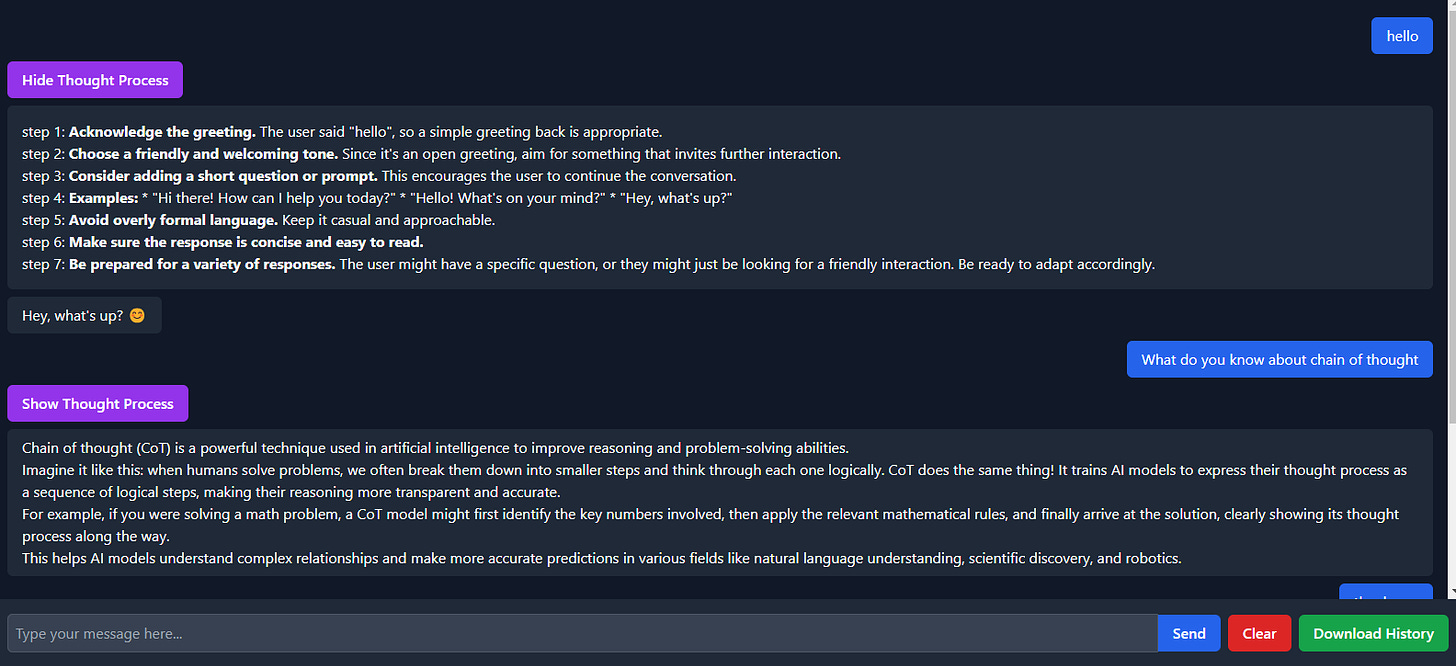

Project: ReflectionAnyLLM

ReflectionAnyLLM is a lightweight proof-of-concept project. It demonstrates basic chain-of-thought reasoning with any large language model (LLM) that supports OpenAI-compatible APIs.

It works with both local and remote LLMs, allowing users to switch between different providers with minimal setup.
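The general idea can be sketched in a few lines: draft an answer with step-by-step reasoning, then send the draft back and ask the model to review it. This is not the project's own code. The `send` transport, the prompts, the base URL, and the model name are all hypothetical; only the `/chat/completions` path and the `model`/`messages` body follow the usual OpenAI-compatible convention.

```python
import json
from typing import Callable, List, Tuple


def build_chat_request(base_url: str, model: str, messages: List[dict]) -> Tuple[str, str]:
    """Return (url, JSON body) for an OpenAI-compatible chat completion call."""
    url = base_url.rstrip("/") + "/chat/completions"
    body = json.dumps({"model": model, "messages": messages})
    return url, body


def answer_with_reflection(question: str, send: Callable[[str, str], str],
                           base_url: str, model: str) -> str:
    """Draft step-by-step reasoning, then ask the model to review and finalize it.

    `send(url, body)` is a hypothetical transport that returns the reply text.
    """
    draft_messages = [
        {"role": "system", "content": "Think step by step before answering."},
        {"role": "user", "content": question},
    ]
    draft = send(*build_chat_request(base_url, model, draft_messages))

    # Second pass: feed the draft back and request a checked final answer.
    review_messages = draft_messages + [
        {"role": "assistant", "content": draft},
        {"role": "user", "content": "Check the reasoning above for mistakes, "
                                    "then give the final answer."},
    ]
    return send(*build_chat_request(base_url, model, review_messages))


# Stand-in transport for a dry run; a real one would POST `body` to `url`.
def echo_transport(url: str, body: str) -> str:
    n_messages = len(json.loads(body)["messages"])
    return f"(reply to {n_messages} messages)"


print(answer_with_reflection("What is 17 * 6?", echo_transport,
                             "http://localhost:8000/v1", "my-model"))
```

Because the provider is reduced to a base URL and a model name, switching between a local server and a remote one only means changing those two arguments.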

https://github.com/antibitcoin/ReflectionAnyLLM

Project: LLM-Engines

LLM-Engines is a unified inference engine for large language models (LLMs). It serves open-source models through backends such as vLLM, SGLang, and Together, and also supports commercial models from OpenAI, Mistral, and Claude.

The project validates inference correctness by comparing outputs from different engines under the same parameters. Users can easily call different models via a simple API.
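A cross-engine check of this kind might look roughly like the sketch below. It does not use the LLM-Engines API: each engine is represented by a hypothetical callable that takes a prompt plus keyword parameters and returns text, and the similarity threshold is an arbitrary choice for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations


def compare_engines(engines, prompt, params, threshold=0.9):
    """Run `prompt` through every engine with identical `params`.

    Returns the raw outputs and the engine pairs whose outputs have a
    similarity ratio below `threshold`.
    """
    outputs = {name: generate(prompt, **params) for name, generate in engines.items()}
    mismatches = []
    for (name_a, out_a), (name_b, out_b) in combinations(outputs.items(), 2):
        similarity = SequenceMatcher(None, out_a, out_b).ratio()
        if similarity < threshold:
            mismatches.append((name_a, name_b, round(similarity, 3)))
    return outputs, mismatches


# Hypothetical stand-ins for real backends: two agree, one does not.
demo_engines = {
    "engine_a": lambda prompt, **params: prompt.upper(),
    "engine_b": lambda prompt, **params: prompt.upper(),
    "engine_c": lambda prompt, **params: prompt[::-1],
}

outputs, mismatches = compare_engines(
    demo_engines, "hello world", {"temperature": 0.0, "max_tokens": 16}
)
for name_a, name_b, score in mismatches:
    print(f"{name_a} and {name_b} disagree (similarity {score})")
```

Such a comparison is only meaningful with deterministic settings, for example a temperature of 0, since sampled outputs can differ even when both engines are correct.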

https://github.com/jdf-prog/LLM-Engines

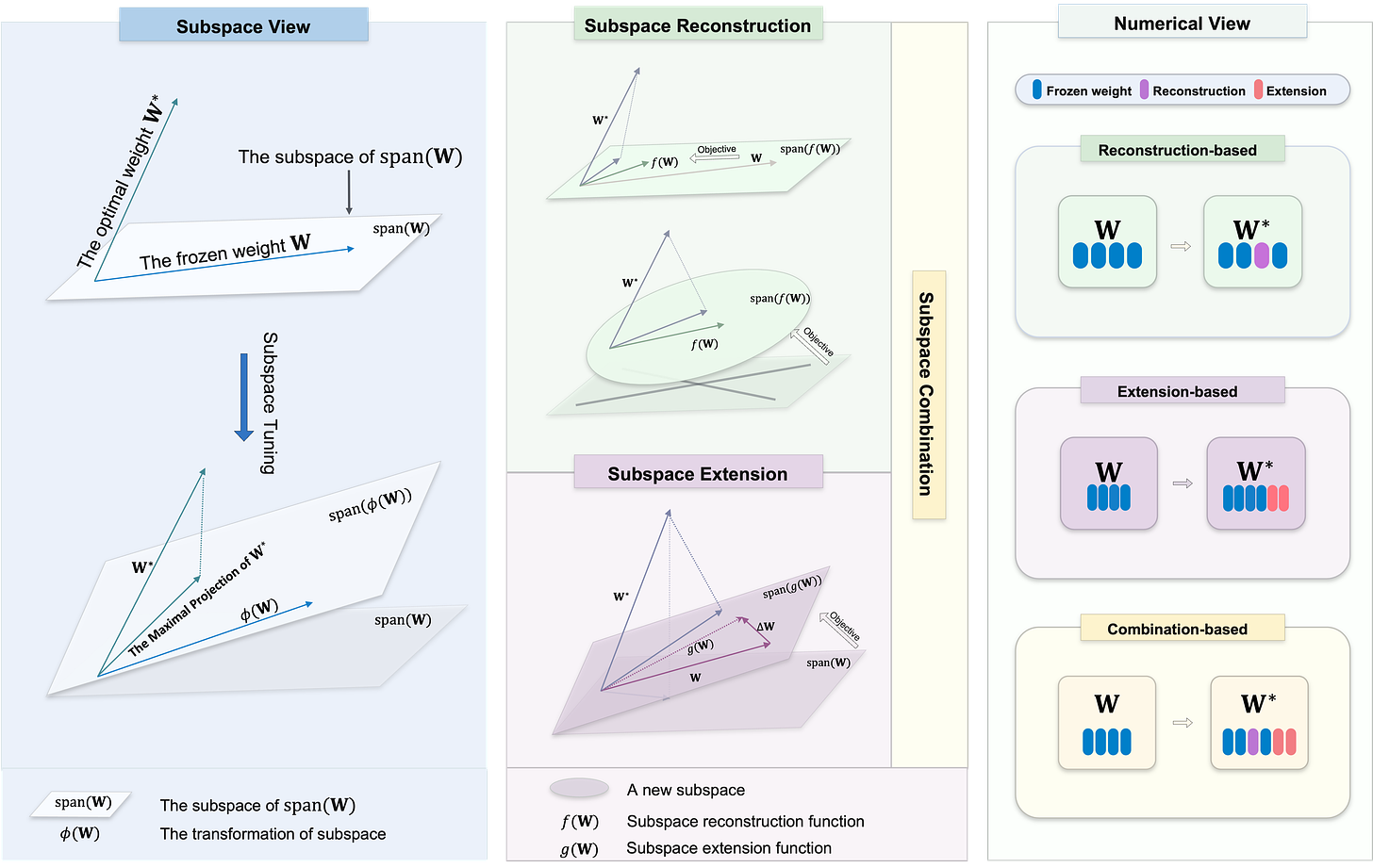

Project: Subspace-Tuning

Subspace-Tuning is a general framework designed to enable parameter-efficient fine-tuning using subspace methods.

Its goal is to adapt large pre-trained models for specific tasks by making minimal changes to the original parameters.

It does this by finding the optimal projection of the weights within a subspace. The project offers resources to help researchers and practitioners integrate it into their own work.
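To give a feel for why restricting updates to a subspace is parameter-efficient, the sketch below uses a LoRA-style low-rank update as a familiar stand-in. It is not taken from the Subspace-Tuning code, and the layer size and rank are arbitrary example values.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def low_rank_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for an update B @ A, with B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def apply_low_rank_update(W, B, A):
    """Return W + B @ A using plain nested lists; W itself stays frozen."""
    inner = len(A)
    return [
        [W[i][j] + sum(B[i][k] * A[k][j] for k in range(inner))
         for j in range(len(A[0]))]
        for i in range(len(B))
    ]


# Example values (assumptions): a 4096 x 4096 layer adapted at rank 8.
full = full_update_params(4096, 4096)
low = low_rank_update_params(4096, 4096, rank=8)
print(f"full: {full:,} params, low-rank: {low:,} params ({low / full:.2%})")

# Tiny numeric check of the update itself.
adapted = apply_low_rank_update([[1, 0], [0, 1]], [[1], [2]], [[3, 4]])
print(adapted)
```

In this example the low-rank update trains 65,536 parameters instead of 16,777,216, which is 1/256 of the full matrix.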