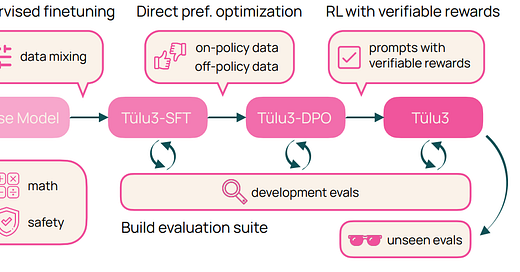

Tülu 3 Open-Source Model: Unlocks All "Post-Training" Secrets, Outperforms Llama 3.1 Instruct

Tülu 3 open-source model by AI2 redefines post-training, outperforming Llama 3.1 Instruct. Explore its 73-page technical report and groundbreaking methods!

The open-source model ecosystem welcomes a new heavyweight contender: Tülu 3.

Developed by the Allen Institute for Artificial Intelligence (AI2), Tülu 3 is currently available in 8B and 70B parameter versions (with a 405B version planned) and outperforms the Llama 3.1 Instruct models of the same size.

Its extensive 73-page technical report delves deeply into post-training details.

In recent discussions about whether Scaling Laws have plateaued, post-training has become a focal point.

Notably, OpenAI's recently launched o1 showed significant improvements on mathematics, coding, and long-horizon planning tasks. These gains are attributed to reinforcement learning during the post-training phase and to additional computation spent at inference time.

This has led some to propose a new scaling paradigm: Post-Training Scaling Laws, sparking community debates about computational resource allocation and post-training capabilities.

However, details on how to conduct effective post-training and which aspects most impact model performance remain scarce, as such knowledge is often proprietary.

Breaking that silence, AI2, previously known for redefining "open source" with the first fully open-source large model, has stepped forward again.

AI2 has not only open-sourced two new models, Tülu 3 8B and 70B (with a 405B version on the horizon), that outperform their Llama 3.1 Instruct counterparts, but has also published its post-training methods in detail in the technical report.