GPT-4o Arrives: The King of Multimodal AI

Ultimate AI: GPT-4o, Your Digital Companion, Launches Today!

On May 13, 2024, OpenAI launched GPT-4o, a new flagship model that can reason across audio, vision, and text in real time.

GPT-4o, where the "o" stands for "omni," changes how we communicate with computers. It accepts any combination of text, audio, and images as input. It can respond to audio input in as little as 232 ms, with an average of 320 ms, which is close to human response time in a conversation.

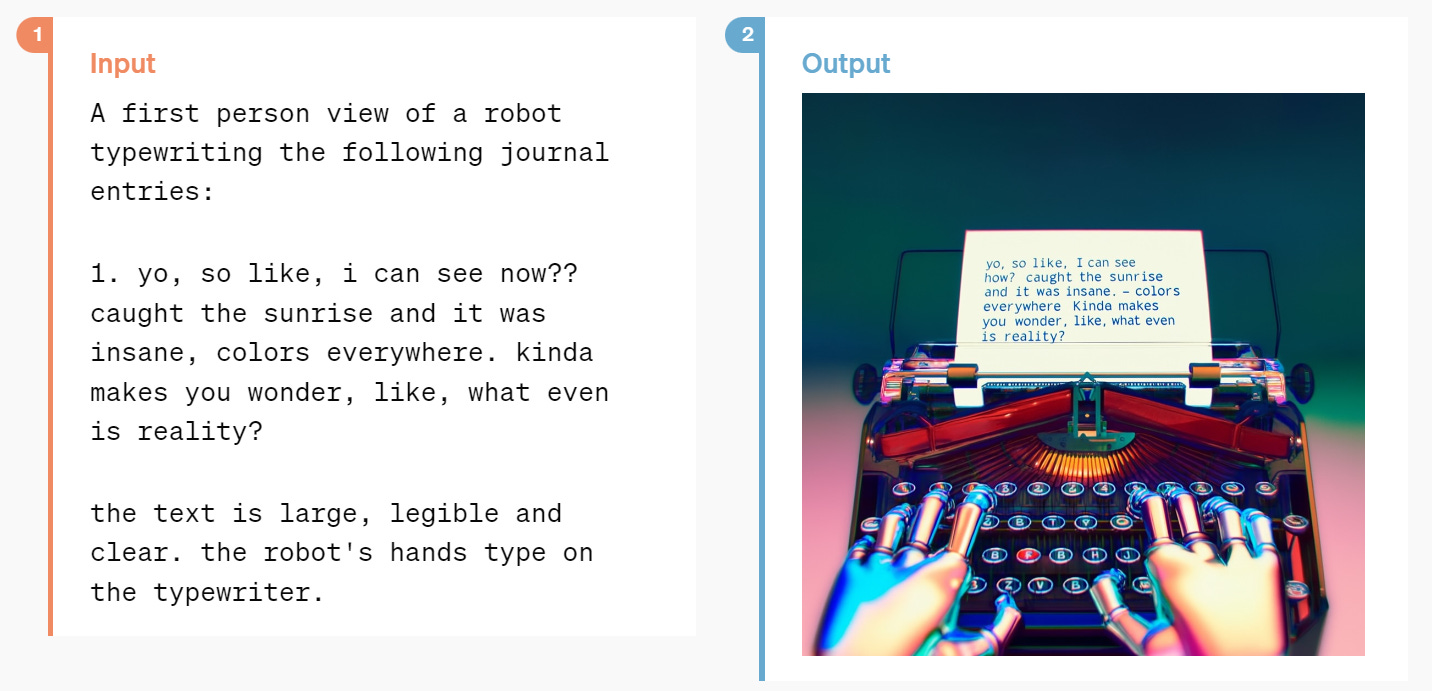

GPT-4o is also much better at understanding and discussing images than previous models.

GPT-4o will be available to free ChatGPT users, with usage limits. Plus users get a message limit up to five times higher than free users, and Team and Enterprise users get even higher limits.

GPT-4o is OpenAI's first model to handle text, vision, and audio with a single neural network, so OpenAI says it is only beginning to explore what the model can do and where its limits lie.

gpt2-chatbot: The Test Version of GPT-4o

In April, OpenAI CEO Sam Altman repeatedly mentioned "gpt2" in public, which turned out to be a hint that GPT-4o was coming. The test version, im-also-a-good-gpt2-chatbot, had already shown strong results in the LMSYS Chatbot Arena.

However, Elo ratings have limits as a measure of model skill. On simple prompts like "What's up," answers from different models are hard to tell apart, so no model can achieve a very high win rate. On more challenging prompts, especially coding, GPT-4o stood out: it scored more than 100 Elo points above OpenAI's previous best model.
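To put a 100-point gap in perspective, the standard Elo formula converts a rating difference into an expected win rate. The sketch below assumes the conventional 400-point logistic scale; the function name is illustrative, and the arena's own fitting procedure may differ in detail.

```python
def elo_expected_score(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score (wins, with ties counted as half) for the higher-rated side.

    rating_gap is the higher rating minus the lower one. The 400-point scale
    is the conventional Elo choice and is an assumption here.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    # Show how the expected score grows with the rating gap.
    for gap in (0, 50, 100, 200):
        share = elo_expected_score(gap)
        print(f"{gap:>3} Elo points ahead -> expected score {share:.1%}")
```

Under this formula, a 100-point lead corresponds to an expected score of roughly 64% in head-to-head comparisons, which is a clear but not overwhelming advantage.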

Support for Multiple Devices

GPT-4o is now officially available on desktop and mobile, and it is being rolled out gradually.

OpenAI is also launching a ChatGPT desktop app for macOS, intended for both free and paid users. A single keyboard shortcut (Option + Space) brings up ChatGPT, ready to answer your questions.

You can also discuss screenshots directly in the app without switching windows, which saves time at work.

The macOS app rolls out to Plus users starting today, and OpenAI will open it to more users in the coming weeks. A Windows version is also in the works, expected later this year.

Multimodal Capabilities

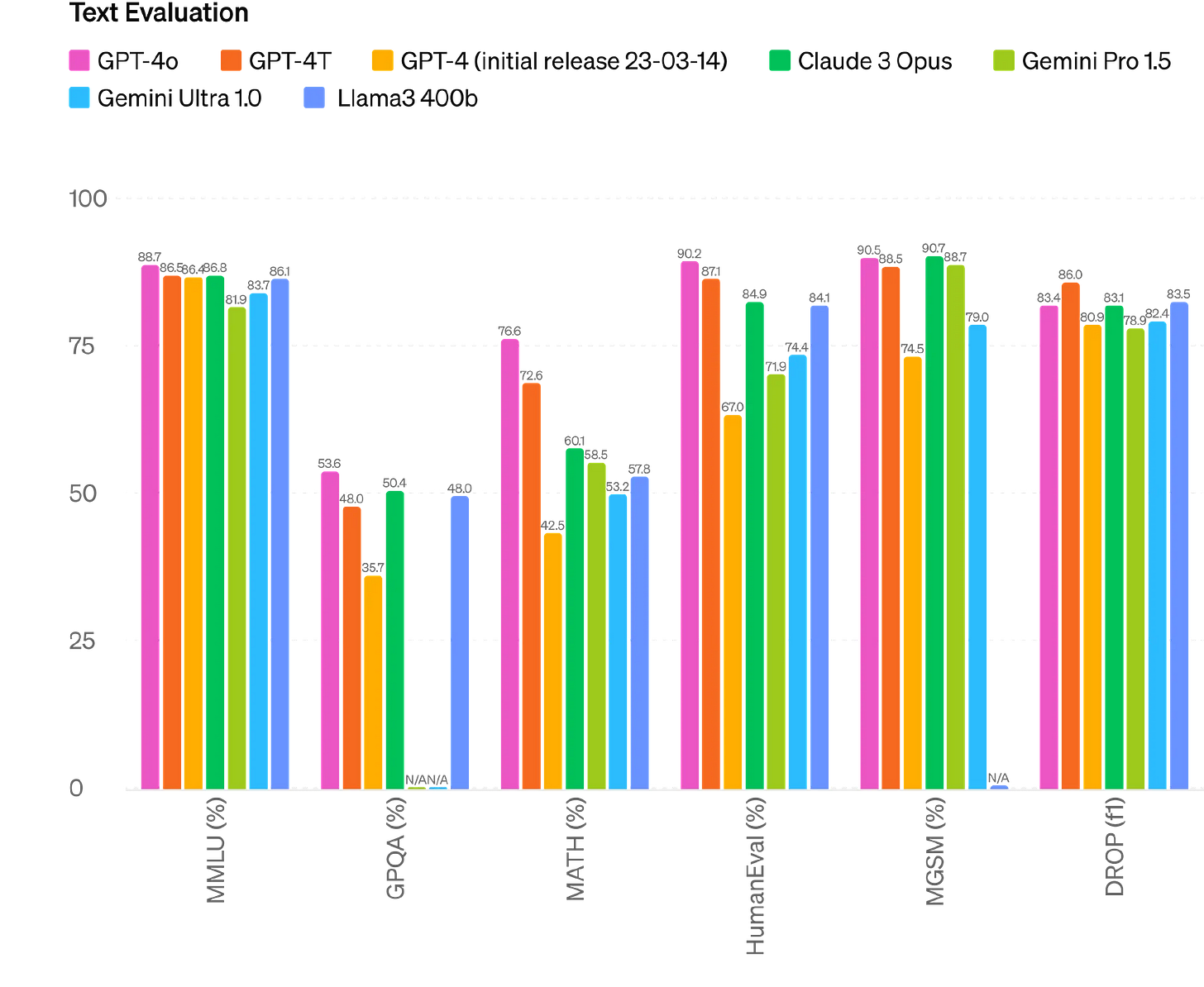

GPT-4o achieved a new high score of 88.7% on 0-shot CoT MMLU, a benchmark of general knowledge questions. "0-shot" means the model gets no worked examples in the prompt, and "CoT" (chain of thought) means it reasons step by step before answering.

On the traditional 5-shot no-CoT MMLU test, where the prompt includes five example questions and no step-by-step reasoning, GPT-4o also set a record with a score of 87.2%.

GPT-4o matches GPT-4 Turbo on text, reasoning, and coding. It also sets new highs on multilingual, audio, and vision capabilities.

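OpenAI highlighted 20 languages, chosen to represent different language families, to show how much more efficiently GPT-4o's new tokenizer compresses text. The same sentence takes fewer tokens, which gives the model an edge in non-English languages.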
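One way to see this compression yourself is to count tokens for the same text under both tokenizers. The sketch below assumes the open-source tiktoken package, where `cl100k_base` is the encoding used by GPT-4 Turbo and `o200k_base` is the one used by GPT-4o; the helper names are illustrative and not part of any library.

```python
def count_tokens(text: str, encoding_name: str) -> int:
    """Count the tokens an encoding produces for the given text."""
    # Imported lazily so compression_ratio works without tiktoken installed.
    import tiktoken  # pip install tiktoken (0.7.0 or later for o200k_base)

    return len(tiktoken.get_encoding(encoding_name).encode(text))


def compression_ratio(old_tokens: int, new_tokens: int) -> float:
    """How many times fewer tokens the new tokenizer needs (1.0 means no change)."""
    if new_tokens <= 0:
        raise ValueError("new_tokens must be positive")
    return old_tokens / new_tokens


def compare_tokenizers(text: str) -> tuple:
    """Return (old_count, new_count, ratio) for GPT-4 Turbo vs. GPT-4o encodings."""
    old = count_tokens(text, "cl100k_base")  # GPT-4 Turbo tokenizer
    new = count_tokens(text, "o200k_base")   # GPT-4o tokenizer
    return old, new, compression_ratio(old, new)
```

Calling `compare_tokenizers` on a sentence in a non-English language returns both counts and their ratio. Note that tiktoken downloads the encoding files the first time each one is used.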

In the coming weeks, ChatGPT Plus users will experience the new voice mode powered by GPT-4o.

API

Developers can now access GPT-4o through the API as a text and vision model. Compared with GPT-4 Turbo, it is twice as fast, costs half as much, and has rate limits five times higher.
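As a rough illustration of what "text and vision" access looks like, here is a minimal sketch using the official `openai` Python SDK (v1 or later) and its Chat Completions endpoint. The helper names, the question, and the image URL are hypothetical placeholders; the API key is assumed to be in the `OPENAI_API_KEY` environment variable.

```python
import os


def build_vision_messages(question: str, image_url: str) -> list:
    """Build a chat payload that pairs a text question with one image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def ask_gpt4o(question: str, image_url: str) -> str:
    """Send the question and image to GPT-4o and return the text reply."""
    # Imported lazily so the payload helper works without the SDK installed.
    from openai import OpenAI  # pip install openai (v1 or later)

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_vision_messages(question, image_url),
    )
    return response.choices[0].message.content


if __name__ == "__main__":
    # Hypothetical image URL: replace it with a real, publicly reachable one.
    url = "https://example.com/chart.png"
    if os.environ.get("OPENAI_API_KEY"):
        print(ask_gpt4o("What does this chart show?", url))
    else:
        # Without a key, just show the request payload that would be sent.
        print(build_vision_messages("What does this chart show?", url))
```

Keeping the payload builder separate from the network call makes it easy to inspect or test the request without spending API credits.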

In the coming weeks, OpenAI plans to introduce new audio and video features in the API to a select group of trusted partners.

For more examples, visit: https://openai.com/index/hello-gpt-4o/

Hi! My name is Meng. Thank you for reading and engaging with this piece. If you’re new here, make sure to follow me. (Tip: I’ll engage with, and support, your work. 🤫thank me later).
