Vector Database Zilliz: How Long-Term Thinkers Conquer the Global Market

Explore how vector databases like Zilliz revolutionize AI, enabling large models with long-term memory, dynamic updates, and cutting-edge applications.

The turning point came on March 23, 2023, with a routine update from OpenAI.

On that day, OpenAI released a ChatGPT plugin called ChatGPT-retrieval-plugin. In the plugin’s official use case examples, OpenAI specifically highlighted vector databases as an indispensable component for large model products to achieve long-term memory.

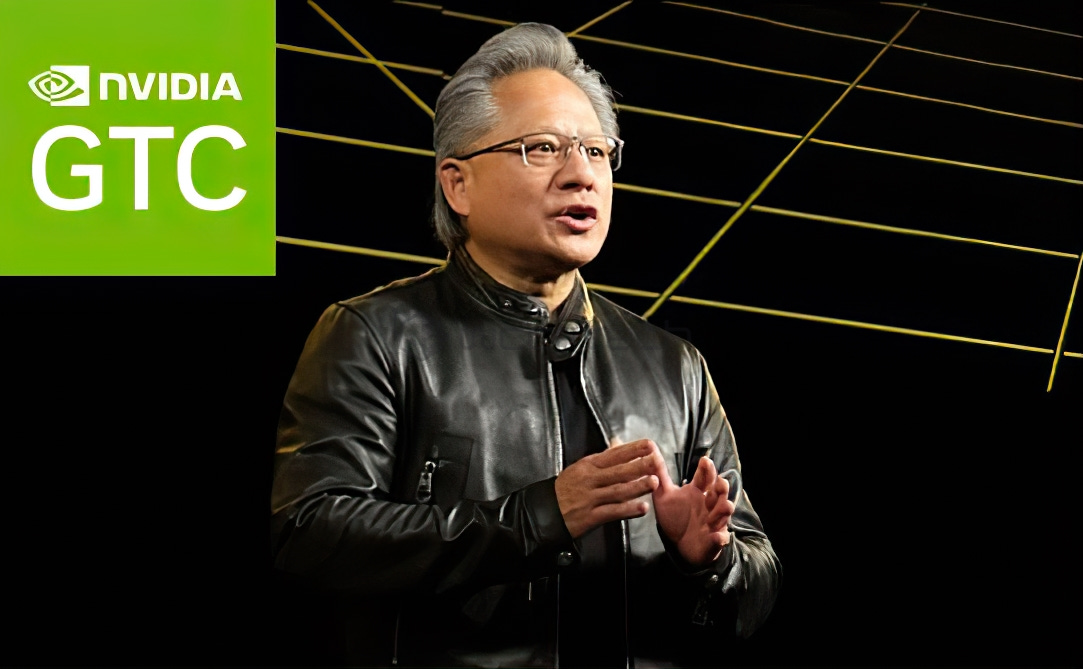

Coincidentally, just three days earlier at the NVIDIA GTC 2023 conference, NVIDIA founder Jensen Huang had also emphasized vector databases. Zilliz, a previously obscure vector database startup, was invited to speak on stage three times during the event. Vector databases and large language models became the most discussed topics of that year's GTC, second only to chips.

From that day forward, open-source communities and venture capital markets worldwide saw an explosive surge in interest in vector database projects, drawing a steep growth curve.

Milvus, the project from veteran player Zilliz, saw its GitHub stars grow from 10,000 to 30,000 in just two years. In what was once a sparsely populated field, startups like Pinecone and Weaviate emerged as “dedicated vector databases,” springing up like mushrooms after rain. Billions of dollars in venture capital flooded into these startups.

Amid this feverish enthusiasm came rapid but chaotic growth:

Jeff Delaney, a Google developer expert and creator of the YouTube channel Fireship, was able to push his vector database startup, Rektor, to a valuation of $420 million despite having zero revenue, zero business plan, and zero demonstrable code. Star startups admitted that their products were merely built on top of ClickHouse and HNSWlib, adding vector retrieval and Python wrappers before going to market.

Even in the secondary market, traditional database management companies saw their stock prices skyrocket on mere announcements of developing vector databases. Major corporations rolled out in-house vector database products in less than three months from project inception.

At the time, everyone believed that every era has its defining infrastructure. If the Industrial Revolution had water, coal, and electricity, the Information Age had IOE + Wintel, and the mobile era had Qualcomm + Android + Snowflake, then why shouldn't the AI era have GPU + large models + vector databases?

Owning vector database source code was seen as a ticket to a dream team poised for a trillion-dollar AI future.

What was overlooked, however, was that violent delights often have violent ends. Like the repeated “database wars” of history, in a market characterized by economies of scale, the 80-20 rule had already written the future for most players.

A New Trillion-Dollar Blue Ocean

Before delving into the market's enthusiasm for vector databases, it’s important to first clarify what they are and their relationship with large models.

As the name suggests, vector databases are designed to store and manage vectors. They stand in contrast to traditional relational databases like Oracle, MySQL, and PostgreSQL, and to NoSQL databases like MongoDB that rose during the Web 2.0 era.

Unlike these systems, vector databases excel in storing and managing unstructured data, such as images, videos, audio files, and documents, which cannot be precisely described using tables (structured formats).
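To make the idea concrete, here is a minimal sketch of what "storing and managing vectors" can look like. It assumes each piece of unstructured data has already been converted into a numeric vector (an embedding) and then finds the stored items closest to a query vector by cosine similarity. The file names, the three-dimensional vectors, and the `search` helper are all hypothetical and chosen for illustration. Production systems such as Milvus use much higher-dimensional embeddings and approximate indexes rather than the brute-force scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "database": item name -> embedding vector.
# Real embeddings come from a model and usually have hundreds of dimensions.
store = {
    "cat_photo.jpg": [0.9, 0.1, 0.0],
    "dog_photo.jpg": [0.8, 0.3, 0.1],
    "invoice.pdf": [0.0, 0.2, 0.9],
}


def search(query, store, top_k=2):
    """Brute-force search: score every stored vector, return the top_k names."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in store.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# A query vector that points in roughly the same direction as the two photos.
results = search([1.0, 0.0, 0.0], store)
print(results)
```

The point of the sketch is the query style: instead of matching exact values in table columns, the lookup ranks items by how close their vectors are to the query, which is what lets images or documents be retrieved by similarity.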