Open Source Today (2024-09-20): Alibaba Unveils Ovis 1.6 – A New Multimodal Language Model

Explore the latest AI projects: Alibaba Ovis 1.6, CogVideoX, GRIN-MoE, the Void code editor, and EzAudio, all driving innovation in multimodal models and efficiency.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: Alibaba International Ovis 1.6

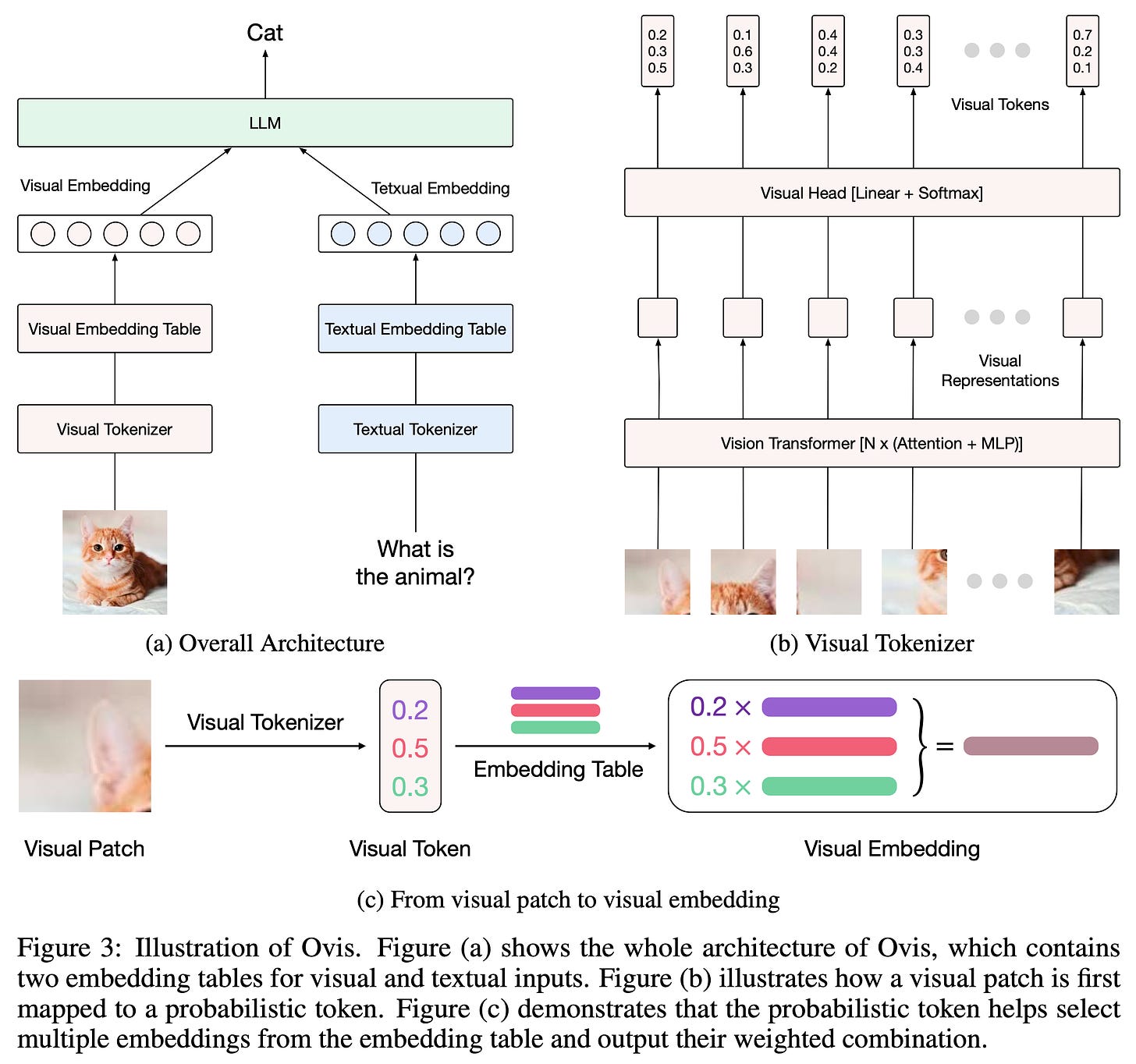

Ovis (Open VISion) is a new multimodal large language model (MLLM) designed to align visual and text embeddings in a structured way.

Version 1.6 improves the model's performance through better handling of high-resolution images and improved training data.

The Ovis architecture consists of three parts: a visual tokenizer, a visual embedding table, and a large language model. The visual tokenizer maps continuous visual features to probabilistic visual tokens, and a learnable visual embedding table turns those tokens into structured visual embeddings, mirroring how text tokens are embedded. The visual and text embeddings are then processed together by the LLM for multimodal tasks.
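
To make the embedding-table idea concrete, here is a minimal sketch, assuming our reading of the Ovis design: each image patch gets a probability distribution over a visual vocabulary, and its embedding is the probability-weighted sum of the table's rows, while text tokens use an ordinary lookup. All sizes, weights, and function names (`visual_embedding`, `text_embedding`) are hypothetical toy choices, not the Ovis code.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def visual_embedding(patch_feature, head, visual_table):
    """Embed one continuous patch feature as a probability-weighted
    mix of rows in a visual embedding table.

    head:         K weight vectors (one per visual word) scoring the feature
    visual_table: K embedding vectors of length d_model
    Returns (embedding, probabilities over the K visual words).
    """
    # Hypothetical tokenizer head: logits over a K-word visual vocabulary.
    logits = [sum(w * x for w, x in zip(row, patch_feature)) for row in head]
    probs = softmax(logits)  # a "probabilistic" visual token
    d_model = len(visual_table[0])
    # Expected embedding: sum_k probs[k] * visual_table[k]
    emb = [sum(p * visual_table[k][j] for k, p in enumerate(probs)) for j in range(d_model)]
    return emb, probs


def text_embedding(token_id, text_table):
    """Text tokens use an ordinary (one-hot) table lookup."""
    return list(text_table[token_id])


# Hypothetical toy sizes and random weights, for illustration only.
random.seed(0)
FEAT_DIM, VOCAB_K, D_MODEL = 4, 8, 6
head = [[random.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(VOCAB_K)]
visual_table = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(VOCAB_K)]
text_table = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(100)]

patches = [[random.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(3)]
visual_embs = [visual_embedding(p, head, visual_table)[0] for p in patches]
text_embs = [text_embedding(t, text_table) for t in (5, 17)]

# Both kinds of embeddings share one space and form a single input sequence.
sequence = visual_embs + text_embs
print(len(sequence), len(sequence[0]))  # 5 6
```

If the tokenizer's distribution is one-hot, the visual embedding reduces to a plain table lookup, exactly like a text token; that parallel is what "structural" alignment refers to here.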

https://github.com/AIDC-AI/Ovis

Project: CogVideoX-5B-I2V

Zhipu AI released CogVideoX-5B-I2V, a new model in the CogVideoX series, along with its captioning model, cogvlm2-llama3-caption.

The team developed an efficient 3D variational autoencoder (3D VAE), which compresses the original video space by 98%, greatly reducing training costs and difficulty for video diffusion models.
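
The 98% figure can be sanity-checked with a little arithmetic. This sketch assumes the factors we understand the CogVideoX paper to use (a causal VAE that keeps the first frame and compresses the rest 4x in time, 8x8 in space, with 16 latent channels) and a 49-frame 480x720 clip; treat those numbers as assumptions rather than values quoted from the repository.

```python
def latent_shape(frames, height, width, t_down=4, s_down=8, latent_channels=16):
    """Latent (C, T, H, W) for a causal 3D VAE that keeps the first frame
    and compresses the remaining frames t_down-fold in time.
    The default factors are assumptions based on the CogVideoX paper."""
    if (frames - 1) % t_down or height % s_down or width % s_down:
        raise ValueError("frames - 1, height and width must divide evenly")
    return (latent_channels, 1 + (frames - 1) // t_down, height // s_down, width // s_down)


def compression_ratio(frames, height, width, rgb_channels=3, **factors):
    """Number of latent values divided by number of RGB pixel values."""
    c, t, h, w = latent_shape(frames, height, width, **factors)
    return (c * t * h * w) / (rgb_channels * frames * height * width)


shape = latent_shape(49, 480, 720)
ratio = compression_ratio(49, 480, 720)
print(shape)  # (16, 13, 60, 90)
print(f"latent size: {ratio:.2%} of the pixels, saving {1 - ratio:.1%}")
# latent size: 2.21% of the pixels, saving 97.8%
```

Under these assumptions the latent holds about 2.2% as many values as the raw video, which matches the "roughly 98% smaller" claim.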

The 3D VAE is trained with a combination of L2 loss, LPIPS perceptual loss, and a GAN loss from a 3D discriminator.

With CogVideoX-5B-I2V, you can provide a single image plus a text prompt to generate a video. The CogVideoX series now supports text-to-video, video extension, and image-to-video tasks.

https://github.com/THUDM/CogVideo

Project: GRIN-MoE


GRIN-MoE is an efficient sparse mixture-of-experts (MoE) model with 6.6 billion activated parameters that performs especially well on coding and math tasks.

It uses SparseMixer-v2 to estimate the gradients related to expert routing, whereas conventional MoE training uses the expert gating values as a proxy for gradient estimation.
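
To make "expert routing" concrete, here is a minimal sketch of a generic top-2 MoE forward pass (top-2 is a common choice; the toy router and experts are hypothetical). It does not implement SparseMixer-v2: with ordinary backpropagation, no gradient flows through the discrete `sorted(...)[:2]` choice itself, and the router learns only through the gate weights, which is the gap SparseMixer-v2 is designed to address.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def top2_moe(token, router, experts):
    """Generic top-2 mixture-of-experts forward pass for one token.

    router:  one weight vector per expert; router logit = dot(weights, token)
    experts: one callable per expert, mapping a vector to a vector
    Returns (output vector, chosen expert indices, gate weights).
    """
    logits = [sum(w * x for w, x in zip(r, token)) for r in router]
    # Discrete routing decision: keep the two highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:2]
    # Gate weights renormalized over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for g, i in zip(gates, chosen):
        y = experts[i](token)  # only the chosen experts are evaluated (sparsity)
        out = [o + g * v for o, v in zip(out, y)]
    return out, chosen, gates


# Toy setup: 4 hypothetical experts that scale their input by 1, 2, 3, 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, chosen, gates = top2_moe([2.0, 1.0], router, experts)
print(chosen)  # [0, 1]
print(out)
```

Only two of the four experts run per token, which is why the activated parameter count is much smaller than the total.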

GRIN-MoE is trained without expert parallelism or token dropping. It is designed for memory- and compute-constrained environments and latency-sensitive scenarios, with the aim of accelerating research on language and multimodal models.
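
For readers unfamiliar with "token dropping", this sketch shows what it means in a conventional capacity-limited MoE layer, using top-1 routing for simplicity. The capacity-factor rule and the toy routing choices are illustrative assumptions, not GRIN-MoE code; a dropless setup like the one described for GRIN-MoE corresponds to the uncapped case.

```python
import math


def dispatch(expert_choices, num_experts, capacity):
    """Top-1 dispatch with a fixed per-expert capacity.

    Tokens that arrive after their expert is full are dropped: they skip the
    expert computation (in typical MoE layers they pass through only via the
    residual connection). Returns (kept token ids, dropped token ids).
    """
    load = [0] * num_experts
    kept, dropped = [], []
    for tok, e in enumerate(expert_choices):
        if load[e] < capacity:
            load[e] += 1
            kept.append(tok)
        else:
            dropped.append(tok)
    return kept, dropped


# Hypothetical top-1 choices for 8 tokens; expert 0 is over-subscribed.
choices = [0, 0, 0, 1, 0, 2, 0, 1]
num_experts = 4
capacity_factor = 1.0  # tokens per expert = capacity_factor * tokens / experts
capacity = math.ceil(capacity_factor * len(choices) / num_experts)  # 2

print(dispatch(choices, num_experts, capacity))  # ([0, 1, 3, 5, 7], [2, 4, 6])
# Dropless routing: no cap, every token reaches its chosen expert.
print(dispatch(choices, num_experts, len(choices)))  # ([0, 1, 2, 3, 4, 5, 6, 7], [])
```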

https://github.com/microsoft/GRIN-MoE

Project: Void

Void is an open-source code editor forked from VS Code.

It aims to give developers a powerful coding environment with built-in AI assistance, similar to ChatGPT and GitHub Copilot.

Void offers a wide range of extensions and plugins to help developers increase coding efficiency.

https://github.com/voideditor/void

Project: EzAudio

EzAudio is a diffusion-based text-to-audio model. It aims to generate high-quality audio for real-world applications while reducing computational cost.

EzAudio uses an efficient diffusion transformer (DiT) to generate audio faster while maintaining high sound quality.
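
As background on what "based on diffusion" means, here is a minimal sketch of a generic DDPM-style forward (noising) process and its inversion given a noise estimate. The linear beta schedule, the epsilon parameterization, and the 8-value stand-in latent are all assumptions for illustration; EzAudio's actual schedule and prediction target may differ.

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta schedule (DDPM-style)."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, noise, alpha_bar):
    """Forward process q(x_t | x_0): x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1 - alpha_bar) * n for x, n in zip(x0, noise)]


def predict_x0(xt, eps_hat, alpha_bar):
    """Invert the forward process given a noise estimate eps_hat."""
    return [(x - math.sqrt(1 - alpha_bar) * e) / math.sqrt(alpha_bar) for x, e in zip(xt, eps_hat)]


random.seed(0)
alpha_bars = linear_alpha_bars(1000)
x0 = [random.uniform(-1, 1) for _ in range(8)]  # stand-in for an audio latent
eps = [random.gauss(0, 1) for _ in range(8)]
t = 500
xt = add_noise(x0, eps, alpha_bars[t])
# A trained denoiser (a DiT, in EzAudio's case) would estimate eps from
# (xt, t, text prompt); here we plug in the true noise to check the algebra.
x0_rec = predict_x0(xt, eps, alpha_bars[t])
print(max(abs(a - b) for a, b in zip(x0, x0_rec)))  # close to zero
```

Generation runs this in reverse: starting from pure noise, the model repeatedly estimates the noise and steps toward a clean latent, which a decoder then turns into a waveform.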