Performance Differences Between NVIDIA A10 and A10G!

Uncover the performance secrets of NVIDIA A10 vs A10G! Explore their subtle differences, memory bandwidth impact, and practical GPU selection tips.

GPU Battle: Who Will Reign Supreme?

What’s the untold story behind NVIDIA’s "twin brothers," the A10 and A10G?

In the world of GPUs, subtle differences often hide behind seemingly identical specs.

Today, let’s uncover the little-known secrets between NVIDIA A10 and A10G.

Surface-Level "Similarity"

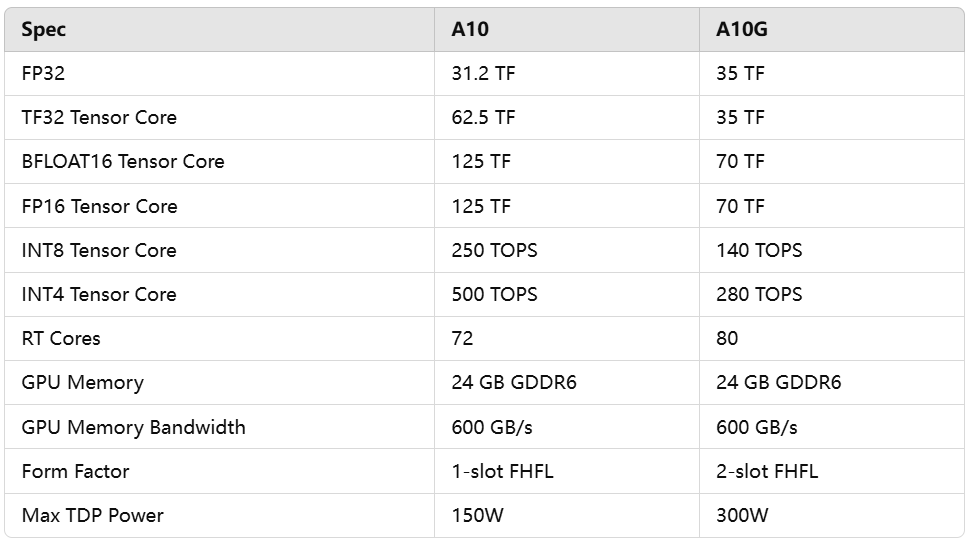

At first glance, the A10 and A10G look like exact clones:

24GB GDDR6 memory

600 GB/s memory bandwidth

Both based on the Ampere architecture

But hold on—the devil is in the details!

Performance Showdown: Who’s the Better Choice?

Surprisingly, even though their tensor core throughput differs substantially, their performance in machine learning inference tasks is almost identical.

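Here’s the key: most ML inference tasks are "memory-bound" rather than "compute-bound." Their speed is limited by how fast data moves out of GPU memory, not by how fast the GPU can do arithmetic.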
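The standard roofline model is one way to make this precise. With arithmetic intensity $I$ (FLOPs performed per byte moved from memory), peak compute $P_{\text{peak}}$, and memory bandwidth $B_{\text{mem}}$, attainable throughput is capped by whichever limit is lower:

$$
P_{\text{attainable}} = \min\left(P_{\text{peak}},\; I \cdot B_{\text{mem}}\right)
$$

A workload is memory-bound whenever $I < P_{\text{peak}} / B_{\text{mem}}$. In that regime, extra compute does not raise $P_{\text{attainable}}$; only more bandwidth helps.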

On paper, the A10 pulls well ahead in FP16 tensor core throughput:

A10: 125 TFLOPS

A10G: 70 TFLOPS

But don’t let the numbers fool you. For models like Llama 2 7B (7 billion parameters), memory bandwidth is the real performance bottleneck, and both cards have the same 600 GB/s.
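A quick back-of-the-envelope calculation shows why bandwidth dominates token generation. The sketch below assumes every weight is streamed from memory once per generated token, weights are stored in FP16, 7e9 stands in for Llama 2 7B's parameter count, and the card sustains its full spec-sheet bandwidth. These are illustrative assumptions, not measurements, and `min_ms_per_token` is a hypothetical helper.

```python
# Rough lower bound on per-token decode latency when a model is memory-bound.
# Assumptions (illustrative): every weight is streamed from GPU memory once per
# generated token, weights are FP16 (2 bytes each), 7e9 stands in for
# Llama 2 7B's parameter count, and the GPU sustains its full 600 GB/s.

def min_ms_per_token(num_params: float, bytes_per_param: float,
                     bandwidth_gb_s: float) -> float:
    """Minimum milliseconds per generated token imposed by memory bandwidth."""
    weight_bytes = num_params * bytes_per_param          # bytes read per token
    seconds = weight_bytes / (bandwidth_gb_s * 1e9)      # time to stream them
    return seconds * 1e3


# Same bandwidth on both cards, so the same floor applies to both.
for gpu in ("A10", "A10G"):
    ms = min_ms_per_token(7e9, 2, 600)
    print(f"{gpu}: >= {ms:.1f} ms/token, <= {1000 / ms:.0f} tokens/s")
```

Both cards land at roughly 23 ms per token (about 43 tokens/s at most), and the tensor core figures never enter the calculation. Real-world throughput will be lower once attention, the KV cache, and kernel overheads are included.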

Behind the Scenes: Why Are They So Similar?

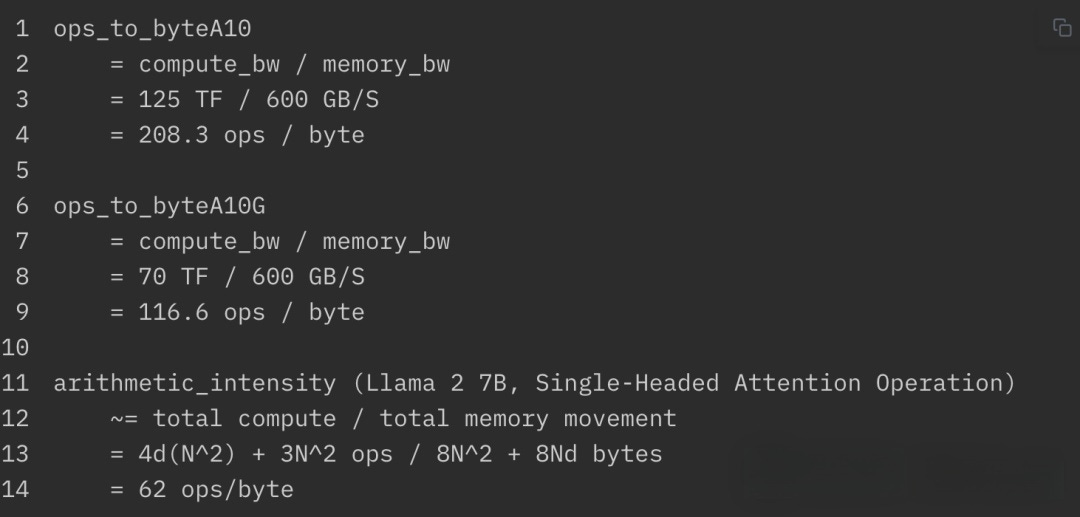

Comparing the model's arithmetic intensity with each GPU's ops:byte ratio (peak compute divided by memory bandwidth) explains why:

Arithmetic intensity of the Llama 2 7B model: 62 ops/byte

Ops:byte ratio of the A10: 208.3 ops/byte (125 TFLOPS / 600 GB/s)

Ops:byte ratio of the A10G: 116.7 ops/byte (70 TFLOPS / 600 GB/s)

The verdict?

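The model's 62 ops/byte sits well below both ratios, so Llama 2 7B is memory-bound on either card. The tensor cores spend much of their time waiting on memory, the identical 600 GB/s bandwidth sets the pace, and the A10's extra compute mostly goes unused.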
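The comparison above is a roofline check, and it can be reproduced in a few lines. This sketch plugs in the FP16 tensor core and bandwidth figures quoted in this article plus the 62 ops/byte intensity for Llama 2 7B; the function names are illustrative, not from any library.

```python
# Roofline check: is a workload memory-bound or compute-bound on each GPU?
# Spec figures are the FP16 tensor core and bandwidth numbers quoted above;
# 62 ops/byte is the Llama 2 7B arithmetic intensity from the analysis.

GPUS = {
    "A10":  {"peak_tflops": 125.0, "bandwidth_gb_s": 600.0},
    "A10G": {"peak_tflops": 70.0,  "bandwidth_gb_s": 600.0},
}
LLAMA2_7B_INTENSITY = 62.0  # ops/byte


def ops_per_byte(peak_tflops: float, bandwidth_gb_s: float) -> float:
    """Ridge point: FLOPs the GPU can execute per byte it can move."""
    return (peak_tflops * 1e12) / (bandwidth_gb_s * 1e9)


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_gb_s: float) -> float:
    """Roofline: throughput is capped by peak compute or by intensity x bandwidth."""
    return min(peak_tflops, intensity * bandwidth_gb_s / 1e3)


for name, spec in GPUS.items():
    ridge = ops_per_byte(**spec)
    bound = "memory-bound" if LLAMA2_7B_INTENSITY < ridge else "compute-bound"
    perf = attainable_tflops(LLAMA2_7B_INTENSITY, **spec)
    print(f"{name}: ridge {ridge:.1f} ops/byte, {bound}, ~{perf:.1f} TFLOPS attainable")
```

Both GPUs report the same ceiling of about 37.2 TFLOPS (62 ops/byte × 600 GB/s), which is far below either card's peak. That is the roofline view of "almost identical."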

AWS’s "Customized Edition": The Story of A10G

The A10G is essentially a custom variant built for AWS. It trades some tensor core performance for slightly better CUDA core performance.

Practical Insights

For most ML inference tasks, the A10 and A10G are nearly interchangeable.

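The only scenario where there might be a noticeable difference? Batch inference. Large batches reuse each weight across many requests, which raises arithmetic intensity. Once intensity climbs past the A10G's ops:byte ratio, the workload starts to become compute-bound, and the A10’s higher computing power might give it a slight edge.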

Selection Tips

When choosing an inference GPU, follow this golden rule: Prioritize memory size first, bandwidth second, and compute capacity last.
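One way to read this rule is to treat memory as a hard requirement, then prefer higher bandwidth, and use compute only to break ties. The sketch below encodes that reading; `GpuSpec` and `rank_for_inference` are hypothetical helpers, and the 16 GB requirement is an illustrative figure (roughly FP16 weights for a 7B model plus some headroom), not a recommendation.

```python
from dataclasses import dataclass

# Hypothetical helper illustrating one reading of the selection rule:
# memory capacity is a hard requirement, then higher bandwidth wins,
# and peak compute only breaks ties.


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: float
    bandwidth_gb_s: float
    tensor_tflops: float


def rank_for_inference(gpus: list[GpuSpec], required_memory_gb: float) -> list[GpuSpec]:
    """Filter by memory, then sort by bandwidth, then by compute (best first)."""
    fits = [g for g in gpus if g.memory_gb >= required_memory_gb]
    return sorted(fits, key=lambda g: (g.bandwidth_gb_s, g.tensor_tflops), reverse=True)


CANDIDATES = [
    GpuSpec("A10G", 24, 600, 70),
    GpuSpec("A10", 24, 600, 125),
]

print([g.name for g in rank_for_inference(CANDIDATES, required_memory_gb=16)])
```

The A10 lands first only because compute is the last tie-breaker; on the two criteria the rule ranks highest, the cards are identical.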

With 24GB of memory, both A10 and A10G meet the "entry ticket" for many large models. Either one should serve you well.
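A rough weight-footprint estimate shows what that 24 GB buys. This sketch counts weights only (KV cache, activations, and framework overhead are ignored), uses 7e9 as a round parameter count, and takes 1 GB as 1e9 bytes; `weight_gb` is a hypothetical helper.

```python
# Back-of-the-envelope weight footprint on a 24 GB card.
# Assumptions: weights only (KV cache, activations, and framework overhead
# are ignored), 7e9 as a round parameter count, and 1 GB = 1e9 bytes.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
GPU_MEMORY_GB = 24.0


def weight_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights at the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    gb = weight_gb(7e9, dtype)
    status = "fits" if gb <= GPU_MEMORY_GB else "does not fit"
    print(f"7B @ {dtype}: {gb:.1f} GB of weights, {status} in {GPU_MEMORY_GB:.0f} GB")
```

At FP16 a 7B model needs about 14 GB for weights, leaving roughly 10 GB for the KV cache and runtime on either card, while FP32 (about 28 GB) would not fit at all.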

In the GPU world, numbers aren’t everything.

True performance often lies in the seemingly ordinary details.