Today's Open Source (2024-09-23): Multimodal Large Language Model Oryx

Discover innovative projects such as Oryx MLLM, AgentTorch, MAgICoRe, OneGen, and VPTQ that push the boundaries of AI, from visual understanding to large-scale simulation.

Here are some interesting open-source AI models and frameworks I want to share today:

Project: Oryx

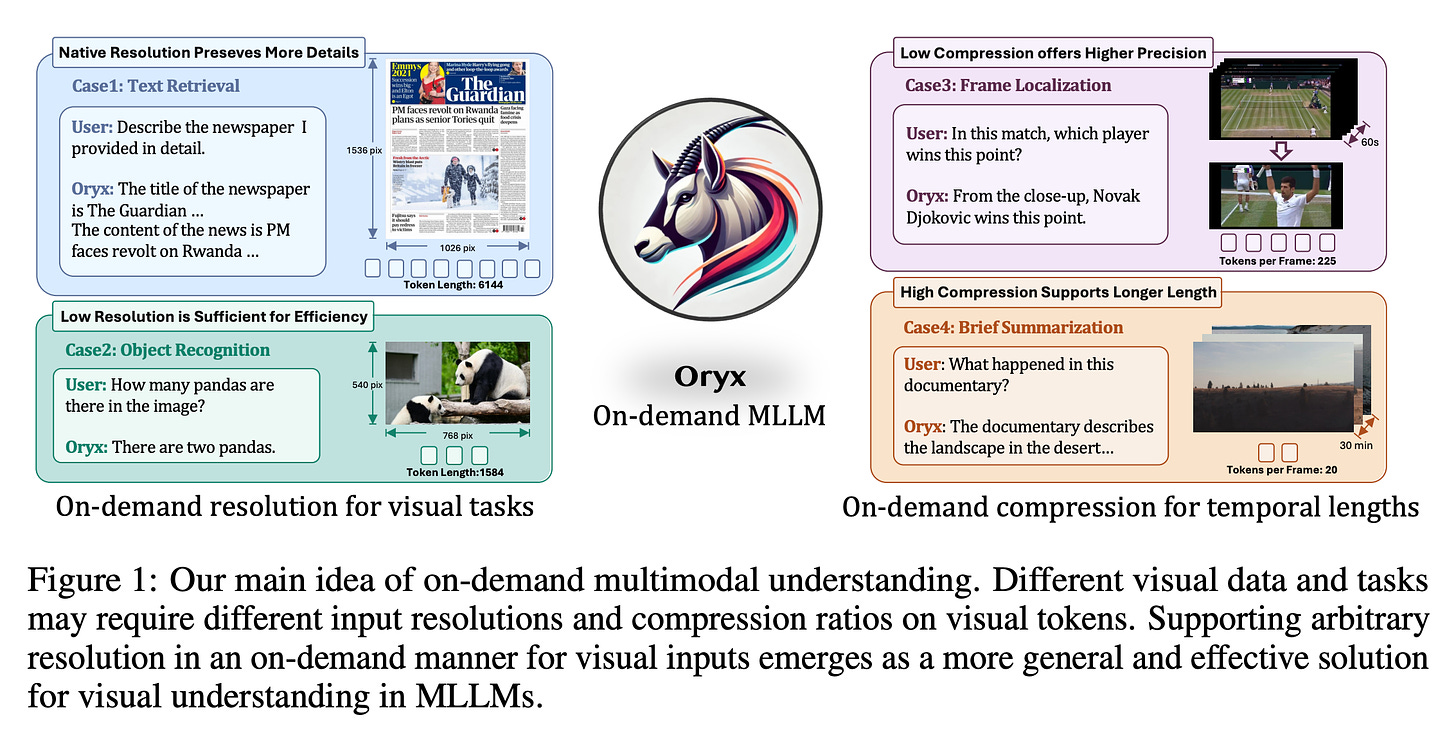

Oryx is a multimodal large language model (MLLM) designed to overcome the limitations of current models when handling visual data of varying resolutions and durations.

Oryx introduces two key innovations for dynamically processing visual input of any resolution and duration. First, a pre-trained OryxViT model encodes images at arbitrary resolutions into visual representations suitable for LLMs. Second, a dynamic compression module compresses visual tokens by anywhere from 1x to 16x, as needed.
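
To make the 1x to 16x range concrete, here is a minimal sketch of one way to compress a grid of visual tokens: average-pooling non-overlapping patches, where a pooling side of 1, 2, or 4 gives a 1x, 4x, or 16x reduction in token count. This is an assumption for illustration, not Oryx's actual compression module, and `compress_tokens` and the frame-count policy in `choose_side` are hypothetical.

```python
from typing import List

Vector = List[float]
Grid = List[List[Vector]]  # H rows x W columns of D-dimensional token vectors


def compress_tokens(grid: Grid, side: int) -> Grid:
    """Average-pool non-overlapping side x side patches of visual tokens.

    side=1, 2, 4 gives a 1x, 4x, 16x reduction in the number of tokens.
    """
    height, width = len(grid), len(grid[0])
    if height % side or width % side:
        raise ValueError("grid height and width must be divisible by side")
    dim = len(grid[0][0])
    count = side * side
    pooled: Grid = []
    for top in range(0, height, side):
        row: List[Vector] = []
        for left in range(0, width, side):
            acc = [0.0] * dim
            for r in range(top, top + side):
                for c in range(left, left + side):
                    for d in range(dim):
                        acc[d] += grid[r][c][d]
            row.append([x / count for x in acc])  # mean of the patch
        pooled.append(row)
    return pooled


def choose_side(num_frames: int) -> int:
    """Hypothetical policy: compress harder as the visual input gets longer."""
    if num_frames <= 1:
        return 1  # single image: keep every token (1x)
    if num_frames <= 16:
        return 2  # short clip: 4x fewer tokens per frame
    return 4      # long video: 16x fewer tokens per frame


# Usage: an 8x8 grid of 2-dimensional tokens from one frame of a long video.
grid = [[[float(r), float(c)] for c in range(8)] for r in range(8)]
pooled = compress_tokens(grid, choose_side(num_frames=64))
print(len(grid) * len(grid[0]), "->", len(pooled) * len(pooled[0]), "tokens")  # 64 -> 4 tokens
```

The trade-off this illustrates is the one the module targets: images keep full detail, while long videos spend fewer tokens per frame so more frames fit in the LLM's context.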

https://github.com/Oryx-mllm/Oryx

Project: AgentTorch

AgentTorch is an open-source platform designed to tackle challenges in computational efficiency and behavior modeling for large-scale agent simulations.

It optimizes GPU usage, making it possible to efficiently simulate the behaviors of entire cities or countries.

AgentTorch’s design focuses on scalability, differentiability, composability, and generalization.

It helps capture agents’ adaptive behaviors in complex environments, such as pandemics. Researchers can simulate millions of agents and analyze their decisions in various social and economic situations, providing insights for policymaking.
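
A common way to make agent simulations GPU-friendly is to store every agent's state in a tensor and apply the same update rule to the whole population in one batched operation. The sketch below is a plain-Python stand-in for that idea, not AgentTorch's API: a hypothetical SIR (susceptible, infected, recovered) pandemic model with made-up parameters `beta` and `gamma`, under a mean-field contact assumption. In a tensor framework, the per-agent loop inside `step` would become a single vectorized operation over millions of agents.

```python
import random
from typing import List, Tuple

SUSCEPTIBLE, INFECTED, RECOVERED = 0, 1, 2


def step(states: List[int], beta: float, gamma: float, rng: random.Random) -> List[int]:
    """Apply one day of a simple SIR epidemic rule to every agent."""
    # Mean-field assumption: each susceptible agent's infection risk is
    # proportional to the share of currently infected agents.
    infection_prob = beta * states.count(INFECTED) / len(states)
    new_states = []
    for s in states:
        if s == SUSCEPTIBLE and rng.random() < infection_prob:
            new_states.append(INFECTED)
        elif s == INFECTED and rng.random() < gamma:
            new_states.append(RECOVERED)
        else:
            new_states.append(s)
    return new_states


def simulate(num_agents: int, initial_infected: int, beta: float, gamma: float,
             days: int, seed: int = 0) -> List[Tuple[int, int, int]]:
    """Return daily (susceptible, infected, recovered) counts."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    states = [INFECTED] * initial_infected + [SUSCEPTIBLE] * (num_agents - initial_infected)
    history = []
    for _ in range(days):
        states = step(states, beta, gamma, rng)
        history.append((states.count(SUSCEPTIBLE),
                        states.count(INFECTED),
                        states.count(RECOVERED)))
    return history


history = simulate(num_agents=10_000, initial_infected=10, beta=0.3, gamma=0.1, days=60)
print(history[-1])
```

Researchers would then vary inputs such as `beta` (for example, to model a lockdown) and compare the resulting curves, which is the kind of policy analysis the paragraph above describes.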

https://github.com/AgentTorch/AgentTorch

Project: MAgICoRe

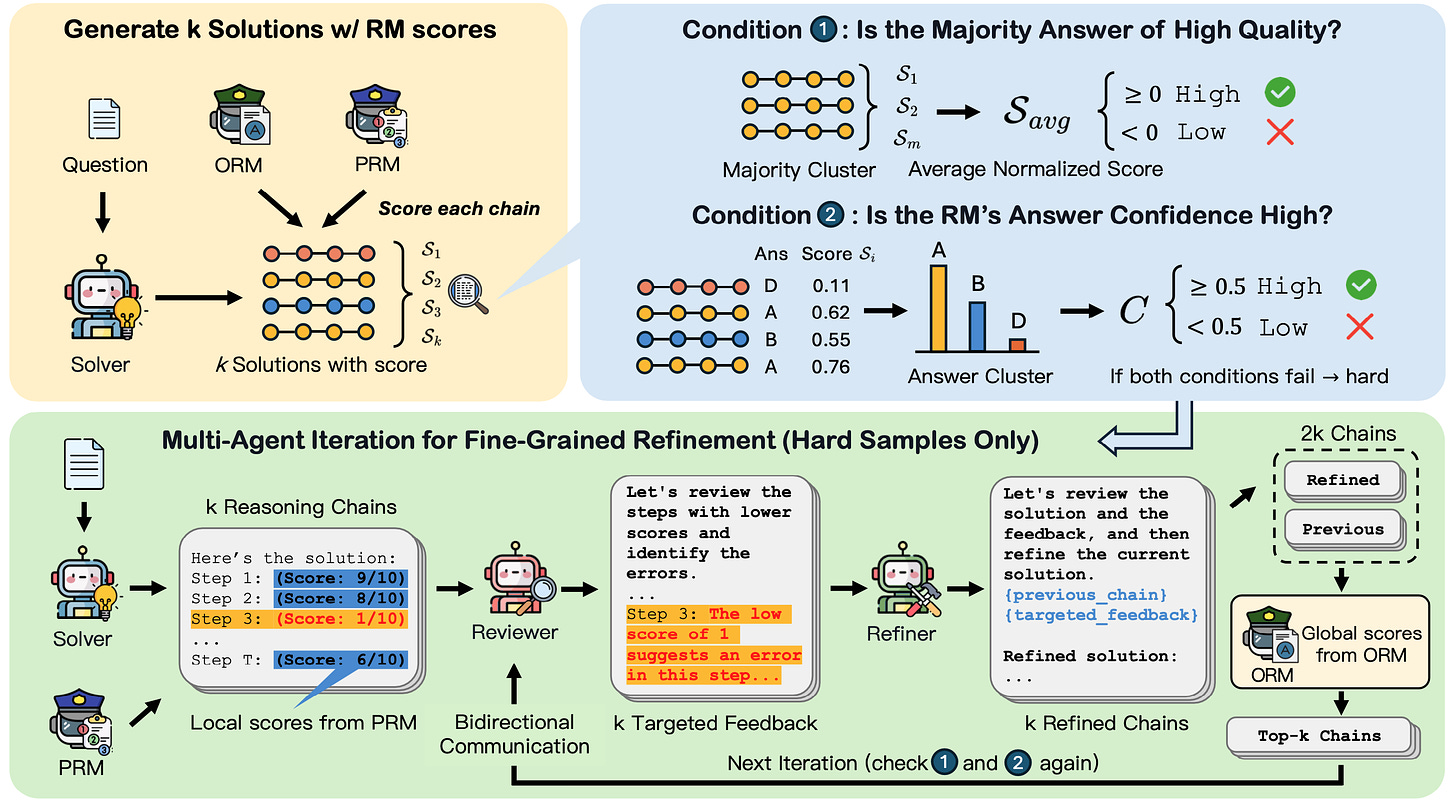

MAgICoRe is a multi-agent reasoning framework designed to solve complex reasoning tasks through a step-by-step refinement process.

Its key idea is to generate multiple reasoning chains and refine them for difficult cases, improving accuracy and efficiency.

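MAgICoRe introduces a difficulty-aware mechanism that splits problems into "easy" and "hard" categories. Easy problems are solved by aggregating the sampled reasoning chains, while hard problems go through iterative refinement by three collaborating agents (a Solver, a Reviewer, and a Refiner), which helps the model identify and correct errors and improves the quality of its answers.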
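
The routing logic can be sketched as follows. This is a simplified illustration under stated assumptions, not MAgICoRe's implementation: it uses the agreement rate of a majority vote as the difficulty signal, the `easy_threshold` value is made up, and `review` and `rewrite` are hypothetical stand-ins for the Reviewer and Refiner agents, which in practice would be LLM calls.

```python
from collections import Counter
from typing import Callable, List, Tuple


def majority_vote(answers: List[str]) -> Tuple[str, float]:
    """Return the most common final answer and the share of chains that agree."""
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)


def refine_loop(solution: str,
                review: Callable[[str], str],
                rewrite: Callable[[str, str], str],
                max_rounds: int = 3) -> str:
    """Reviewer/Refiner loop: stop once the reviewer has no feedback."""
    for _ in range(max_rounds):
        feedback = review(solution)
        if not feedback:
            break
        solution = rewrite(solution, feedback)
    return solution


def solve(answers: List[str],
          review: Callable[[str], str],
          rewrite: Callable[[str, str], str],
          easy_threshold: float = 0.7) -> Tuple[str, str]:
    """Route a problem by difficulty, using answer agreement as the proxy."""
    answer, agreement = majority_vote(answers)
    if agreement >= easy_threshold:
        return answer, "easy"  # coarse-grained aggregation is enough
    return refine_loop(answer, review, rewrite), "hard"


# Toy stand-ins for the Reviewer and Refiner agents (hypothetical).
def review(solution: str) -> str:
    return "" if solution == "42" else "check the last step"


def rewrite(solution: str, feedback: str) -> str:
    return "42"


print(solve(["42", "42", "42", "41"], review, rewrite))  # ('42', 'easy')
print(solve(["41", "40", "39", "41"], review, rewrite))  # ('42', 'hard')
```

The design point is efficiency: the expensive multi-agent loop only runs when the cheap aggregation step signals that the sampled chains disagree.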

https://github.com/dinobby/MAgICoRe

Project: OneGen

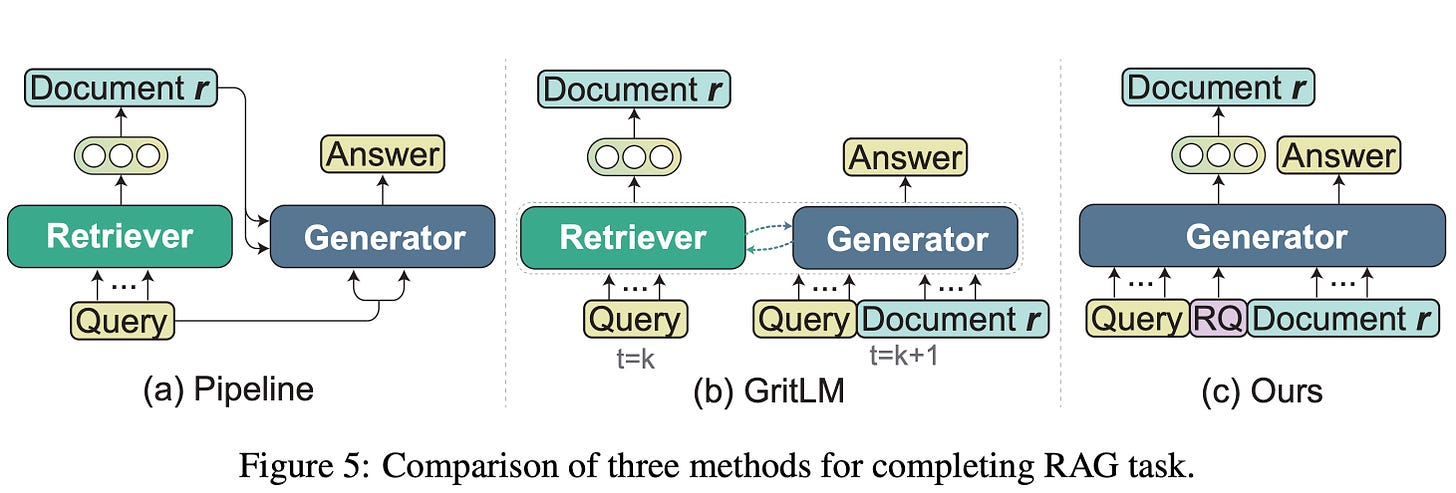

OneGen is a framework that unifies generation and retrieval for large language models (LLMs) in a single pass.

Its main idea is to allocate retrieval tasks to special tokens generated during autoregressive generation, so that both tasks are handled by the same model within the same context. Because a single model serves both tasks, this reduces deployment costs and significantly lowers inference costs.
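
A minimal sketch of the idea, under stated assumptions: as the generator emits tokens, any occurrence of a special retrieval token has its hidden state reused directly as a query embedding, so no separate retriever model is called. The token name `[RQ]`, the hand-written `(token, hidden_state)` pairs standing in for a real model's forward pass, and the cosine-similarity index are all hypothetical illustrations, not OneGen's code.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
RETRIEVAL_TOKEN = "[RQ]"  # hypothetical special token name


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Vector, index: Dict[str, Vector]) -> str:
    """Return the id of the document whose embedding is most similar to the query."""
    return max(index, key=lambda doc_id: cosine(query, index[doc_id]))


def generate_with_retrieval(steps: List[Tuple[str, Vector]],
                            index: Dict[str, Vector]) -> Tuple[List[str], List[str]]:
    """Walk one generation pass; each retrieval token's hidden state doubles
    as the query vector, so retrieval needs no extra encoder call."""
    tokens: List[str] = []
    retrieved: List[str] = []
    for token, hidden in steps:
        tokens.append(token)
        if token == RETRIEVAL_TOKEN:
            retrieved.append(retrieve(hidden, index))
    return tokens, retrieved


# Hand-written stand-ins for what a model's forward pass would produce.
index = {"doc_paris": [1.0, 0.0], "doc_tokyo": [0.0, 1.0]}
steps = [("The", [0.3, 0.3]), ("capital", [0.2, 0.1]),
         (RETRIEVAL_TOKEN, [0.9, 0.1]), ("is", [0.1, 0.2])]
tokens, retrieved = generate_with_retrieval(steps, index)
print(retrieved)  # ['doc_paris']
```

The cost saving comes from reuse: the query embedding is a by-product of generation, rather than the output of a second model that must be deployed and run separately.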

https://github.com/zjunlp/onegen

Project: VPTQ

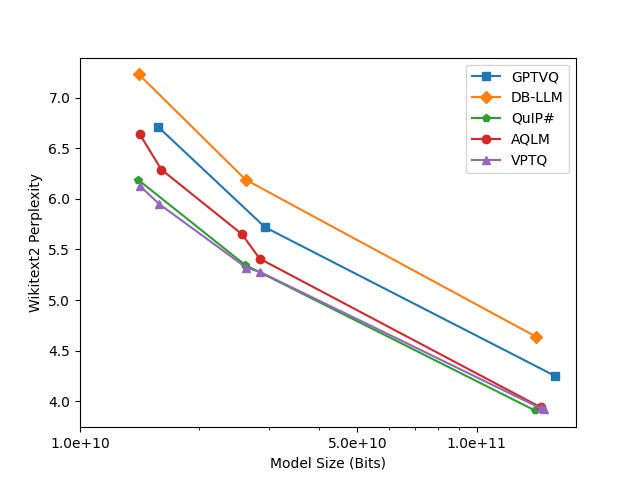

VPTQ is a novel post-training quantization method that uses vector quantization to achieve high accuracy at extremely low bit-widths (below 2 bits).

It can compress 70B- and even 405B-parameter models to 1-2 bits without retraining while maintaining high accuracy. This reduces memory requirements and storage costs, and lowers memory bandwidth requirements during inference.
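
The reason vector quantization can go below 2 bits is that a whole vector of weights is replaced by a single codebook index, so the index cost is shared across the vector: with vectors of length 8 and a 65,536-entry codebook, each weight costs log2(65536) / 8 = 2 bits. The sketch below shows that arithmetic plus naive nearest-centroid assignment with a hand-picked codebook. It is an illustration, not VPTQ's algorithm, whose codebook construction and assignment are more sophisticated, and the bit counts ignore codebook storage overhead.

```python
import math
from typing import List

Vector = List[float]


def bits_per_weight(vector_len: int, codebook_size: int) -> float:
    """Index bits per weight when each vector_len-long weight vector is one codebook index.

    Ignores the (small) cost of storing the codebook itself.
    """
    return math.log2(codebook_size) / vector_len


def model_size_gb(num_params: float, bits: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


def quantize(weights: List[float], codebook: List[Vector]) -> List[int]:
    """Replace each consecutive vector of weights with its nearest codebook entry's index."""
    v = len(codebook[0])
    if len(weights) % v:
        raise ValueError("number of weights must be a multiple of the vector length")
    indices = []
    for start in range(0, len(weights), v):
        chunk = weights[start:start + v]
        best = min(range(len(codebook)),
                   key=lambda i: sum((w - c) ** 2 for w, c in zip(chunk, codebook[i])))
        indices.append(best)
    return indices


def dequantize(indices: List[int], codebook: List[Vector]) -> List[float]:
    """Rebuild approximate weights by looking up each index in the codebook."""
    out: List[float] = []
    for i in indices:
        out.extend(codebook[i])
    return out


print(bits_per_weight(8, 65536))                          # 2.0
print(model_size_gb(70e9, 16), model_size_gb(70e9, 2))    # 140.0 17.5
```

By the same arithmetic, a 405B-parameter model at 2 bits per weight needs roughly 101 GB for its weights, versus about 810 GB in 16-bit precision.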