Today's Open Source (2024-09-30): China Telecom Releases TeleChat2 Star Semantic Model

Explore cutting-edge AI projects: TeleChat2's powerful semantic model, CogView3's image generation, ChatMLX for secure chats, Outspeed SDK, Llama Assistant, and MemoryScope's long-term memory for LLMs

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: TeleChat2

TeleChat2 is a large language model developed by China Telecom's AI Research Institute. It was trained entirely on domestic (Chinese) computing power.

The open-source TeleChat2-115B model was trained on 10 trillion tokens of high-quality bilingual data. Multi-format, multi-platform weights for this model are also released.

Improvements in data and training methods have significantly enhanced TeleChat2's performance in general Q&A, knowledge tasks, coding, and math compared to TeleChat1.

https://github.com/Tele-AI/TeleChat2

Project: CogView3

CogView3 is a new text-to-image generation system using relay diffusion technology.

It breaks high-resolution image generation into stages. Gaussian noise is added to a low-resolution image, and the next diffusion stage starts from that noisy image instead of from pure noise.
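To make the staged idea concrete, here is a toy sketch in plain Python of how the starting point for a higher-resolution stage could be built: upsample a low-resolution image, then add Gaussian noise. It is an illustration only, not CogView3's code. The function names, the nearest-neighbour upsampling, and the `sigma` value are all assumptions, and the real system operates on model latents with its own noise schedule.

```python
import random

def upsample_nearest(image, factor):
    """Nearest-neighbour upsampling of a 2D grid of floats (assumed method)."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

def add_gaussian_noise(image, sigma, rng):
    """Add independent zero-mean Gaussian noise to every pixel."""
    return [[px + rng.gauss(0.0, sigma) for px in row] for row in image]

def relay_start(low_res, factor=2, sigma=0.5, seed=0):
    """Hypothetical helper: noisy, upsampled image that a later stage would denoise."""
    rng = random.Random(seed)
    return add_gaussian_noise(upsample_nearest(low_res, factor), sigma, rng)

low_res = [[0.0, 1.0], [1.0, 0.0]]   # stand-in for a stage-one output
start = relay_start(low_res)
print(len(start), len(start[0]))     # 4 4
```

A later stage would then run its denoising steps from `start`, which already carries the coarse layout of the low-resolution image.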

Through progressive distillation, CogView3 cuts inference time to about one tenth of SDXL's while maintaining output quality.

https://github.com/THUDM/CogView3

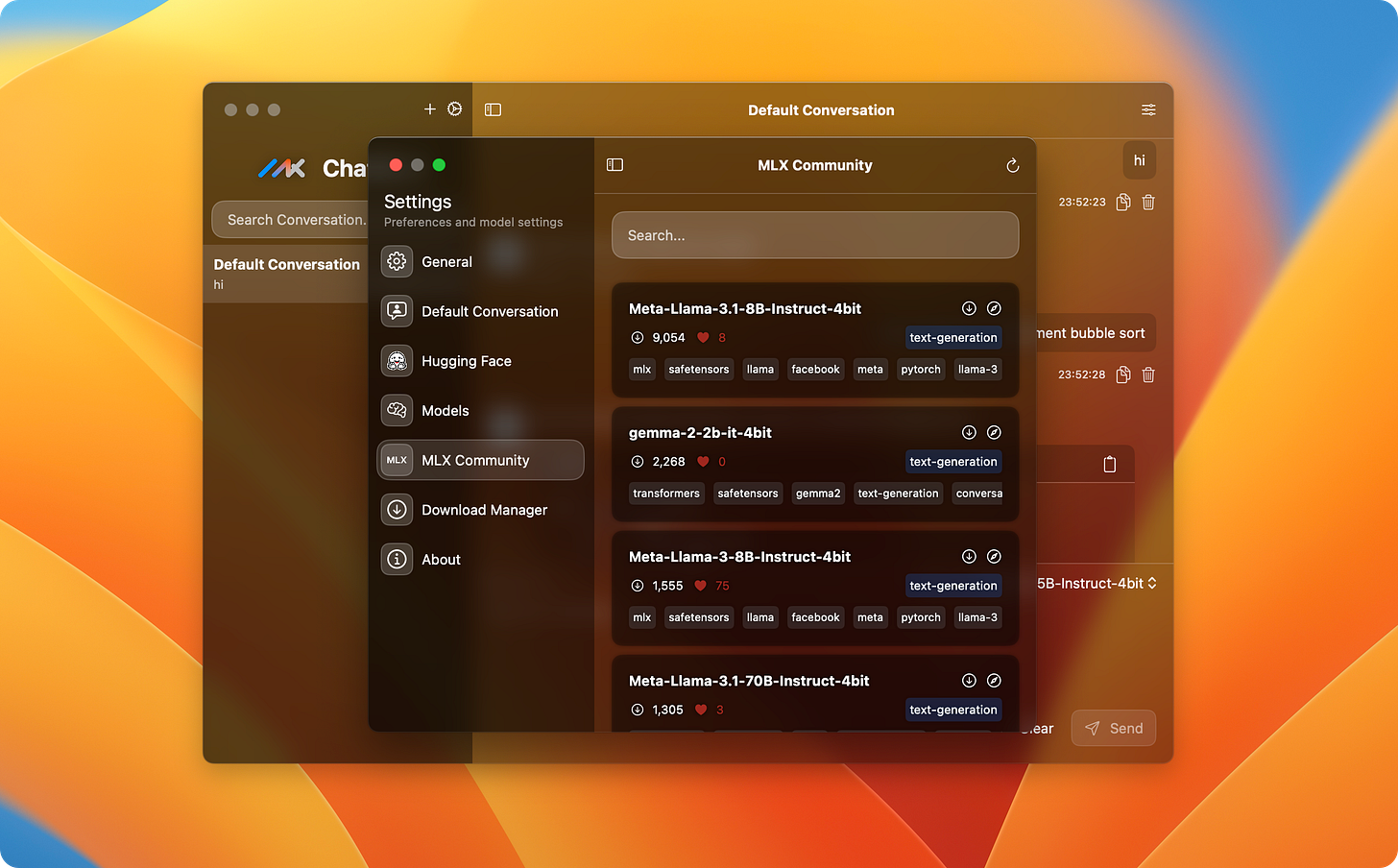

Project: ChatMLX

ChatMLX is a modern open-source, high-performance chat app powered by large language models and optimized for MLX and Apple silicon.

It supports various models, offering diverse chat options. The app runs LLMs locally, ensuring user privacy and security.

https://github.com/maiqingqiang/ChatMLX

Project: Outspeed

Outspeed is a PyTorch-inspired SDK for building real-time AI apps based on voice and video input. It provides low-latency streaming for audio and video, an intuitive API for PyTorch users, flexible AI model integration, data preprocessing, and deployment tools.

It is ideal for developing real-time AI apps like voice assistants and video analysis.

https://github.com/outspeed-ai/outspeed

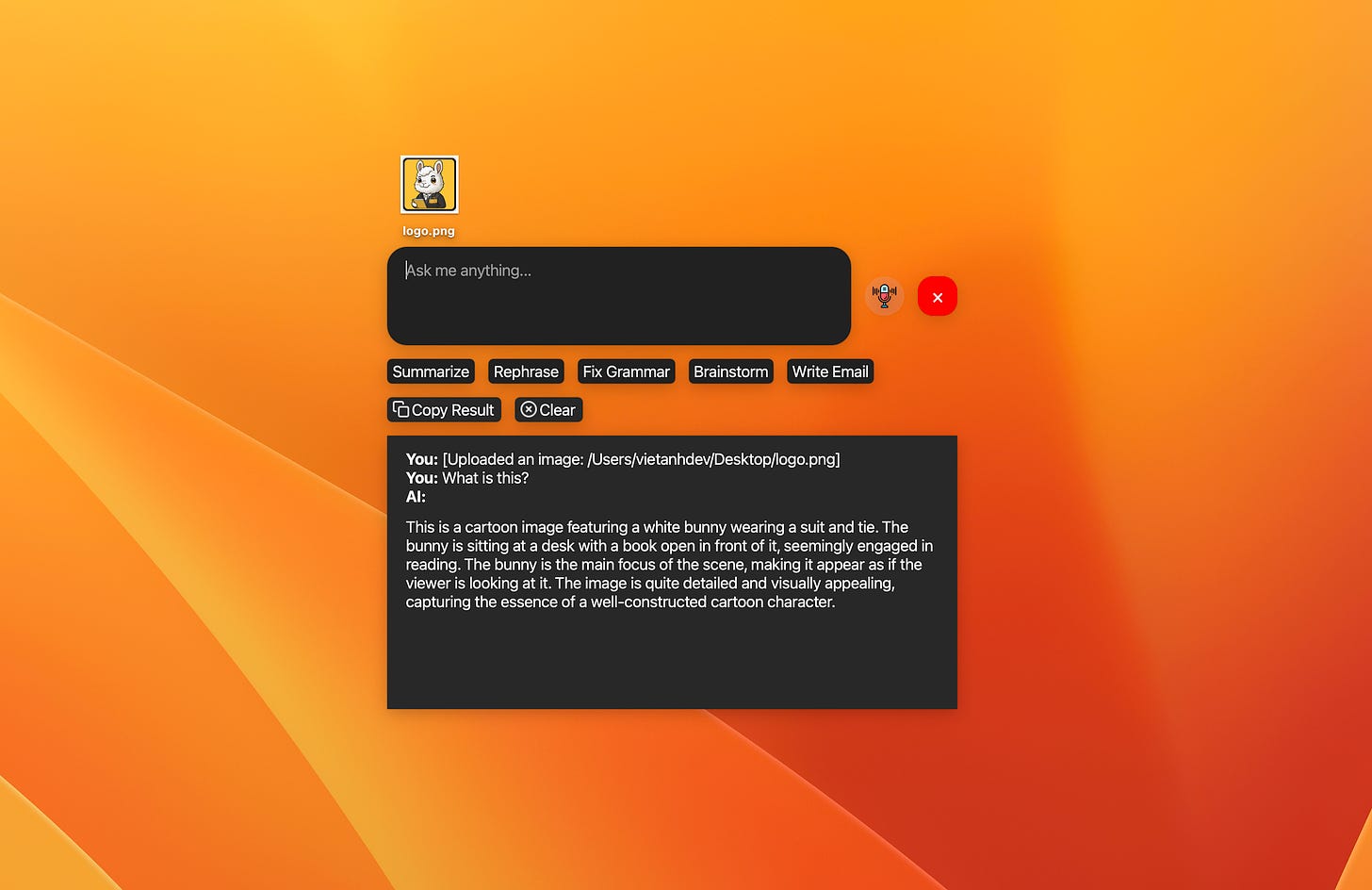

Project: Llama Assistant

Llama Assistant is an AI tool based on Llama 3.2, designed to assist with daily tasks.

It recognizes your voice, processes natural language, and can perform actions like summarizing text, rephrasing sentences, answering questions, and writing emails.

The assistant runs offline on your device, ensuring your privacy by not sending data to external servers.

https://github.com/vietanhdev/llama-assistant

Project: MemoryScope

MemoryScope is a framework for giving LLM chatbots powerful, flexible long-term memory.

It’s useful for personal assistants and emotional companions, allowing LLMs to learn and remember user preferences, making interactions feel more intuitive over time.