Today's Open Source (2024-10-10): Gradio 5 Launch—Build Efficient AI Apps in Just a Few Lines of Code!

Explore the latest AI open-source models, including Gradio 5, MCTS-LLM, and Fira. Build efficient applications with ease and enhance LLM performance today!

Here are some interesting AI open-source models and frameworks I wanted to share today:

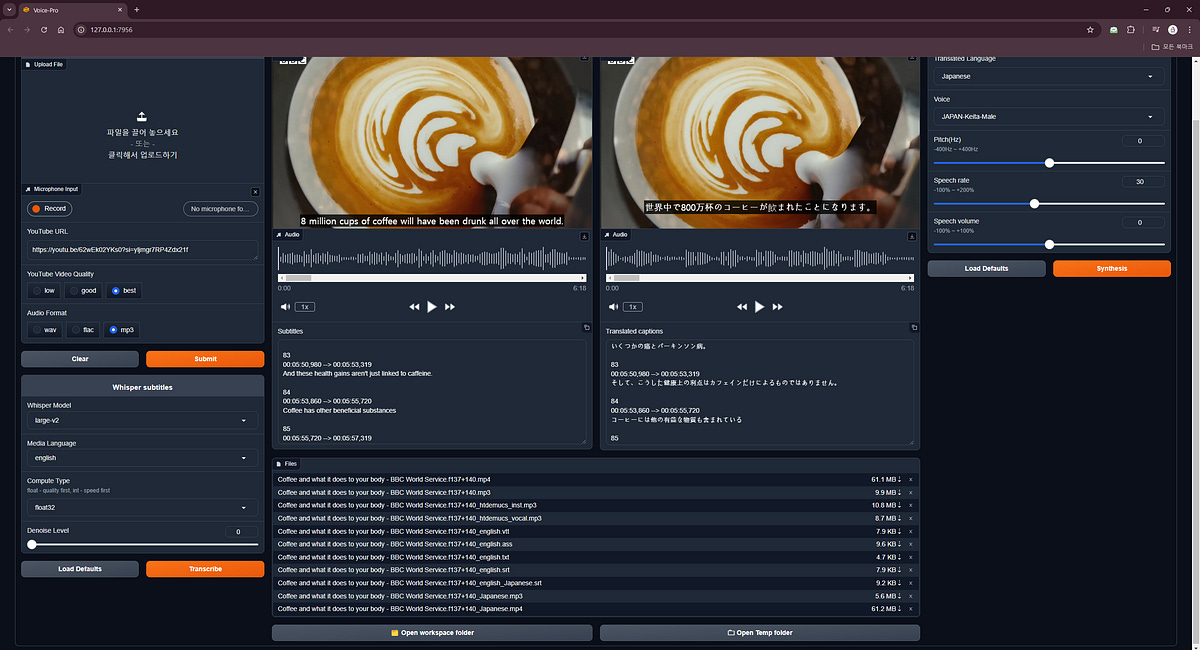

Project: Gradio 5

The latest version of Gradio aims to bridge the gap between the expertise of machine learning practitioners and web development skills.

Gradio 5 is designed for building applications that are high-performance, scalable, and visually polished, and that follow web security best practices.

With simple Python code, developers can quickly create and deploy machine learning applications.

It also introduces server-side rendering, modern design, low-latency streaming support, and an experimental AI Playground, further enhancing the development experience.
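As a rough illustration of the "few lines of Python" idea, here is a minimal sketch of a Gradio app. The `greet` function and the `build_demo` helper are placeholder names made up for this example; `greet` stands in for a real model's prediction function.

```python
def greet(name: str) -> str:
    """Placeholder for a model's predict function."""
    return f"Hello, {name}!"


def build_demo():
    """Wrap greet() in a simple web UI (requires `pip install gradio`)."""
    # Imported lazily so greet() can be used without Gradio installed.
    import gradio as gr

    # One text box in, one text box out.
    return gr.Interface(fn=greet, inputs="text", outputs="text")
```

Calling `build_demo().launch()` should start a local web server hosting the app.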

https://github.com/huggingface/blog/blob/main/gradio-5.md

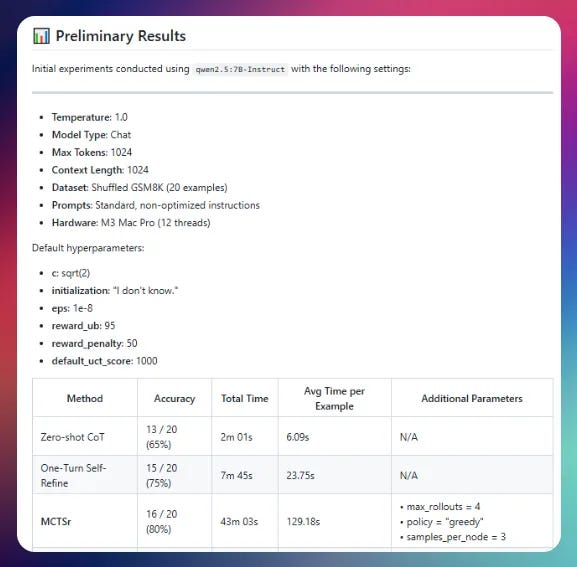

Project: MCTS-LLM

MCTS-LLM is a lightweight project that combines Monte Carlo Tree Search (MCTS) with prompt engineering techniques to enhance the performance of large language models (LLMs).

The project aims to improve the response quality of LLMs by spending more computation at inference time rather than during training. It also supports fine-tuning prompt instructions and benchmarking different MCTS adaptations.
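To make the MCTS idea concrete, here is a minimal, generic sketch of the select / expand / evaluate / backpropagate loop on a toy string problem. It is not the project's implementation: `propose` and `score` are hypothetical stand-ins for what would be LLM calls (generating candidate refinements and rating an answer), and the `TARGET` string exists only for the toy example.

```python
import math


class Node:
    def __init__(self, state, parent=None):
        self.state = state
        self.parent = parent
        self.children = []
        self.visits = 0
        self.total = 0.0

    def ucb1(self, c=1.4):
        # Unvisited nodes are tried first.
        if self.visits == 0:
            return float("inf")
        exploit = self.total / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def mcts(root_state, propose, score, iterations=200):
    """Return the best-scoring state evaluated during the search."""
    root = Node(root_state)
    best_state, best_reward = root_state, float("-inf")
    for _ in range(iterations):
        # 1. Selection: follow the highest UCB1 child down to a leaf.
        node = root
        while node.children:
            node = max(node.children, key=Node.ucb1)
        # 2. Expansion: grow a leaf that has already been evaluated once.
        if node.visits > 0:
            node.children = [Node(s, node) for s in propose(node.state)]
            if node.children:
                node = node.children[0]
        # 3. Evaluation: with an LLM this would be a judge or reward call.
        reward = score(node.state)
        if reward > best_reward:
            best_state, best_reward = node.state, reward
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.total += reward
            node = node.parent
    return best_state


# Toy stand-ins for LLM calls (assumptions for illustration only).
TARGET = "abba"


def propose(state):
    return [state + ch for ch in "ab"] if len(state) < len(TARGET) else []


def score(state):
    return sum(a == b for a, b in zip(state, TARGET)) / len(TARGET)


best = mcts("", propose, score)
```

In an LLM setting, each state would be a candidate answer or reasoning trace rather than a short string, so every iteration costs extra inference calls; that is the trade-off the project is exploring.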

https://github.com/NumberChiffre/mcts-llm

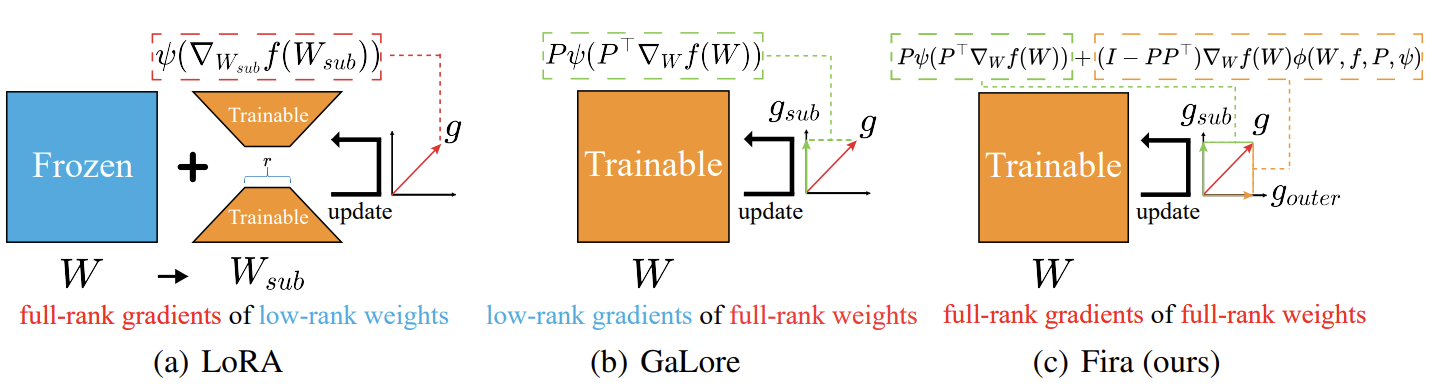

Project: Fira

Fira is a memory-efficient training framework for large language models (LLMs).

Unlike LoRA and GaLore, Fira achieves full-rank training while keeping the low-rank constraint that makes those methods memory-efficient.

According to the authors, this is the first attempt to achieve full-rank training while consistently maintaining a low-rank constraint. The method is also easy to implement: its core can be written as two lines of equations.
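The sketch below is based on my reading of the Fira paper, which describes two ingredients: a norm-based scaling of the gradient component that falls outside the low-rank subspace, and a norm-growth limiter that caps sudden jumps in gradient norm. Treat it as an illustration under those assumptions, not the repository's code. The function names are hypothetical, tensors are flattened to plain lists, and the default `gamma=1.01` is an assumed value.

```python
import math


def l2_norm(values):
    return math.sqrt(sum(v * v for v in values))


def scale_residual(residual, lowrank_grad, lowrank_update):
    """Norm-based scaling (assumed form).

    Reuse the ratio by which the optimizer rescaled the low-rank gradient
    to rescale the residual gradient outside the low-rank subspace.
    """
    grad_norm = l2_norm(lowrank_grad)
    if grad_norm == 0.0:
        return list(residual)  # nothing to calibrate against
    phi = l2_norm(lowrank_update) / grad_norm
    return [phi * r for r in residual]


def limit_norm_growth(current, previous_norm, gamma=1.01):
    """Norm-growth limiter (assumed form).

    If the norm grew by more than a factor of gamma since the previous
    step, shrink the vector so the growth is exactly gamma.
    """
    norm = l2_norm(current)
    if previous_norm > 0 and norm / previous_norm > gamma:
        scale = gamma * previous_norm / norm
        return [scale * c for c in current]
    return list(current)
```

For example, if the optimizer halved the norm of the low-rank gradient, `scale_residual` halves the residual as well, so both parts of the update are scaled consistently.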

https://github.com/xichen-fy/fira

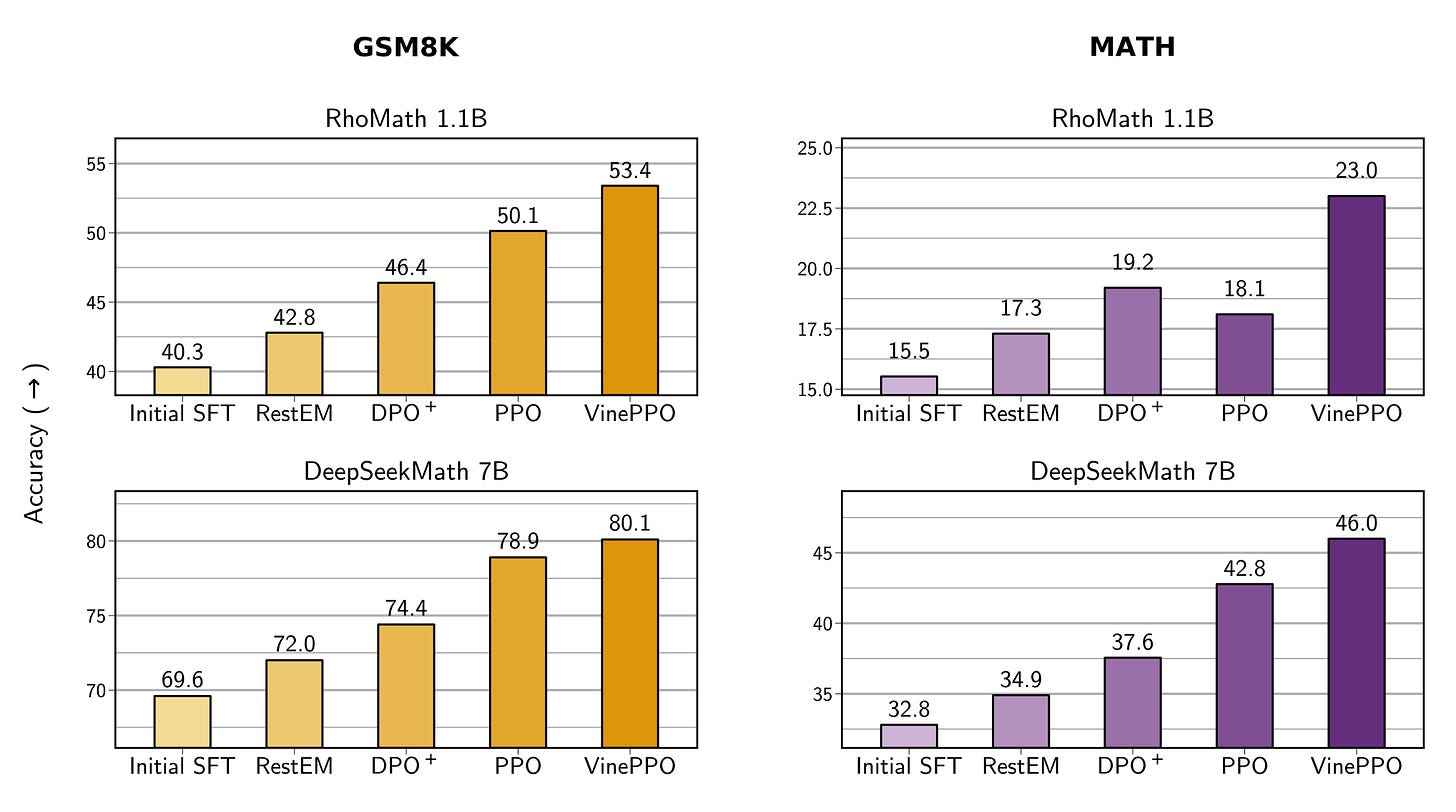

Project: VinePPO

VinePPO is a reinforcement learning method for large language model (LLM) reasoning, designed to improve model performance through fine-grained credit assignment.

The project proposes a simple approach that exploits the flexibility of language environments, where generation can be restarted from any intermediate state, to compute unbiased Monte Carlo value estimates, thereby eliminating the need for a large value network.
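The idea of replacing a value network with Monte Carlo estimates can be sketched as follows. This is a simplified illustration, not the project's code: it assumes a single reward at the end of the response and no discounting, and `rollout` is a hypothetical callable that would, in practice, sample a completion from the LLM starting at the given prefix and return its reward.

```python
from typing import Callable, List, Sequence


def mc_value(prefix: Sequence[str],
             rollout: Callable[[Sequence[str]], float],
             k: int = 4) -> float:
    """Estimate the value of a partial response by averaging k rollouts."""
    return sum(rollout(prefix) for _ in range(k)) / k


def step_advantages(steps: List[str],
                    rollout: Callable[[Sequence[str]], float],
                    final_reward: float,
                    k: int = 4) -> List[float]:
    """Per-step advantage = value after the step minus value before it."""
    values = [mc_value(steps[:i], rollout, k) for i in range(len(steps))]
    values.append(final_reward)  # the finished response has a known reward
    return [values[i + 1] - values[i] for i in range(len(steps))]


# Deterministic toy rollout (an assumption for illustration): a completion
# succeeds only if the prefix already contains the key step.
def toy_rollout(prefix: Sequence[str]) -> float:
    return 1.0 if "key step" in prefix else 0.0


advantages = step_advantages(["setup", "key step", "wrap up"],
                             toy_rollout, final_reward=1.0)
```

In this toy run all of the credit lands on the step that actually changes the chance of success, which is the kind of fine-grained credit assignment the method is after.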

https://github.com/mcgill-nlp/vineppo

Project: SparseLLM

SparseLLM is a global pruning project for pre-trained large language models (LLMs). It uses pruning to reduce the number of model parameters, improving computational efficiency and resource utilization.

The project supports pruning for OPT and LLaMA models and offers various sparsity methods, including unstructured pruning and semi-structured N:M sparsity.

Although its iterative optimization process may take longer than single-pass pruning methods, it has potential advantages in performance and numerical stability.
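For readers unfamiliar with semi-structured N:M sparsity, the sketch below shows what the pattern means: in every group of M consecutive weights, at most N stay non-zero. It selects weights by magnitude, which is a simple stand-in criterion chosen for illustration; SparseLLM's own global pruning objective is different. The function name is hypothetical.

```python
def prune_n_m(weights, n=2, m=4):
    """Keep the n largest-magnitude weights in each group of m; zero the rest."""
    if len(weights) % m != 0:
        raise ValueError("number of weights must be a multiple of m")
    pruned = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        # Indices of the n largest-magnitude entries in this group.
        keep = set(sorted(range(m), key=lambda i: abs(group[i]), reverse=True)[:n])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


row = [0.1, -0.9, 0.5, 0.05, 1.0, 2.0, 3.0, 4.0]
sparse_row = prune_n_m(row)  # 2:4 pattern: two non-zeros per group of four
```

The regular pattern is what makes this form of sparsity attractive: hardware and kernels can exploit a fixed number of non-zeros per group more easily than a fully unstructured mask.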

https://github.com/BaiTheBest/SparseLLM

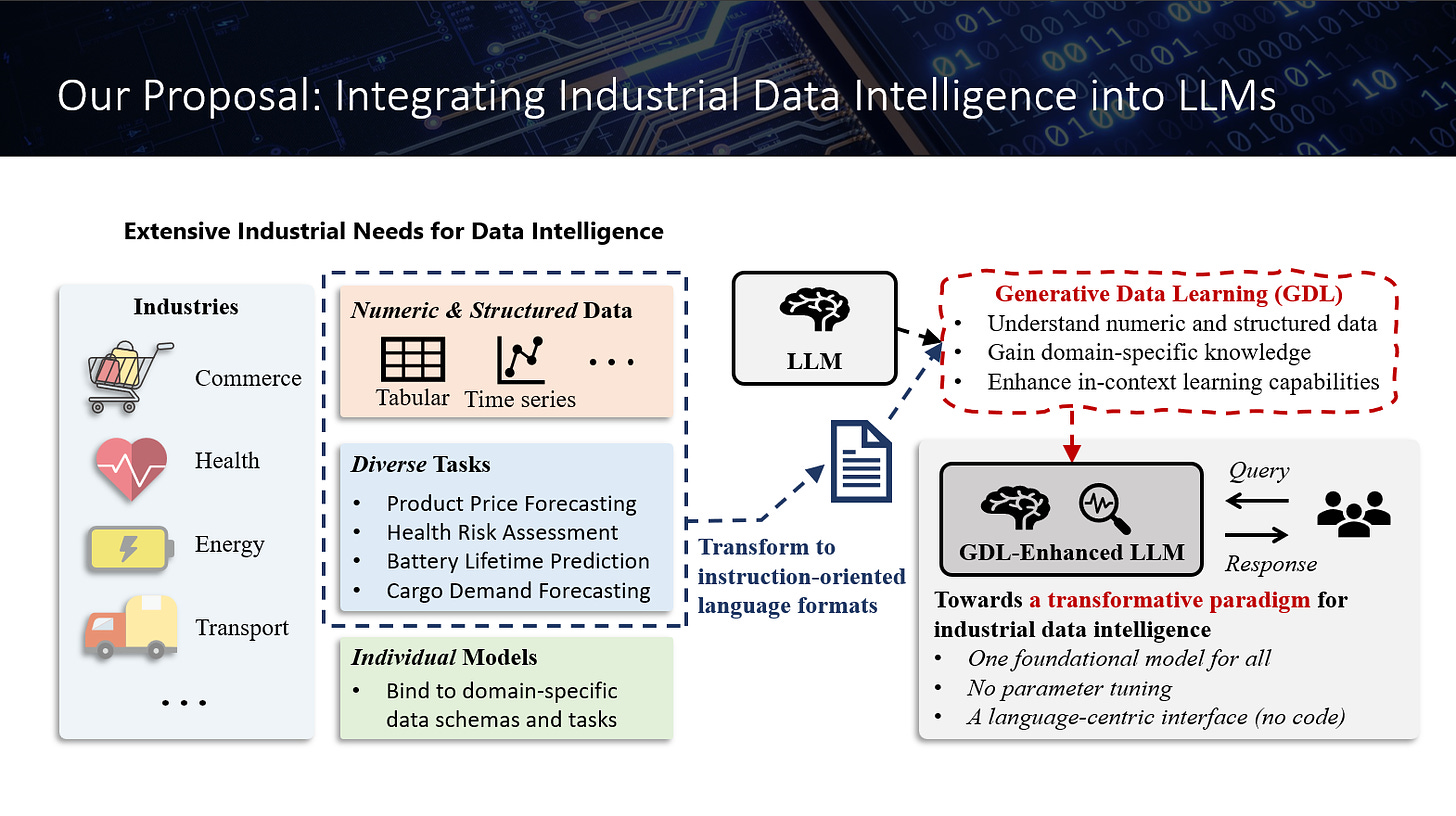

Project: Industrial Foundation Models

The Industrial Foundation Models (IFMs) project is dedicated to building foundation models for industry, with the goal of universal data intelligence across sectors. By combining industrial data intelligence with large language models (LLMs), the project aims to improve instruction following, cross-task and cross-industry knowledge extraction, prediction, and logical reasoning.

By training enhanced LLMs on tabular data, IFMs show strong generalization to unseen data and tasks, which the project presents as a driver of innovation in data science applications.