Today’s Open Source (2024-10-08): The New 3D Generation Model 3DTopia-XL

Discover cutting-edge AI tools like 3DTopia-XL for 3D asset creation, LiveKit Agents for real-time AI interactions, and Bolt.new for browser-based full-stack app development.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: 3DTopia-XL

3DTopia-XL is a native 3D generative model built on a diffusion transformer (DiT). It generates 3D assets with smooth geometry and physically based rendering (PBR) textures from a single image or a text prompt, which makes it a good fit for applications that need realistic, high-quality 3D models.

https://github.com/3DTopia/3DTopia-XL

Project: LiveKit Agents

LiveKit Agents is a framework for building AI-driven server programs that can “see, hear, and speak” in real time. Agents join LiveKit sessions, process text, audio, image, or video streams from user devices, and stream their own outputs in any of these modalities back to the user in real time. The framework supports multimodal APIs and uses WebRTC transport for ultra-low latency.

https://docs.livekit.io/agents/
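To make the "see, hear, and speak in real time" idea concrete, here is a minimal sketch of a streaming voice pipeline built only on Python's asyncio. It is not the LiveKit Agents API: `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical stubs standing in for speech-to-text, an LLM, and text-to-speech. What it shows is the general shape such agents take: independent stages connected by queues, each processing an item as soon as it arrives instead of waiting for the whole input.

```python
import asyncio

# Hypothetical stand-ins for STT, LLM, and TTS services.
# None of these are LiveKit Agents APIs; they only mimic a voice pipeline's shape.
async def fake_stt(audio_chunk: bytes) -> str:
    return audio_chunk.decode("utf-8")  # pretend the "audio" is already text

async def fake_llm(text: str) -> str:
    return f"You said: {text}"

async def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend encoded text is synthesized audio

async def stage(fn, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Apply fn to every item as it arrives; None marks the end of the stream."""
    while True:
        item = await inbox.get()
        if item is None:
            await outbox.put(None)  # propagate end-of-stream downstream
            return
        await outbox.put(await fn(item))

async def run_pipeline(chunks: list[bytes]) -> list[bytes]:
    mic, text_in, text_out, speaker = (asyncio.Queue() for _ in range(4))
    # All three stages run concurrently, so the first reply can be produced
    # while later chunks are still being fed in.
    workers = [
        asyncio.create_task(stage(fake_stt, mic, text_in)),
        asyncio.create_task(stage(fake_llm, text_in, text_out)),
        asyncio.create_task(stage(fake_tts, text_out, speaker)),
    ]
    for chunk in chunks:
        await mic.put(chunk)
    await mic.put(None)

    replies = []
    while (reply := await speaker.get()) is not None:
        replies.append(reply)
    await asyncio.gather(*workers)
    return replies

if __name__ == "__main__":
    print(asyncio.run(run_pipeline([b"hello", b"how are you?"])))
```

In a real agent the stubs would be replaced by actual model calls and the queues by media tracks from a session; the queue-per-stage layout is just one simple way to keep every stage busy at once.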

Project: TEN Agent

TEN Agent is an open-source multimodal AI agent with voice, vision, and knowledge-base access. It is built on the TEN framework, which targets high-performance, low-latency AI applications that handle complex audio and video, and supports multiple languages and platforms. It combines edge and cloud deployment flexibly, helping to work around model limitations, and supports real-time agent state management.

https://github.com/TEN-framework/TEN-Agent

Project: Bolt.new

Bolt.new is an AI-driven full-stack web development agent that lets users prompt, run, edit, and deploy full-stack applications directly in the browser, with no local setup. It combines advanced AI models with StackBlitz’s WebContainers, so it can install npm tools and libraries, run Node.js servers, interact with third-party APIs, and deploy to production straight from the chat.

https://github.com/stackblitz/bolt.new

Project: LOTUS

LOTUS is a semantic query engine designed to help users process structured and unstructured data with large language models (LLMs) using a Pandas-like API. LOTUS extends the relational model with semantic operators, allowing users to build AI-based pipelines for high-level logical data processing. LOTUS provides a declarative programming model and an optimized query engine for building knowledge-intensive LLM applications.

https://github.com/TAG-Research/lotus
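The core idea of a "semantic operator" is a relational operator whose predicate is written in natural language and evaluated by a model. The sketch below implements a toy semantic filter over a list of rows in plain Python. It does not use LOTUS's own API; `sem_filter` and `keyword_model` here are hypothetical names, and `keyword_model` is a trivial keyword check standing in for a real LLM call so the example runs offline.

```python
from typing import Callable

Row = dict[str, str]
# Any callable that answers a yes/no prompt can serve as the "model".
YesNoModel = Callable[[str], bool]

def sem_filter(rows: list[Row], instruction: str, model: YesNoModel) -> list[Row]:
    """Keep rows for which the model judges the filled-in instruction to be true.

    `instruction` uses {column} placeholders, e.g. "{title} is about databases",
    much like a WHERE clause whose condition is written in natural language.
    """
    kept = []
    for row in rows:
        prompt = instruction.format(**row)  # substitute this row's column values
        if model(prompt):
            kept.append(row)
    return kept

def keyword_model(prompt: str) -> bool:
    # Hypothetical stand-in for an LLM: answers "true" if the claim mentions SQL.
    return "sql" in prompt.lower()

papers = [
    {"title": "Optimizing SQL joins"},
    {"title": "Diffusion models for 3D"},
    {"title": "Learned indexes for SQL engines"},
]

if __name__ == "__main__":
    print(sem_filter(papers, "{title} is about databases", keyword_model))
```

A real engine would batch prompts, cache results, and choose cheaper models or plans where possible; that kind of optimization is what separates a query engine like LOTUS from this row-by-row loop.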

Project: DreamScene

DreamScene is a novel text-to-3D scene generation method that combines objects and environments using Formation Pattern Sampling and a camera sampling strategy. It addresses the inefficiency, inconsistency, and limited editability of current text-to-3D scene generation methods.

https://github.com/DreamScene-Project/DreamScene

Project: Mirage

Mirage is a tool that automatically generates fast GPU kernels for PyTorch programs using superoptimization techniques. Users write a few lines of Python to describe a computation, and Mirage automatically searches for highly optimized GPU kernels, which can outperform existing custom kernels hand-written by experts.

https://github.com/mirage-project/mirage
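The "search for a faster kernel" loop can be illustrated in miniature: enumerate candidate implementations of one computation, discard any that disagree with a reference, and benchmark the rest. This is a generic search-verify-benchmark sketch, not Mirage's actual superoptimizer; plain Python functions stand in for generated GPU kernels, the candidate names are hypothetical, and the equivalence check is random testing rather than a formal proof.

```python
import operator
import random
import timeit
from typing import Callable, Sequence

# Every "kernel" computes the dot product of two equal-length vectors.
Kernel = Callable[[Sequence[float], Sequence[float]], float]

def reference(x: Sequence[float], y: Sequence[float]) -> float:
    # Reference semantics written the obvious way; candidates must match it.
    total = 0.0
    for a, b in zip(x, y):
        total += a * b
    return total

# Hypothetical candidates standing in for automatically generated kernels.
CANDIDATES: dict[str, Kernel] = {
    "generator_sum": lambda x, y: sum(a * b for a, b in zip(x, y)),
    "map_mul": lambda x, y: sum(map(operator.mul, x, y)),
    # Deliberately wrong: skips the last element, so verification should reject it.
    "buggy_truncated": lambda x, y: sum(a * b for a, b in zip(x[:-1], y[:-1])),
}

def is_equivalent(kernel: Kernel, trials: int = 20, n: int = 64, tol: float = 1e-9) -> bool:
    """Compare a candidate with the reference on random inputs (testing, not proof)."""
    rng = random.Random(0)
    for _ in range(trials):
        x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        y = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        expected = reference(x, y)
        # Relative tolerance absorbs floating-point differences in summation order.
        if abs(kernel(x, y) - expected) > tol * max(1.0, abs(expected)):
            return False
    return True

def search(candidates: dict[str, Kernel], n: int = 10_000) -> str:
    """Return the name of the fastest candidate that passes the equivalence check."""
    rng = random.Random(1)
    x = [rng.random() for _ in range(n)]
    y = [rng.random() for _ in range(n)]
    best_name, best_time = "", float("inf")
    for name, kernel in candidates.items():
        if not is_equivalent(kernel):
            continue  # never benchmark an incorrect kernel
        elapsed = min(timeit.repeat(lambda: kernel(x, y), number=5, repeat=3))
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    return best_name

if __name__ == "__main__":
    print(search(CANDIDATES))
```

Checking correctness before timing matters: a kernel that silently drops work, like `buggy_truncated`, will often look fastest, so the sketch rejects it before it is ever benchmarked.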

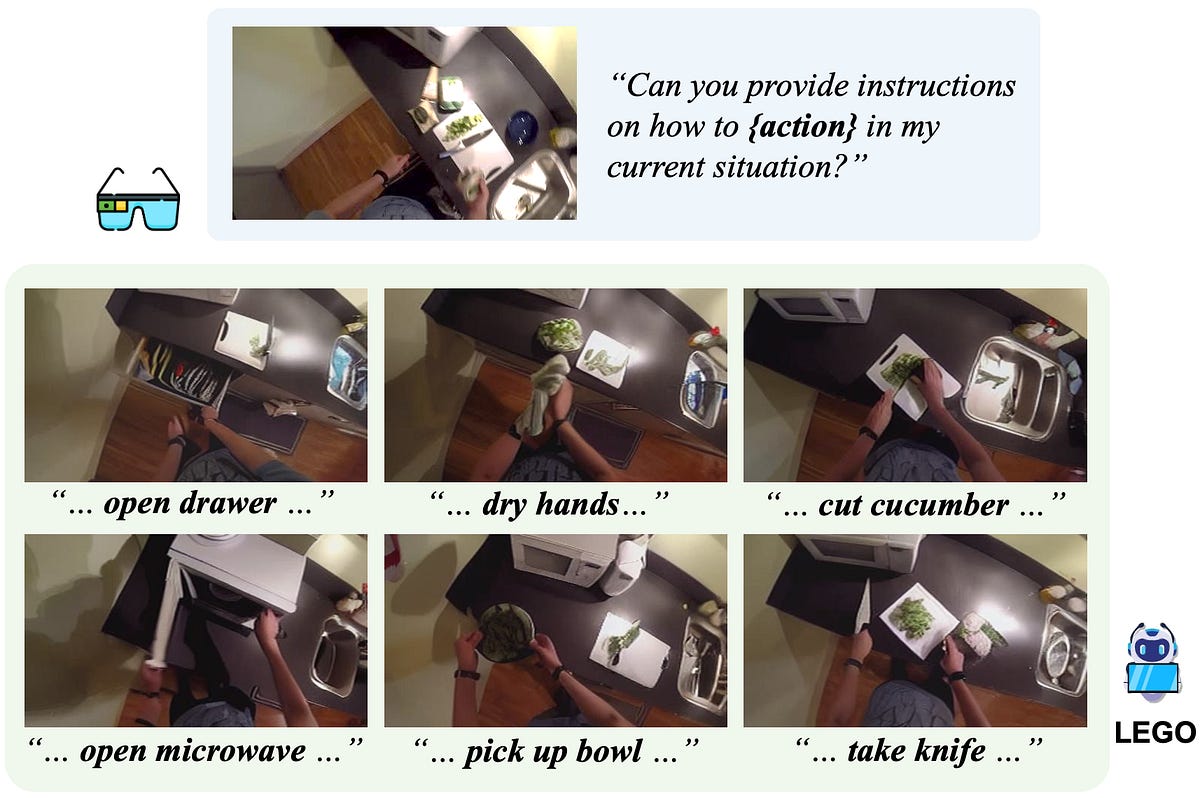

Project: LEGO

LEGO generates egocentric (first-person) action images from a user's query and a photo of the current scene, showing the user more precisely how to perform the next action. It fine-tunes a large language model to enrich the action descriptions, and uses that model's features to improve the diffusion model's image generation.

https://github.com/BolinLai/LEGO

Project: CE3D

Chat Edit 3D (CE3D) connects more than 20 visual models to a large language model (LLM) for interactive 3D scene editing through text prompts. Users can edit a 3D scene conversationally, much as they would chat with ChatGPT.

https://github.com/Fangkang515/CE3D/tree/main

Project: LLM Structured Output

LLM Structured Output is a library for constraining large language models (LLMs) to generate structured output that meets specific structural requirements, defined through JSON Schema and tool calls. Unlike approaches that first convert the schema into a formal grammar, it uses JSON Schema directly to guide generation, which enables more flexible and fine-grained control.
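To show what "meeting a JSON Schema" means in practice, here is a minimal validator for a small subset of JSON Schema (`type`, `enum`, `required`, `properties`, `items`), written with only the standard library. It checks a finished output after the fact; the library described above instead steers the model while it is decoding, which this sketch does not attempt. The function `validate` is a hypothetical helper, not part of that library.

```python
import json
from typing import Any

# Subset of JSON Schema type names mapped to the Python types json.loads produces.
TYPE_MAP = {
    "object": dict,
    "array": list,
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "null": type(None),
}

def validate(value: Any, schema: dict, path: str = "$") -> list[str]:
    """Return a list of error messages; an empty list means the value conforms."""
    expected = schema.get("type")
    if expected is not None:
        wrong_type = not isinstance(value, TYPE_MAP[expected])
        # bool is a subclass of int in Python, so reject it for numeric types.
        if isinstance(value, bool) and expected in ("integer", "number"):
            wrong_type = True
        if wrong_type:
            return [f"{path}: expected {expected}, got {type(value).__name__}"]

    errors = []
    if "enum" in schema and value not in schema["enum"]:
        errors.append(f"{path}: {value!r} not in {schema['enum']}")
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required property {key!r}")
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub_schema, f"{path}.{key}"))
    if isinstance(value, list) and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors

schema = {
    "type": "object",
    "required": ["name", "rating"],
    "properties": {
        "name": {"type": "string"},
        "rating": {"type": "integer", "enum": [1, 2, 3, 4, 5]},
    },
}

if __name__ == "__main__":
    good = json.loads('{"name": "LOTUS", "rating": 5}')
    bad = json.loads('{"name": "LOTUS", "rating": "five"}')
    print(validate(good, schema))  # → []
    print(validate(bad, schema))   # → ['$.rating: expected integer, got str']
```

Post-hoc validation like this can only reject a bad answer and ask for a retry; constraining generation against the same schema avoids producing the invalid output in the first place, which is the advantage libraries of this kind aim for.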